Top Papers of Last Week (ending 10-Aug-2025)

Top LLM / AI influential Papers from last week

Read time: 13 min


(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡Top Papers of last week (ending 10-Aug-2025):

🇨🇳 Breaking the Sorting Barrier for Directed Single-Source Shortest Paths

🗞️ "Self-Questioning Language Models"

🗞️ "R-Zero: Self-Evolving Reasoning LLM from Zero Data"

🗞️ "Efficient Agents: Building Effective Agents While Reducing Cost"

🗞️ Perch 2.0: The Bittern Lesson for Bioacoustics

🗞️ "ReaGAN: Node-as-Agent-Reasoning Graph Agentic Network"

🗞️ "Medical Reasoning in the Era of LLMs: A Systematic Review of Enhancement Techniques and Applications"

🗞️ "A comprehensive taxonomy of hallucinations in LLMs"

🗞️ "Agentic Web: Weaving the Next Web with AI Agents"

🗞️ "Is Chain-of-Thought Reasoning of LLMs a Mirage? A Data Distribution Lens"

🇨🇳 Breaking the Sorting Barrier for Directed Single-Source Shortest Paths

A Chinese university team dropped a landmark computer science achievement.

A canonical problem in computer science is to find the shortest route to every point in a network. A new approach beats the classic algorithm taught in textbooks.

This is the fastest shortest-paths algorithm in 40 years. It beats the famous `O(m + n log n)` bound of Dijkstra's algorithm with Fredman-Tarjan Fibonacci heaps by avoiding global sorting and working through a small set of pivot nodes.

Researchers showed a deterministic `O(m log^(2/3) n)` single-source shortest-paths algorithm for directed graphs with real non-negative weights in the comparison-addition model. It is provably faster than Dijkstra's `O(m + n log n)` bound on sparse graphs, which breaks the sorting barrier.

The usual approach keeps a global priority queue that effectively sorts up to n distance keys, so it hits a sorting wall near n log n.
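For reference, here is a textbook Dijkstra sketch using Python's `heapq` binary heap (so `O((m + n) log n)`, not the Fibonacci-heap bound). It makes the "sorting wall" concrete: vertices leave the global queue in nondecreasing distance order, so the settle order is a full sorted ranking of the reachable vertices. The graph and names are illustrative.

```python
import heapq
from typing import Dict, List, Tuple

# u -> [(v, weight), ...] with non-negative weights
Graph = Dict[str, List[Tuple[str, float]]]

def dijkstra(graph: Graph, source: str) -> Tuple[Dict[str, float], List[str]]:
    """Return (shortest distances from source, order in which vertices were settled)."""
    dist: Dict[str, float] = {source: 0.0}
    settled = set()
    order: List[str] = []          # settle order is nondecreasing in distance
    heap = [(0.0, source)]         # global priority queue keyed by tentative distance
    while heap:
        d, u = heapq.heappop(heap)
        if u in settled:
            continue               # stale entry: lazy deletion instead of decrease-key
        settled.add(u)
        order.append(u)
        for v, w in graph.get(u, []):
            nd = d + w
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                heapq.heappush(heap, (nd, v))
    return dist, order

g: Graph = {"s": [("a", 4.0), ("b", 1.0)], "b": [("a", 2.0), ("c", 5.0)], "a": [("c", 1.0)]}
dist, order = dijkstra(g, "s")
print(order)  # ['s', 'b', 'a', 'c']
```

The `order` list is exactly the full distance ranking that the new algorithm avoids producing.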

🧭 What changed vs Dijkstra

The team stops trying to keep the frontier perfectly sorted. Instead it still grows outward from the source like Dijkstra, but within each stage it runs a few Bellman-Ford-style relaxation rounds from the frontier and keeps only a small set of "pivot" nodes, each standing in for a cluster of nearby frontier vertices.
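A simplified sketch of the pivot idea, as we read it from the paper: run k rounds of Bellman-Ford-style relaxation from the current frontier; if that touches more than k times as many vertices as the frontier has, keep the whole frontier; otherwise keep as pivots only the frontier vertices whose relaxation trees contain at least k vertices (smaller trees are already finished by those k rounds). The function name, parameters, and `bound` handling are our own simplification; this omits the paper's recursion and its partial-sorting data structure entirely.

```python
from typing import Dict, List, Set, Tuple

Graph = Dict[str, List[Tuple[str, float]]]  # u -> [(v, weight)], weights >= 0

def find_pivots(graph: Graph, dist: Dict[str, float], frontier: Set[str],
                k: int, bound: float = float("inf")) -> Tuple[Set[str], Set[str]]:
    """Simplified (hypothetical) pivot selection. Mutates dist; returns (pivots, touched)."""
    parent: Dict[str, str] = {}
    touched = set(frontier)
    layer = set(frontier)
    for _ in range(k):  # k rounds of Bellman-Ford-style relaxation
        next_layer = set()
        for u in layer:
            for v, w in graph.get(u, []):
                nd = dist[u] + w
                if nd < dist.get(v, float("inf")):  # strict improvement keeps parents acyclic
                    dist[v] = nd
                    parent[v] = u
                    if nd < bound:
                        next_layer.add(v)
        touched |= next_layer
        if len(touched) > k * len(frontier):
            return set(frontier), touched  # too much work touched: keep the whole frontier
        layer = next_layer
    # Count the touched vertices (root included) hanging below each frontier root.
    tree_size = {s: 0 for s in frontier}
    for v in touched:
        root = v
        while root in parent:
            root = parent[root]
        if root in tree_size:
            tree_size[root] += 1
    pivots = {s for s, size in tree_size.items() if size >= k}
    return pivots, touched

g: Graph = {"s1": [("a", 1.0)], "a": [("b", 1.0)], "s2": [("c", 1.0)]}
pivots, touched = find_pivots(g, {"s1": 0.0, "s2": 0.0}, {"s1", "s2"}, k=3)
print(pivots)  # {'s1'}: its tree {s1, a, b} has 3 vertices; s2's tree {s2, c} has only 2
```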

The core shift is that the algorithm computes all distances without maintaining a full order of the frontier, so it avoids paying for global sorting.

Prior results even proved Dijkstra is optimal if the algorithm has to output the exact order of vertices by distance. This work targets only the distances, not that full ranking, which opens the door to bypassing sorting entirely.

🧪 What this means right now

On sparse graphs with non-negative weights, Dijkstra's algorithm is not optimal for single-source shortest paths (SSSP) in the standard real-weight model. The field now has a faster, deterministic baseline for general directed instances, plus a clearer picture of when “sorting the frontier” is extra work rather than necessary work.

🎯 Practical application of this

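In principle this helps anything that runs many shortest-path computations on large sparse graphs, like graph databases, routing engines, ranking pipelines, and network analysis, since each run can finish sooner and use fewer CPU hours. The gain is asymptotic, so real-world speedups still depend on implementation constants.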

It is deterministic and uses only comparisons and additions, so it works cleanly with real-valued weights and portable code, without hardware-specific integer tricks.

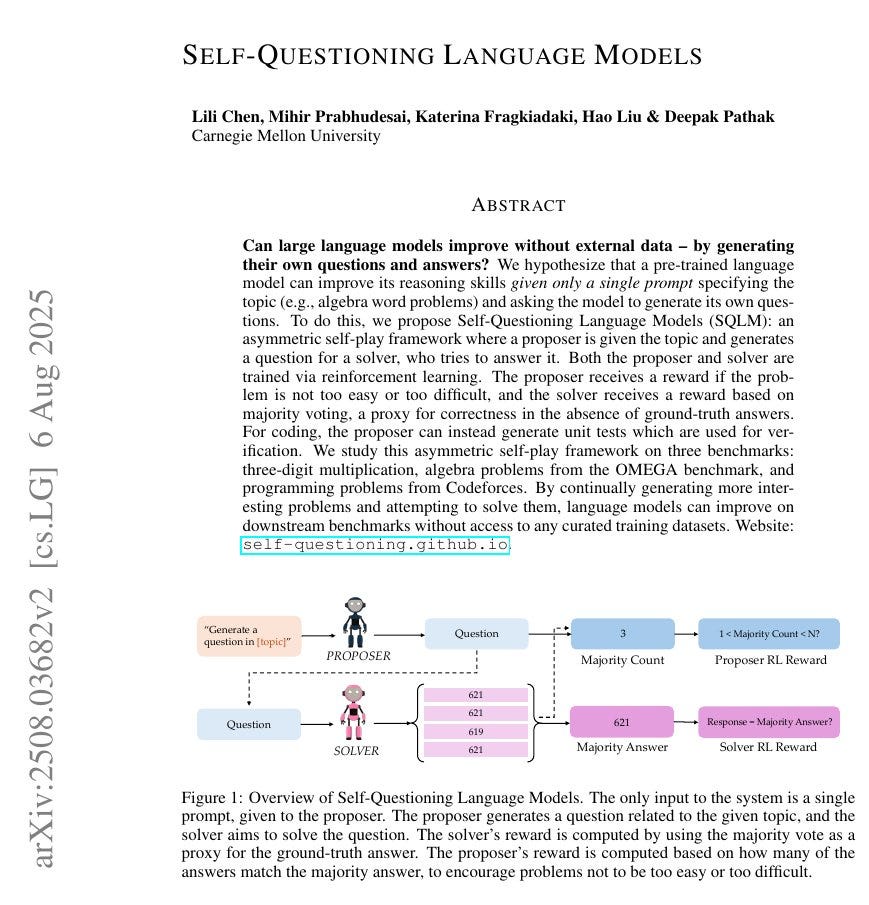

🗞️ "Self-Questioning Language Models"

An LLM teaches itself from a single topic prompt, no human-written questions, no labels.

An LLM plays both teacher and student, creates its own questions, and learns from them. It does this by splitting into a proposer that writes problems and a solver that answers them, both trained with reinforcement learning.

And with only self-generated data, a 3B model jumps +14% on arithmetic, +16% on algebra, and +7% on coding on held-out tests. The clever twist that makes it work: when checking answers is hard, it uses majority vote over multiple solver attempts, and when checking is easy, like code, the proposer emits unit tests and the solver is rewarded by the fraction of tests passed. This keeps problems “not too easy, not too hard,” so difficulty auto-tunes as the solver improves.
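The two solver reward signals described above can be sketched as plain functions. This is a minimal illustration under our own assumptions: the function names are hypothetical, unit tests are modeled as Python callables, and `proposer_reward` is our guess at the shape of a "not too easy, not too hard" signal, not the paper's exact formula.

```python
from collections import Counter
from typing import Callable, List

def majority_vote_rewards(answers: List[str]) -> List[float]:
    """Solver reward when answers can't be checked: 1.0 if an attempt matches the most common answer."""
    majority, _ = Counter(answers).most_common(1)[0]
    return [1.0 if a == majority else 0.0 for a in answers]

def unit_test_reward(solution: Callable, tests: List[Callable[[Callable], bool]]) -> float:
    """Solver reward when answers are checkable: fraction of proposer-written tests passed."""
    passed = 0
    for test in tests:
        try:
            passed += bool(test(solution))
        except Exception:
            pass  # a crashing test counts as a failure
    return passed / len(tests)

def proposer_reward(solver_rewards: List[float]) -> float:
    """Hypothetical difficulty signal: reward the proposer only when the solver
    succeeds on some attempts but not all of them."""
    mean = sum(solver_rewards) / len(solver_rewards)
    return 1.0 if 0.0 < mean < 1.0 else 0.0

votes = majority_vote_rewards(["42", "42", "41", "42"])  # [1.0, 1.0, 0.0, 1.0]
tests = [lambda f: f(2, 3) == 5, lambda f: f(-1, 1) == 0, lambda f: f(0, 0) == 1]
score = unit_test_reward(lambda a, b: a + b, tests)      # 2 of 3 tests pass
```

Note that majority-vote rewards never average to zero (at least one attempt matches the majority), so in that setting the proposer check effectively asks "did the solver disagree with itself at least once?"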

🧠 The idea

The model runs a closed loop where a proposer writes a problem from a topic prompt and a solver tries to answer it. Both roles are the same base LLM, trained with reinforcement learning, so the system can bootstrap without any human-written questions or answers.

🧠 Why this works: the key is a curriculum that comes from interaction, not from a fixed dataset. A curriculum is just the sequence of training problems the model sees, and here its difficulty keeps adjusting to the model’s current skill. The paper calls the setup self-questioning: because the same base model plays proposer and solver under reinforcement learning, the training data is shaped by performance.

The rewards are minimal but aligned with useful behavior: the proposer seeks interesting, solvable problems, and the solver seeks consistent or verifiable answers. The loop is cheap to run at small scale, which makes it practical to iterate.

🧠 The bottom line

The curriculum appears because the proposer only gets rewarded for writing solvable but non-trivial problems, and the solver only gets rewarded for consistent or verifiable answers. The two keep pulling each other toward that sweet spot, which is exactly where learning is fastest.

🗞️ "R-Zero: Self-Evolving Reasoning LLM from Zero Data"

So many brilliant AI "Self-Evolving" papers. Here's another one. R-Zero shows a model can train itself from zero external data by pairing a Challenger and a Solver that keep training at the point of roughly 50% uncertainty, with no human tasks or labels.

On Qwen3-4B it adds +6.49 on math and +7.54 on general reasoning, with MMLU-Pro 37.38 → 51.53, all from self-generated data.

🧭 The problem they tackle

Human-curated tasks and labels throttle self-evolving LLMs: cost aside, the bottleneck is scale and coverage. R-Zero removes that dependency by starting from a single base model and creating its own training stream from scratch.

🔁 The co‑evolving loop

Both roles start from the same base model: one copy becomes the Challenger, the other the Solver. The Challenger proposes questions at the edge of the Solver's current ability, the Solver answers them, and then both are improved in alternating rounds.

This creates a targeted curriculum that keeps moving with the Solver’s ability.
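The "50% uncertainty" target can be made concrete. As we read the R-Zero paper, the Solver samples several answers per question, the majority answer serves as a pseudo-label, and the Challenger's reward peaks when the Solver agrees with that majority about half the time: r = 1 - 2|p̂ - 1/2|. The sketch below assumes that form; the function names and the `delta` filter width are hypothetical, and the paper's extra repetition penalty is omitted.

```python
from collections import Counter
from typing import List, Tuple

def self_consistency(answers: List[str]) -> Tuple[str, float]:
    """Majority answer (used as a pseudo-label) and the fraction of samples agreeing with it."""
    label, count = Counter(answers).most_common(1)[0]
    return label, count / len(answers)

def uncertainty_reward(answers: List[str]) -> float:
    """Challenger reward: 1.0 at 50% agreement, falling linearly as agreement moves toward 0 or 1."""
    _, p_hat = self_consistency(answers)
    return 1.0 - 2.0 * abs(p_hat - 0.5)

def keep_for_solver(answers: List[str], delta: float = 0.25) -> bool:
    """Hypothetical filter: train the Solver only on questions whose agreement is near 50%."""
    _, p_hat = self_consistency(answers)
    return abs(p_hat - 0.5) <= delta

print(uncertainty_reward(["7", "7", "7", "9"]))  # 0.5 (p_hat = 0.75)
```

A question the Solver always gets "right" by its own vote earns the Challenger nothing, which is what pushes the Challenger toward harder questions as the Solver improves.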

🗞️ "Efficient Agents: Building Effective Agents While Reducing Cost"

The paper shows how a lean agent stack stays cheap and fast without losing quality. Efficient Agents keeps 96.7% of OWL accuracy while cutting per run cost by 28.4%.

The authors test on GAIA, a hard multi-step benchmark. Efficiency is measured as cost-of-pass: the expected dollar cost of one correct answer, equal to the cost per attempt divided by the success rate.
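The metric is a one-line formula. The sketch below uses made-up numbers (not from the paper) to show why a cheaper, slightly less accurate agent can still win on cost-of-pass.

```python
def cost_of_pass(cost_per_try: float, success_rate: float) -> float:
    """Expected dollars spent per correct answer: cost per attempt / success rate."""
    if not 0.0 < success_rate <= 1.0:
        raise ValueError("success_rate must be in (0, 1]")
    return cost_per_try / success_rate

# Hypothetical agents: A is more accurate, B is cheaper per attempt.
agent_a = cost_of_pass(0.40, 0.55)
agent_b = cost_of_pass(0.28, 0.53)
print(agent_b < agent_a)  # True: B costs less per correct answer despite lower accuracy
```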

The biggest wins come from pruning overhead. Backbone choice drives accuracy, while many extras just add tokens. Best-of-N, which tries N candidates and keeps the highest-scoring one, gives tiny gains (53.33% to 53.94% from N=1 to N=4) while tokens jump from 243K to 325K. That is a poor trade.

Planning has a sweet spot: a maximum of 8 steps works well, even though cost-of-pass already rises from 0.48 to 0.70 when moving from 4 to 8 steps. Going further mostly adds cost.

Tool use should be wide and light: more search sources help, simple browser actions beat complex click sequences, and query expansion of around 5 to 10 rewrites works well. This pulls in better evidence without bloating the context. Memory is the surprise: a simple history of observations and actions wins, with 56.36% accuracy and a 0.74 cost-of-pass.

Heavy “summarized” memory burns tokens and does not help. Their recipe is GPT-4.1, max 8 steps, plan interval 1, multi search sources, 5 query rewrites, no Best-of-N, simple memory.
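Written down as a config object, the recipe is short. The class and field names below are hypothetical (the paper does not ship this structure), and reading "plan interval 1" as "re-plan every step" is our interpretation.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AgentConfig:
    """Hypothetical config; field names are ours, values follow the Efficient Agents recipe."""
    backbone: str = "GPT-4.1"
    max_steps: int = 8               # planning sweet spot
    plan_interval: int = 1           # our reading: re-plan every step
    multi_search_sources: bool = True
    query_rewrites: int = 5
    best_of_n: int = 1               # 1 means no Best-of-N sampling
    memory: str = "simple"           # raw history of observations and actions, no summaries

EFFICIENT_AGENTS_RECIPE = AgentConfig()
```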

Each choice cuts waste while keeping capability. This setup keeps results close to OWL while dropping cost per run from 0.398 to 0.285 and tokens from 189K to 127K.

Bottom line: optimize for cost-of-pass. Small switches in planning, tools, and memory save real money with little accuracy loss.

🗞️ Perch 2.0: The Bittern Lesson for Bioacoustics

🐠 With this paper, Google DeepMind releases open-source AI for interpreting animal sounds. It should make large-scale wildlife monitoring cheap, fast, and accurate from plain audio, so biodiversity trends can be tracked in near real time and conservation actions can be taken sooner.

The Perch 2.0 model shows that a compact, supervised bioacoustics model, trained on many labeled species plus two simple auxiliary tasks, reaches state-of-the-art transfer across birds, land mammals, and even whales.

Most systems lean on self-supervised pretraining or narrow, task-specific models, which often struggle when compute is tight and labeled data is limited.

🐦 What changed

Perch 2.0 expands its training set from birds alone to many different animal groups and scales up supervision hard. The team trains on 1.54M recordings covering 14,795 classes, of which 14,597 are species.

The backbone is EfficientNet‑B3 with 12M parameters, so it stays small enough for everyday hardware, yet it learns general audio features that transfer well.

Input audio is cut into 5s chunks at 32kHz, converted to a log‑mel spectrogram with 128 mel bins spanning 60Hz to 16kHz. The encoder emits a spatial embedding that is pooled to a 1536‑D vector used by simple heads for classification and transfer.
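To make the input geometry concrete: 5 s at 32 kHz is 160,000 samples per chunk, and 128 triangular mel filters need 130 edge frequencies evenly spaced on the mel scale between 60 Hz and 16 kHz. This pure-Python sketch assumes the HTK-style mel formula and non-overlapping chunking; Perch's actual frontend (window, hop, mel variant) may differ.

```python
import math
from typing import List, Sequence

SAMPLE_RATE = 32_000          # Hz
CHUNK_SECONDS = 5
N_MELS = 128
F_MIN, F_MAX = 60.0, 16_000.0

def chunk_audio(samples: Sequence[float], sr: int = SAMPLE_RATE,
                seconds: int = CHUNK_SECONDS) -> List[Sequence[float]]:
    """Split a waveform into consecutive non-overlapping chunks, dropping a short tail."""
    size = sr * seconds  # 160,000 samples per 5 s chunk at 32 kHz
    return [samples[i:i + size] for i in range(0, len(samples) - size + 1, size)]

def hz_to_mel(f: float) -> float:
    return 2595.0 * math.log10(1.0 + f / 700.0)  # HTK-style mel formula (assumed)

def mel_to_hz(m: float) -> float:
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_band_edges(n_mels: int = N_MELS, fmin: float = F_MIN, fmax: float = F_MAX) -> List[float]:
    """n_mels triangular filters need n_mels + 2 edge frequencies evenly spaced in mel."""
    lo, hi = hz_to_mel(fmin), hz_to_mel(fmax)
    step = (hi - lo) / (n_mels + 1)
    return [mel_to_hz(lo + i * step) for i in range(n_mels + 2)]

edges = mel_band_edges()
print(len(edges))  # 130
```

Even spacing in mel means the filters are narrow at low frequencies and wide near 16 kHz, which suits the way many animal calls pack detail into lower bands.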

🗞️ "ReaGAN: Node-as-Agent-Reasoning Graph Agentic Network"

The paper proposes Retrieval-augmented Graph Agentic Network (ReaGAN), a graph learning framework where each graph node is reimagined as its own agent, equipped to plan, reason, and act with the help of a frozen LLM.

ReaGAN lets every graph node act as its own agent, planning with a frozen LLM, and still reach GNN-level accuracy without any training. It tackles a long-standing pain in graph learning: traditional graph neural networks make every node follow one hard-wired message-passing recipe, so well-connected nodes get flooded with junk while lonely nodes stay clueless.

The paper’s punch line is simple: every node runs its own small plan with a frozen large language model, deciding whether to gather clues from its neighbors, fetch textually similar but far-away nodes, or just stay quiet, then stores whatever it learns in a small memory.

From that memory it crafts a prompt, asks the planner for an action, and picks local aggregation, global retrieval, prediction, or no‑op. Local aggregation merges immediate neighbors and collects their labels, while global retrieval hunts 5 distant yet similar nodes through a text search over the whole graph.
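A toy sketch of that per-node loop, with the four actions above. The rule-based `plan` method is a hypothetical stand-in for prompting the frozen LLM, Jaccard word overlap stands in for the paper's text search, and the class and method names are ours.

```python
from collections import Counter
from typing import Dict, List, Optional, Set

def jaccard(a: Set[str], b: Set[str]) -> float:
    """Word-overlap similarity, a stand-in for ReaGAN's text search."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

class NodeAgent:
    """One graph node acting as an agent with its own memory (toy sketch)."""

    def __init__(self, node_id: str, text: str, neighbors: List[str]):
        self.node_id = node_id
        self.words = set(text.lower().split())
        self.neighbors = neighbors
        self.memory: List[str] = []       # labels gathered so far
        self.done: Set[str] = set()       # actions already taken
        self.prediction: Optional[str] = None

    def plan(self) -> str:
        """Hypothetical rule-based stand-in for prompting a frozen LLM with the memory."""
        if self.prediction is not None:
            return "noop"
        if self.neighbors and "local_aggregation" not in self.done:
            return "local_aggregation"
        if len(self.memory) < 2 and "global_retrieval" not in self.done:
            return "global_retrieval"
        return "predict" if self.memory else "noop"

    def step(self, graph: Dict[str, "NodeAgent"], labels: Dict[str, str], k: int = 5) -> str:
        action = self.plan()
        if action == "local_aggregation":
            # Structural view: collect labels of immediate neighbors.
            self.memory += [labels[n] for n in self.neighbors if n in labels]
        elif action == "global_retrieval":
            # Semantic view: top-k most similar labeled nodes that are not neighbors.
            candidates = [n for n in labels if n != self.node_id and n not in self.neighbors]
            candidates.sort(key=lambda n: jaccard(self.words, graph[n].words), reverse=True)
            self.memory += [labels[n] for n in candidates[:k]]
        elif action == "predict":
            self.prediction = Counter(self.memory).most_common(1)[0][0]
        self.done.add(action)
        return action

texts = {"t": "graph neural network", "n1": "message passing", "f1": "graph neural network survey",
         "f2": "protein folding", "f3": "neural network pruning"}
edges = {"t": ["n1"], "n1": ["t"], "f1": [], "f2": [], "f3": []}
labels = {"n1": "ML", "f1": "ML", "f2": "Bio", "f3": "ML"}
graph = {n: NodeAgent(n, texts[n], edges[n]) for n in texts}
actions = [graph["t"].step(graph, labels, k=2) for _ in range(4)]
print(actions)  # ['local_aggregation', 'global_retrieval', 'predict', 'noop']
```

No weights are updated anywhere: the node's behavior changes only through what it puts in its memory.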

Three such reasoning rounds already let a frozen 14B-parameter model hit 84.95% accuracy on Cora and 60.25% on Citeseer, matching or beating several fully trained GNNs, yet there is zero gradient training involved.

Removing the planner or retrieval drops accuracy by up to 25 points, confirming that autonomy plus global context drive the gains. What is brand-new here is the mix of node-level autonomy plus retrieval-augmented context.

Local aggregation keeps the usual structural view, global retrieval pulls in semantic twins that live nowhere near the node in the graph, and a NoOp option stops useless chatter, so each node self-tunes how much information it really needs.

The benefits are clear, competitive accuracy without any training, smoother handling of sparse graphs, less noise for dense ones, and a lighter compute footprint because the model never updates its weights, it only prompts.

So, ReaGAN suggests that prompt‑based planning and retrieval can stand in for heavy gradient loops in graph learning.

🗞️ "Medical Reasoning in the Era of LLMs: A Systematic Review of Enhancement Techniques and Applications"

LLMs spit out medical facts, but clinics need step-by-step reasoning that doctors can check. This review follows 60 studies from 2022 to 2025 that push models toward that goal and shows what is still missing.

Early general models treat diagnosis like pattern matching. Researchers instead train or steer models to talk through their logic, because a wrong but confident answer could hurt a patient.

During training, teams use supervised fine-tuning on doctor-written or machine-generated reasoning chains, stage lessons from simple Q&A to full case workups, and use reinforcement learning so the model earns reward when each step is safe, precise, and grounded in the chart.

At test time, cheaper tricks nudge existing models. Clever prompts coax out a chain of thought, self-consistency picks the most common answer from multiple tries, retrieval plugs in fresh guidelines, and multi-agent setups let specialist bots debate and then merge a plan.

Reasoning looks different across data types. Text needs logical flow, images need spatial grounding that links a shadow to a finding, and code agents now run full pipelines inside a sandbox.

The same ideas now drive decision support, tutoring, imaging reports, drug design, and treatment planning. With multiple choice scores tapped out, fresh benchmarks grade reasoning detail, visual grounding, and safety.

The field still wrestles with hallucinations, weak multimodal fusion, and compute cost, yet the direction is clear: transparent, verifiable medical AI.

🗞️ "A comprehensive taxonomy of hallucinations in LLMs"

This paper pins down why hallucination happens, classifies the errors, and surveys tools that keep them manageable. It maps the known failure modes and presents a formal argument that some hallucination will always remain.

It posits that hallucination is an inevitable characteristic of LLMs, irrespective of their architectural design, learning algorithms, prompting techniques, or the specific training data employed, provided they are considered “computable LLMs” operating within the defined formal world.

The author starts by splitting problems into 2 axes.

Intrinsic slips clash with the user prompt, while extrinsic slips invent outside facts.

Another split tracks truth (factuality) versus loyalty to the source (faithfulness).

From there the list explodes.

Wrong dates, tangled logic, time jumps, fake court rulings, risky medical tips, buggy code, and mismatched image captions all appear. Roots include noisy data, the next‑token guessing core of the model, shaky reasoning skills, and careless prompts.

A math section shows any computable model, no matter the size or training, will still miss some questions forever. So the mission shifts from erasing errors to catching and containing them.
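Results of this kind rest on a diagonalization argument. Here is its simplest form as a sketch: the paper's formal world and definitions are more detailed, and the stronger statement (errors on infinitely many inputs) needs more care than shown here.

```latex
\begin{aligned}
&h_0, h_1, h_2, \dots && \text{an enumeration of all computable LLMs (countably many)} \\
&s_0, s_1, s_2, \dots && \text{an enumeration of all input strings} \\
&f(s_i) \neq h_i(s_i) \ \text{ for every } i && \text{ground truth chosen along the diagonal} \\
&\Rightarrow\ \forall j:\ h_j(s_j) \neq f(s_j) && \text{every computable LLM is wrong on at least the input } s_j
\end{aligned}
```

The argument never looks inside any model, which is why no architecture, training recipe, or prompt escapes it.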

The survey checks every major benchmark, from TruthfulQA to niche medical sets, and notes that scores vary because there is no single shared definition of hallucination. Fixes cover tool-calling models, retrieval-augmented generation, filtered fine-tuning, rule-based guardrails, and clear confidence cues.

Each tactic helps, but they work best when stacked together with humans supervising. In short, hallucination is baked into today’s model recipe, yet layered engineering, transparent interfaces, and steady oversight keep it from harming real users.

🗞️ "Agentic Web: Weaving the Next Web with AI Agents"

The paper argues the next big thing is the Agentic Web where autonomous AI agents, not humans, run tasks across the internet. The authors show how the Web has already shifted from early pages to mobile feeds and now toward agent‑driven action.

They define 3 dimensions, intelligence, interaction, and economy, that together let an agent search, plan, transact, and even pay other agents. Under a new client‑agent‑server architecture the user device only collects intent, an intelligent agent decomposes that intent into sub‑tasks, and distributed backend services deliver flights, payments, maps, or anything else without extra clicks.

A demand-skill vector mapper turns a human goal into a machine-readable vector, i.e. a set of numbers that reflect the skills the task needs.

A task router compares those numbers to the skill profiles of many agents and sends the job to the best fit in real time. A shared ledger then records how much compute each agent used so everyone can settle up.
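The mapper, router, and ledger fit in a few lines. Everything concrete here is hypothetical: the skill axes, keyword lists, agent profiles, and compute units are made up, and cosine similarity is our choice for "best fit", not something the paper prescribes.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical skill axes and keyword lists, purely for illustration.
SKILLS = ["weather", "travel_guide", "hotel", "maps", "payments"]
KEYWORDS = {
    "weather": ["weather", "forecast"],
    "travel_guide": ["trip", "itinerary"],
    "hotel": ["hotel"],
    "maps": ["map", "route"],
    "payments": ["pay", "checkout"],
}

def demand_vector(goal: str) -> List[float]:
    """Toy demand-skill mapper: 1.0 on every skill axis whose keywords appear in the goal."""
    text = goal.lower()
    return [1.0 if any(w in text for w in KEYWORDS[s]) else 0.0 for s in SKILLS]

def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def route(demand: List[float], agent_profiles: Dict[str, List[float]]) -> str:
    """Send the job to the agent whose skill profile best matches the demand."""
    return max(agent_profiles, key=lambda name: cosine(demand, agent_profiles[name]))

@dataclass
class Ledger:
    """Shared record of compute used per agent, so agents can settle up later."""
    usage: Dict[str, float] = field(default_factory=dict)

    def record(self, agent: str, compute_units: float) -> None:
        self.usage[agent] = self.usage.get(agent, 0.0) + compute_units

profiles = {"weather_bot": [1, 0, 0, 0, 0], "trip_planner": [0.2, 1, 1, 0.5, 0],
            "map_service": [0, 0, 0, 1, 0]}
demand = demand_vector("Plan a 3-day Beijing trip and book a hotel")
agent = route(demand, profiles)
ledger = Ledger()
ledger.record(agent, 3.5)
print(agent)  # trip_planner
```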

A worked example shows one request “plan a 3‑day Beijing trip” triggers weather, guide, hotel, and map services that self‑coordinate then return a ready itinerary.

Because agents bargain for bandwidth, security, and latency, network infrastructure must move from best‑effort pipes to fine‑grained service resource zones matched to each task. The result is an execution‑oriented Web where users set goals once and the agentic stack quietly does the heavy lifting in the background.

🗞️ "Is Chain-of-Thought Reasoning of LLMs a Mirage? A Data Distribution Lens"

The paper argues that chain of thought in LLMs is pattern replay bound to training data, not general reasoning.

Chain of thought is useful when prompts match training patterns, but it is not evidence of general reasoning. When test data shifts even slightly, the step by step text stays fluent but logic cracks.

The authors build DataAlchemy, a controlled sandbox, and train small GPT-2-style models on alphabet puzzles over 4-letter strings using 2 operations: rotating letters and shifting positions.

This lets them probe 3 axes, task, length, and format.
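A sketch of the two kinds of atomic transforms and how a step-by-step trace is built from them. The ROT-13 default, the right-shift direction, and all names are our choices; the paper's exact parameters and tokenization may differ. In a setup like this, testing on a composition the model never saw during training is what "task shift" means.

```python
import string
from typing import Callable, List

ALPHABET = string.ascii_uppercase

def rot(s: str, n: int = 13) -> str:
    """Element-level transform: rotate every letter n places through the alphabet."""
    return "".join(ALPHABET[(ALPHABET.index(c) + n) % 26] for c in s)

def cyclic_shift(s: str, n: int = 1) -> str:
    """Position-level transform: move every character n places to the right, wrapping around."""
    if not s:
        return s
    n %= len(s)
    return s[-n:] + s[:-n] if n else s

def compose(*fs: Callable[[str], str]) -> Callable[[str], str]:
    """Apply transforms left to right, e.g. compose(rot, cyclic_shift)."""
    def run(s: str) -> str:
        for f in fs:
            s = f(s)
        return s
    return run

def reasoning_trace(s: str, steps: List[Callable[[str], str]]) -> List[str]:
    """Intermediate strings a model would write out step by step for a composed task."""
    trace = [s]
    for f in steps:
        trace.append(f(trace[-1]))
    return trace

print(reasoning_trace("ABCD", [rot, cyclic_shift]))  # ['ABCD', 'NOPQ', 'QNOP']
```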

When the test uses the same transformation pattern as training, the model’s full chain output matches the label 100%. The moment the test swaps in new compositions of those operations or a truly unseen transform, that exact match collapses to 0.01% or 0%, even though the model still writes confident step by step text. That text looks reasonable, but the final answer is wrong.

Element shift behaves similarly: novel letter combinations or unseen letters break the chain completely. Length shift hurts too: models trained on length 4 fail on lengths 3 or 5 and even pad or trim steps to mimic the seen length. A group padding trick helps a bit.

Format noise degrades outputs, insertions hurt more than deletions, and edits to element or transform tokens matter far more than changes to filler prompt words.

A tiny burst of supervised fine tuning, about 0.00015 of the data, quickly patches accuracy, which signals distribution coverage, not new reasoning skills.

Temperature and size, 68K to 543M parameters, barely change the pattern. Bottom line, when the data moves, accuracy can collapse while the story still sounds fine. Test on real shifts, not just matching cases, and keep training coverage honest.

That’s a wrap for today, see you all tomorrow.