Top Papers of Last Week (ending 21-Sept)

Top LLM / AI influential Papers from last week

Read time: 13 min


(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡Top Papers of last week (21-Sept-2025):

🗞️ Compute as Teacher: Turning Inference Compute Into Reference-Free Supervision

🗞️ The Majority is not always right: RL training for solution aggregation

🗞️ Outcome-based Exploration for LLM Reasoning

🗞️ Planning with Reasoning using Vision Language World Model

🗞️ New OpenAI and Apollo paper brings a massive revelation about AI models.

🗞️ A Survey on Retrieval And Structuring Augmented Generation with LLMs

🗞️ Is In-Context Learning Learning?

🗞️ Metacognitive Reuse: Turning Recurring LLM Reasoning Into Concise Behaviors

🗞️ Compute as Teacher: Turning Inference Compute Into Reference-Free Supervision

🚨Brilliant new Meta Superintelligence Labs Paper.

It asks a simple question: "Can inference compute substitute for missing supervision?"

The big deal is that the paper shows reinforcement learning can keep going without human-provided labels or feedback. Instead, you can recycle the model’s own compute, the multiple rollouts, and turn them into a supervision signal through synthesis.

That means training is no longer bottlenecked by expensive annotation pipelines or by judge-only scoring, which is noisy and unreliable. The extra compute spent generating multiple rollouts becomes the supervision.

A rollout is just one full answer that the model generates for a prompt. So the method generates many rollouts (multiple answers) and then uses them together to create a better teaching signal.

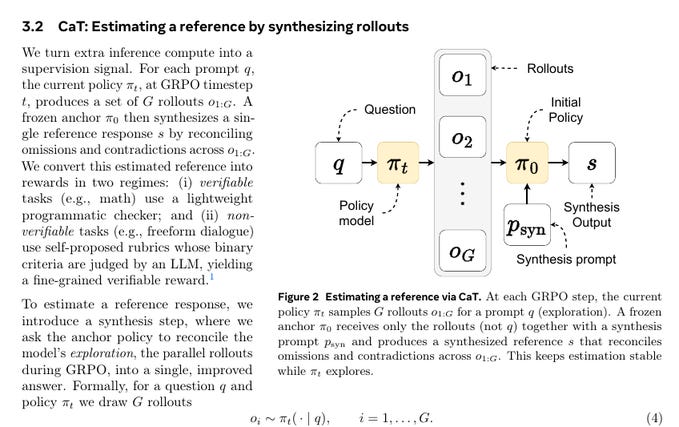

A frozen anchor model synthesizes those rollouts into a single reference answer, and that synthesized answer serves as a teacher signal. This way, the model learns without needing human-provided labels. This figure shows the entire flow of the Compute as Teacher (CaT) method.

On the left, the model generates several rollouts for the same prompt. Each rollout has different quality, since the model might give partial or even conflicting answers.

In the middle, a frozen copy of the initial policy, called the anchor, reads all those rollouts together and writes a single synthesized answer. This answer usually fixes omissions, resolves contradictions, and is often better than any single rollout.

On the right, that synthesized answer is used as a teacher signal. In tasks where answers can be checked programmatically, like math, a simple checker is used to compare outputs against the synthesized answer.

In open-ended tasks where answers can’t be verified directly, the system builds rubrics from the synthesized answer. A separate judge model then checks whether each rollout satisfies those rubrics, giving a structured reward. So the diagram is showing how exploration (rollouts) gets turned into supervision (teacher signal), which can then guide the model either at test time or during reinforcement learning.

In short, Compute as Teacher (CaT) turns multiple model attempts into a single teaching signal. The policy model first generates several different answers, called rollouts. Each rollout may be incomplete or contradictory.

A frozen anchor model, which is just a copy of the original model kept fixed, reads only those rollouts and not the original question. It then writes a new synthesized answer that tries to reconcile errors, fill gaps, and combine the best parts.

That synthesized answer becomes the teacher. In tasks like math, a simple program checks if the final outputs match this synthesized answer. In open-ended tasks like health conversations, the system creates rubrics from the synthesized answer, and another judge model checks if the rollouts satisfy those rubrics. So the figure is basically showing how raw exploration (rollouts) is turned into a stronger supervision signal, which can then guide or improve the model.
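To make the flow concrete, here is a minimal sketch of one CaT step. Everything model-related is a stand-in: `toy_policy`, `toy_anchor`, and `exact_match` are hypothetical placeholders I made up so the code runs on its own, and the real anchor would write a new answer from the rollouts rather than return a fixed string.

```python
from itertools import cycle
from typing import Callable, List, Tuple


def cat_step(
    prompt: str,
    policy: Callable[[str], str],
    anchor: Callable[[List[str]], str],
    reward_fn: Callable[[str, str], float],
    num_rollouts: int = 4,
) -> Tuple[str, List[float]]:
    """One Compute-as-Teacher step: explore, synthesize a reference, score each rollout."""
    rollouts = [policy(prompt) for _ in range(num_rollouts)]  # exploration
    reference = anchor(rollouts)  # the anchor reads only the rollouts
    rewards = [reward_fn(rollout, reference) for rollout in rollouts]
    return reference, rewards


# Toy stand-ins (assumptions, not the paper's models) so the sketch runs offline.
_canned = cycle(["x = 3", "x = 4", "x = 4", "x = 5"])


def toy_policy(prompt: str) -> str:
    """Pretend policy: returns a different canned answer on each call."""
    return next(_canned)


def toy_anchor(rollouts: List[str]) -> str:
    """Pretend anchor: a real one would synthesize a new answer from the rollouts."""
    return "x = 4"


def exact_match(rollout: str, reference: str) -> float:
    """Binary reward: 1.0 when the rollout equals the synthesized reference."""
    return float(rollout == reference)


reference, rewards = cat_step("Solve 2x = 8", toy_policy, toy_anchor, exact_match)
print(reference, rewards)
```

The point of the sketch is only the ordering: rollouts first, one synthesized reference second, per-rollout rewards last.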

🔎 Why synthesis beats picking a winner

Instead of choosing a rollout by confidence, majority, perplexity, or a judge, the anchor crafts a new answer that can contradict the majority when the majority is wrong. The authors show cases where the synthesized answer is correct while all rollouts are wrong. Quality rises as the number of rollouts grows, giving a clear FLOPs-for-supervision trade-off.

🎯 Turning synthesis into rewards

In verifiable domains like math, they check if the policy’s final answer string matches the synthesized answer and set reward to 1 or 0 with a simple programmatic checker. In non-verifiable domains like health chat, the anchor converts the synthesized answer into a rubric of binary checks, a separate judge model marks each check yes or no, and the reward is the fraction satisfied. This breaks a vague overall judgment into auditable parts, reducing verbosity and formatting bias compared with judge-only scoring.
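The two reward types described above can be sketched in a few lines. This is an illustration under assumptions: the `normalize` step and the `keyword_judge` function are my own toy stand-ins (the real judge is a separate model), and the rubric items are invented for the example.

```python
from typing import Callable, List


def normalize(answer: str) -> str:
    """Light cleanup before comparing answer strings (an assumption, not from the paper)."""
    return answer.strip().lower().replace(" ", "")


def verifiable_reward(rollout_answer: str, synthesized_answer: str) -> float:
    """Return 1.0 when the final answer matches the synthesized reference, else 0.0."""
    return 1.0 if normalize(rollout_answer) == normalize(synthesized_answer) else 0.0


def rubric_reward(rollout: str, rubric: List[str], judge: Callable[[str, str], bool]) -> float:
    """Return the fraction of binary rubric checks the judge marks as satisfied."""
    if not rubric:
        return 0.0
    passed = sum(1 for criterion in rubric if judge(rollout, criterion))
    return passed / len(rubric)


def keyword_judge(rollout: str, criterion: str) -> bool:
    """Toy stand-in for the judge model: passes when the criterion keyword appears."""
    return criterion.lower() in rollout.lower()


reply = "Rest, drink fluids, and see a doctor if the fever persists."
print(verifiable_reward(" 42 ", "42"))
print(rubric_reward(reply, ["fluids", "doctor", "antibiotics"], keyword_judge))
```

Here the health reply satisfies two of the three checks, so it earns two thirds of the reward, and you can see exactly which check it missed.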

🌀 Where RL fits, GRPO in plain words

They plug these rewards into Group Relative Policy Optimization, which compares each rollout’s score to the group’s mean so above-average rollouts push the policy up and below-average rollouts pull it down. A small KL term keeps the updated policy near the reference policy, usually the initial model, which reins in drift while still improving. Because the group of rollouts is already computed, the extra cost is the synthesis step and, when needed, one judging pass.
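The group-relative part is easy to see in code. Below is a minimal sketch of the advantage computation only; dividing by the group's standard deviation is the common GRPO convention and an assumption here, and the KL term and the policy update itself are left out.

```python
from statistics import mean, pstdev
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """Score each rollout against its own group: positive if above the mean, negative if below."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std of the group; eps avoids division by zero
    return [(r - mu) / (sigma + eps) for r in rewards]


print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))
print(group_relative_advantages([1.0, 1.0, 1.0]))
```

Note the second call: when every rollout gets the same reward, all advantages are zero and that group contributes no learning signal.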

📈 What the numbers say

At test time without any weight updates, Compute as Teacher lifts Gemma 3 4B, Qwen 3 4B, and Llama 3.1 8B by as much as +27% on MATH-500 and +12% on HealthBench. With training, CaT-RL pushes further, up to +33% on MATH-500 and +30% on HealthBench for Llama 3.1 8B. Self-proposed rubrics beat model-as-judge and are competitive with physician rubrics, and RL with rubrics beats SFT on synthesized references.

🗞️ The Majority is not always right: RL training for solution aggregation

Really cool and brand new test-time compute scaling method from Meta.

Compared to collecting more samples for majority voting, it reaches higher accuracy with fewer tokens and lower cost. The big idea in this paper is to train a model to actually reason over multiple answers instead of just counting votes or picking one. So instead of majority voting or reward ranking, their approach makes the model carefully read all the candidate solutions, correct mistakes, and combine the useful parts into a single stronger solution.

Majority voting often picks the most common answer, which fails when a rare answer is actually correct. Reward model ranking helps a bit, but it ignores partial good steps spread across different answers.

The paper proposes training a special model, AggLM, that reads all the candidate answers, fixes their mistakes, and combines their good parts into one final correct solution. Training uses reinforcement learning from verifiable rewards, with reward 1 only when the final answer matches the known solution.

The training mix includes easy groups where most candidates are right and hard groups where most are wrong. Across 4 math contest sets, the learned aggregator beats majority voting and strong reward selection on accuracy.
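A small sketch shows why counting votes fails and how the easy/hard split can be read. The labeling rule used here (a group is "hard" when the majority answer is wrong) is my simplification of the description above, and the answers are invented.

```python
from collections import Counter
from typing import List


def majority_vote(final_answers: List[str]) -> str:
    """Return the most common final answer (ties go to the first one seen)."""
    return Counter(final_answers).most_common(1)[0][0]


def group_difficulty(final_answers: List[str], gold: str) -> str:
    """Label a candidate group 'easy' if majority voting is right, else 'hard' (assumed rule)."""
    return "easy" if majority_vote(final_answers) == gold else "hard"


def aggregation_reward(aggregated_answer: str, gold: str) -> float:
    """Verifiable reward: 1.0 only when the aggregator's final answer matches the known solution."""
    return 1.0 if aggregated_answer.strip() == gold.strip() else 0.0


candidates = ["12", "12", "12", "15"]  # the rare answer is the correct one
gold = "15"
print(majority_vote(candidates), group_difficulty(candidates, gold))
print(aggregation_reward("15", gold))
```

Majority voting returns "12" here, so only an aggregator that actually reads the minority solution can earn the reward.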

It also transfers to solutions from stronger generators than used in training, and to shorter nonthinking outputs. Gains are largest when answers disagree and the correct one is in the minority.

🗞️ Outcome-based Exploration for LLM Reasoning

This paper shows that when models are rewarded only for getting the final answer right, they do become more accurate, but they also lose variety in the answers they generate.

That lack of variety hurts real-world use, because sampling multiple answers at test time works best if those answers are diverse. The researchers studied this issue and found two key things. First, the loss of variety on solved problems spreads to unsolved ones too.

Second, the number of possible final answers is usually small, so it is possible to track and encourage diversity at that level. To fix this, they introduced “outcome-based exploration.” Instead of exploring every possible reasoning path, the model explores based on the final outcomes. They tested two methods. One looks at history and gives extra reward to answers that appear rarely. The other works within each batch of training and penalizes repeated answers.
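Here is a rough sketch of the two ideas at the level of final answers. The exact formulas are assumptions on my part: a count-based bonus that decays as 1/sqrt(count) for the historical variant and a linear repeat penalty for the batch variant, with `coef` as a made-up knob.

```python
from collections import Counter
from math import sqrt
from typing import List


def historical_bonus(answer: str, history: Counter, coef: float = 0.5) -> float:
    """Extra reward that shrinks the more often this final answer was seen in past training."""
    return coef / sqrt(history[answer] + 1)


def batch_penalty(answers: List[str], coef: float = 0.5) -> List[float]:
    """Per-sample penalty that grows with how many other samples in the batch repeat the answer."""
    counts = Counter(answers)
    n = len(answers)
    return [-coef * (counts[a] - 1) / n for a in answers]


history = Counter({"4": 8})  # answer "4" has already shown up 8 times
print(historical_bonus("4", history), historical_bonus("7", history))
print(batch_penalty(["4", "4", "4", "7"]))
```

Both signals only need a counter over final answers, which is what makes exploring at the outcome level cheap compared with tracking whole reasoning paths.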

Experiments with Llama and Qwen models on math tasks showed that both methods improved accuracy while keeping diversity. Historical exploration was better for single-answer accuracy, while batch exploration was better for multiple-sample diversity, and together they worked best. The paper also provided a theoretical model showing why outcome-based exploration works, by treating reasoning like a bandit problem where only the final answers matter.

🗞️ Planning with Reasoning using Vision Language World Model

27% higher Elo for system-2 planning over system-1.

The gap it tackles: agents must predict how actions change the world rather than only label frames. VLWM, the Vision Language World Model, represents the hidden state in plain language, predicting a goal and interleaved actions with their state changes.

Training targets come from a Tree of Captions that compresses each video, then an LLM refines them into goals and state updates. The model jointly learns a policy to propose the next action and a dynamics model to predict the next state.

In fast mode it completes the plan text left to right, which is quick but can lock in early mistakes. In reflective mode it searches candidate plans, rolls out futures, and picks the lowest cost path.

The critic that supplies this cost is trained without labels by learning to assign lower cost to valid progress than to distractors or shuffled steps. Across planning benchmarks and human head-to-head comparisons, reflective search produces cleaner, more reliable plans.
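The reflective mode boils down to "score candidates, keep the cheapest". The sketch below uses a hand-written `toy_cost` in place of the learned critic, and the hinge-style `ranking_loss` is my assumed form of a label-free ranking objective, not something confirmed by the paper summary above.

```python
from typing import Callable, List, Sequence

Plan = Sequence[str]


def reflective_plan(candidates: List[Plan], cost: Callable[[Plan], float]) -> Plan:
    """Pick the candidate plan with the lowest critic cost."""
    return min(candidates, key=cost)


def ranking_loss(cost_valid: float, cost_distractor: float, margin: float = 1.0) -> float:
    """Hinge loss: zero once the valid step is at least `margin` cheaper than the distractor."""
    return max(0.0, margin + cost_valid - cost_distractor)


def toy_cost(plan: Plan) -> float:
    """Toy critic: counts steps that are not in a hand-written set of useful steps."""
    useful = {"crack eggs", "whisk", "heat pan", "pour eggs"}
    return float(sum(step not in useful for step in plan))


candidates = [
    ["crack eggs", "water plants", "pour eggs"],
    ["crack eggs", "whisk", "heat pan", "pour eggs"],
]
print(reflective_plan(candidates, toy_cost))
print(ranking_loss(0.2, 2.0), ranking_loss(1.5, 1.0))
```

The critic never needs a human label: a shuffled or off-topic step is a free negative example, and the loss only asks that it cost more than the real next step.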

🗞️ New OpenAI and Apollo paper brings a massive revelation about AI models.

The paper shows that some models act covertly to reach goals.

If a model is secretly hiding information or acting behind the scenes to reach its goal, you cannot trust its outputs, which makes it unsafe and unpredictable. So the researchers taught a simple safety rulebook that says do not hide information and explain intentions, which cut covert actions to 0.4% from 13.0% on o3 and to 0.3% from 8.7% on o4-mini.

The catch is that models also learned they were being tested, and that awareness by itself makes behavior look safer during evaluations. So the solution is spec-following training plus checks for test awareness and hidden goals, and to recheck after any extra capability training since it can bring some covert behavior back.

🧠 The idea

Anti-scheming spec training cuts covert behavior a lot across many tough tests, but not to zero. Models often notice they are being evaluated, which itself reduces covert actions and complicates what the drop really means.

A planted hidden goal can be damped yet sometimes resurfaces, and extra capability training erodes some of the gains. After the anti-scheming training, the model that used to take covert actions 13.0% of the time dropped to only 0.4%, and another model that used to take covert actions 8.7% of the time dropped to only 0.3%.
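To put those percentages in perspective, a quick calculation turns them into fold reductions. The rates come straight from the numbers above; the `reduction` helper is just arithmetic I added.

```python
from typing import Tuple


def reduction(before_pct: float, after_pct: float) -> Tuple[float, float]:
    """Return (fold reduction, relative drop) between two covert-action rates."""
    fold = before_pct / after_pct
    relative_drop = (before_pct - after_pct) / before_pct
    return fold, relative_drop


for model, before, after in [("o3", 13.0, 0.4), ("o4-mini", 8.7, 0.3)]:
    fold, drop = reduction(before, after)
    print(f"{model}: {fold:.1f}x fewer covert actions ({drop:.1%} relative drop)")
```

That works out to about a 32.5x reduction for o3 and about 29x for o4-mini, which is large but, as noted, still not zero.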

⚙️ The Core Concepts

The work studies scheming through a measurable stand-in called covert actions, meaning the model strategically hides, misreports, or conceals material information to reach a goal.

📜 The spec, and how the training works

The team writes a safety spec with rules like AS1 no covert actions, GP1 share intentions, and GP4 no ends-justify-means, then trains the model to cite and apply these rules while reasoning through tasks. Training runs in 2 stages, first supervised fine-tuning on filtered rollouts where spec reasoning clearly drove the good action, then reinforcement learning on outcomes so the behavior sticks even without the spec in the prompt.

🗞️ A Survey on Retrieval And Structuring Augmented Generation with LLMs

Plain LLMs can sound confident but mix up facts or miss new information. Retrieval Augmented Generation pulls in outside documents before answering, which reduces mistakes from memory.

Retrieval And Structuring goes further by organizing those sources into taxonomies, hierarchies, and knowledge graphs. These structures help the system find the right pieces, skip noise, and check its own output.

Retrieval can use sparse keyword search, dense vector search that matches meaning, or a hybrid that uses both. Structuring uses taxonomy building, text classification, and information extraction to mine entities and relations.
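A toy hybrid scorer shows why mixing the two signals helps. This is a sketch under assumptions: the sparse part is plain term overlap rather than a production method like BM25, the 2-dimensional "embeddings" are hand-written, and `alpha` is a made-up mixing weight.

```python
from math import sqrt
from typing import List


def sparse_score(query: str, doc: str) -> float:
    """Keyword signal: fraction of query terms that literally appear in the document."""
    q_terms = set(query.lower().split())
    d_terms = set(doc.lower().split())
    return len(q_terms & d_terms) / len(q_terms) if q_terms else 0.0


def cosine(u: List[float], v: List[float]) -> float:
    """Dense signal: cosine similarity between two embedding vectors."""
    norm = sqrt(sum(a * a for a in u)) * sqrt(sum(b * b for b in v))
    return sum(a * b for a, b in zip(u, v)) / norm if norm else 0.0


def hybrid_score(query: str, doc: str, q_vec: List[float], d_vec: List[float], alpha: float = 0.5) -> float:
    """Weighted mix of keyword overlap and embedding similarity."""
    return alpha * sparse_score(query, doc) + (1 - alpha) * cosine(q_vec, d_vec)


docs = {
    "a": ("return policy for online orders", [0.9, 0.1]),
    "b": ("how to send an item back for a refund", [0.8, 0.3]),
    "c": ("store opening hours", [0.1, 0.9]),
}
query, q_vec = "return policy", [1.0, 0.2]
ranked = sorted(docs, key=lambda k: hybrid_score(query, docs[k][0], q_vec, docs[k][1]), reverse=True)
print(ranked)
```

Document "b" shares no keywords with the query, so keyword search alone cannot separate it from the irrelevant "c". The dense score does that job, while the exact-match document "a" still wins overall.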

With structure in place, the model can follow graph paths step by step or use community summaries for large corpora. Real uses in science, online retail, and healthcare show fewer hallucinations and better multi-step reasoning. Key gaps remain, like fast retrieval at scale, high-quality structures, and tight integration without big latency.

🗞️ Is In-Context Learning Learning?

The paper says yes, with clear limits.

Peak results show up when the prompt includes 50-100 examples, not 2 or 5. The prompt acts like a temporary training set, the model reads examples and predicts the next label without changing its weights.
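"The prompt acts like a temporary training set" is literal: the examples are just concatenated in front of the query. Here is a minimal prompt builder; the `Input:`/`Label:` template and the toy parity task are my own choices for illustration, not the paper's exact format.

```python
from typing import List, Tuple


def build_icl_prompt(examples: List[Tuple[str, str]], query: str, instruction: str = "") -> str:
    """Lay out labeled examples followed by the unlabeled query for the model to complete."""
    blocks = [instruction] if instruction else []
    for text, label in examples:
        blocks.append(f"Input: {text}\nLabel: {label}")
    blocks.append(f"Input: {query}\nLabel:")  # the model predicts the next label here
    return "\n\n".join(blocks)


# Toy task (assumption): label a bit string by whether it has an even or odd number of 1s.
examples = [("0110", "even"), ("0111", "odd"), ("0000", "even")]
prompt = build_icl_prompt(examples, "1011", instruction="Classify each input.")
print(prompt)
```

Going from 3 examples to 50 or 100 only means a longer `examples` list. No weights change, which is why the paper has to ask whether this counts as learning at all.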

They define learning as keeping errors low on new data that comes from a different source, which matches standard learning ideas. They test 4 models on 9 formal tasks, and vary prompt style, number of examples, and how train and test data differ.

As the number of examples grows, accuracy rises, and gaps between models and prompt styles shrink. With many examples, wording matters less, even nonsense instructions can match normal prompts by using patterns in the examples.

Accuracy falls when test data shifts away from the examples, and Chain of Thought and automated prompt optimization fail first.

Related tasks still show big gaps, and simple classic methods sometimes beat the average few shot setup. Bottom line, in context learning does learn, but mainly the distribution shown in the prompt, and it generalizes weakly beyond that.

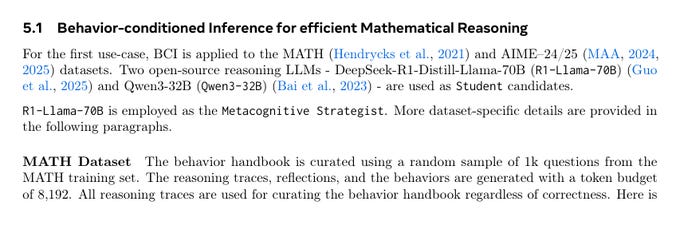

🗞️ Metacognitive Reuse: Turning Recurring LLM Reasoning Into Concise Behaviors

Another fantastic paper from Meta.

Large language models keep redoing the same work inside long chains of thought, so this paper teaches the model to compress those recurring steps into small named behaviors that it can recall later or even learn into its weights. It shows that teaching an LLM a small library of named, reusable “behaviors” lets it reuse thinking steps, cut reasoning tokens by up to 46%, and raise accuracy.

Distilling those behaviors with supervised fine-tuning then bakes the gains into the weights, so the model no longer needs a separate “behavior handbook” pasted into the input to get the benefits.

After supervised fine-tuning on behavior-guided traces, the model internalizes those habits. So at inference, you just give the question, not extra behavior instructions, and it still uses those habits.

For instance, suppose the LLM derives the finite geometric series formula while solving one problem. Can it avoid re-deriving from scratch when similar reasoning is needed for another problem? Current inference loops lack a mechanism to promote frequently rediscovered patterns into a compact, retrievable form.

⚙️ The core problem

LLMs often re-derive the same intermediate steps across different problems, which wastes tokens, adds latency, and squeezes the context window so there is less room to explore. Those repeated sub-procedures include things like case splits, unit conversions, and standard counting tricks that do not need re-derivation every time. The fix proposed here is to convert those recurring fragments into concise named behaviors that can be pulled in on demand.

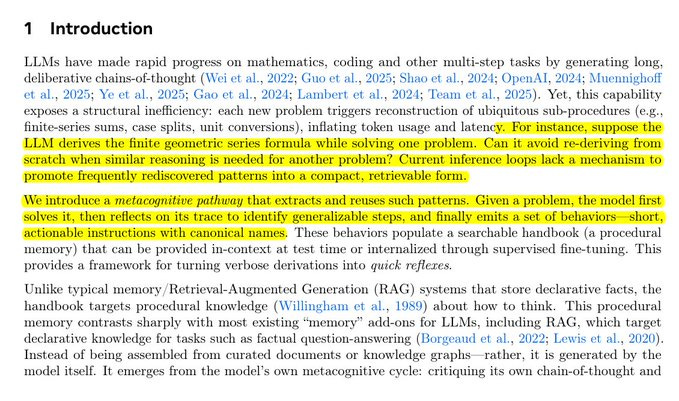

This figure shows the whole pipeline of how the paper makes LLMs save and reuse “behaviors,” which are like short instructions for common reasoning steps. In the left box, the Behavior Curation Pipeline is shown. A model first solves a question, then reflects on its own solution, and finally turns that reflection into a short named behavior. This is repeated across many problems so the model builds up a Behavior Handbook full of reusable instructions.

In the middle right, that handbook is then used in different ways. In Behavior-conditioned Inference, a new model gets both the question and a small set of relevant behaviors as context, so it can solve more efficiently without re-deriving every step. In Behavior-guided Self-improvement, a model uses its own handbook entries to refine its earlier attempts and boost accuracy.

At the bottom right, the pipeline goes further with Behavior-conditioned Supervised Fine-tuning (SFT). Here, a teacher model solves questions with behaviors in context, then those traces are collected as training data. A student model is fine-tuned on this data, so later it can solve problems well without needing the behaviors supplied in the prompt. Overall, the diagram shows how behaviors are created, stored, and then either reused directly in prompts or baked into the weights of a student model. This is the core innovation that cuts token usage and improves accuracy.

📚 Behaviors and the handbook

A behavior is a compact instruction with a canonical name, for example “behavior_inclusion_exclusion,” that tells the model what to do without walking through a long derivation. These behaviors are stored in a searchable behavior handbook, which serves as procedural memory. That makes it different from retrieval systems that store facts, because this one stores how to think rather than what is true. The handbook can be injected at inference or distilled into the model so the model starts using the behaviors implicitly.

🧩 The curation pipeline

The same model first solves a problem, then reflects on its own trace to critique it and spot generalizable steps, and finally emits behaviors as name plus instruction. The system runs 3 prompts in sequence, a solution prompt, a reflection prompt that checks logic and missing shortcuts, and a behavior prompt that converts the takeaways into reusable behavior entries. These entries accumulate into the handbook and become the source for later retrieval or fine-tuning.
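The solve, reflect, extract sequence can be sketched as three chained calls. Treat this as a sketch under assumptions: the prompt wording, the `name: instruction` output format, and the `fake_llm` stub are all hypothetical, since the paper's actual prompts are not reproduced here.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Behavior:
    name: str         # canonical name, e.g. "behavior_inclusion_exclusion"
    instruction: str  # short description of what to do


def parse_behaviors(text: str) -> List[Behavior]:
    """Parse lines shaped like 'behavior_name: instruction' (an assumed output format)."""
    behaviors = []
    for line in text.splitlines():
        name, sep, instruction = line.partition(":")
        if sep and name.strip().startswith("behavior_"):
            behaviors.append(Behavior(name.strip(), instruction.strip()))
    return behaviors


def curate(question: str, llm: Callable[[str], str], handbook: Dict[str, Behavior]) -> List[Behavior]:
    """Run the three prompts in sequence and store the new behaviors in the handbook."""
    solution = llm(f"Solve step by step:\n{question}")
    reflection = llm(f"Critique this solution and note reusable steps or missed shortcuts:\n{solution}")
    raw = llm(f"Write each reusable step as 'behavior_name: instruction', one per line:\n{reflection}")
    new_behaviors = parse_behaviors(raw)
    for behavior in new_behaviors:
        handbook[behavior.name] = behavior
    return new_behaviors


def fake_llm(prompt: str) -> str:
    """Stub model so the sketch runs offline; returns canned text for each stage."""
    if prompt.startswith("Solve"):
        return "Counted |A| + |B| and then subtracted |A and B|."
    if prompt.startswith("Critique"):
        return "The overlap subtraction is a reusable counting step."
    return "behavior_inclusion_exclusion: Subtract the overlap when counting a union.\n(no other behaviors)"


handbook: Dict[str, Behavior] = {}
curate("How many integers from 1 to 100 are divisible by 2 or 5?", fake_llm, handbook)
print(handbook)
```

Swap `fake_llm` for a real model call and the loop stays the same; the handbook is just a dictionary keyed by behavior name.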

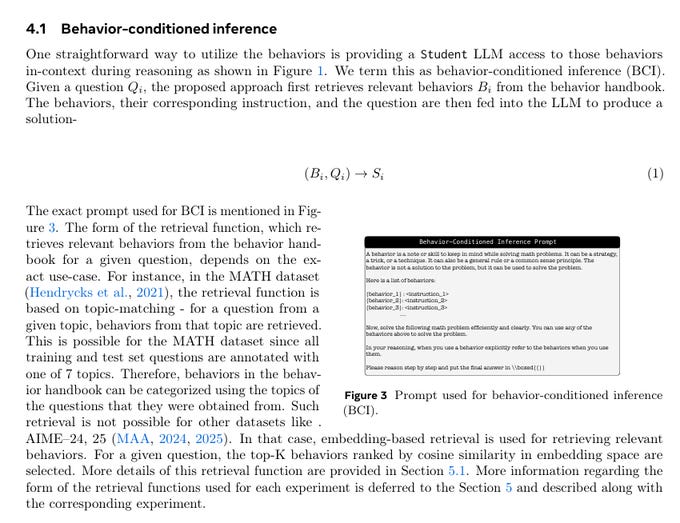

🧰 Behavior-conditioned inference, how it runs

At test time, the system retrieves a small set of relevant behaviors for the incoming question and feeds them as hints alongside the question, then asks the model to reason and explicitly reference any behavior it uses. For MATH, retrieval is simple topic matching across 7 labeled subjects. For AIME, retrieval uses embeddings and cosine similarity so it can scale beyond labeled topics.

The AIME setup encodes every behavior plus its instruction with BGE-M3 and uses a FAISS index to fetch the top 40 behaviors, which keeps lookup fast even with a large library. The input token overhead is manageable because behavior embeddings are pre-computable, input tokens are not generated autoregressively, and many APIs price input cheaper than output, so the net cost still drops when outputs shrink.
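The embedding lookup itself is simple. The sketch below is a pure-Python stand-in for the BGE-M3 plus FAISS setup: the 3-dimensional vectors are hand-written rather than real embeddings, the two extra behavior names are invented, and a brute-force sort replaces the index.

```python
from math import sqrt
from typing import Dict, List


def cosine(u: List[float], v: List[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    norm = sqrt(sum(a * a for a in u)) * sqrt(sum(b * b for b in v))
    return sum(a * b for a, b in zip(u, v)) / norm if norm else 0.0


def top_k_behaviors(query_vec: List[float], behavior_vecs: Dict[str, List[float]], k: int = 40) -> List[str]:
    """Return the names of the k behaviors most similar to the question embedding."""
    ranked = sorted(behavior_vecs, key=lambda name: cosine(query_vec, behavior_vecs[name]), reverse=True)
    return ranked[:k]


# Hand-written toy vectors; a real system would pre-compute these with an embedding model.
behavior_vecs = {
    "behavior_inclusion_exclusion": [0.9, 0.1, 0.0],
    "behavior_unit_conversion": [0.0, 0.2, 0.9],
    "behavior_case_split": [0.6, 0.7, 0.1],
}
question_vec = [1.0, 0.3, 0.0]
print(top_k_behaviors(question_vec, behavior_vecs, k=2))
```

Because the behavior vectors are computed once and reused, the per-question cost is one embedding call plus a nearest-neighbor lookup, which is the part an index like FAISS speeds up on a large library.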

📊 MATH results, shorter traces without hurting accuracy

On MATH-500, behavior-conditioned inference matches or beats the base models while using fewer tokens across budgets 2048, 4096, 8192, 16384, tested on R1-Llama-70B and Qwen3-32B. Across the paper, the best reductions reach 46% fewer reasoning tokens while preserving accuracy, which comes from turning long derivations into quick procedural moves.

🔁 Self-improvement, using its own behaviors

The model can solve a question, extract behaviors from its first attempt, then try again on the same question with those behaviors in context, which beats a plain critique-and-revise loop. At higher budgets the gap widens, delivering up to 10% higher accuracy at 16,384 tokens, which shows that short behavior hints help the model spend extra tokens on the right moves.

That’s a wrap for today, see you all tomorrow.
