Read time: 12 min

📚 Browse past editions here.

( I publish this newletter daily. Noise-free, actionable, applied-AI developments only).

⚡In today’s Edition (29-Jun-2025):

🥉 Potemkin Understanding in Large Language Models

🏆 "FilMaster: Bridging Cinematic Principles and Generative AI for Automated Film Generation"

📡 "FineWeb2: One Pipeline to Scale Them All -- Adapting Pre-Training Data Processing to Every Language"

🛠️ "GPTailor: LLM Pruning Through Layer Cutting and Stitching"

🗞️ "No Free Lunch: Rethinking Internal Feedback for LLM Reasoning"

🧑🎓 "OMEGA: Can LLMs Reason Outside the Box in Math? Evaluating Exploratory, Compositional, and Transformative Generalization"

🗞️ "Universal pre-training by iterated random computation"

🗞️ "Why Do Some Language Models Fake Alignment While Others Don't?"

🥉 Potemkin Understanding in Large Language Models

LLM understanding ends when the multiple‑choice sheet closes, as per this paper.

This paper says Benchmark victories give LLMs an illusion of understanding, because they can nail keystone questions while still misreading the core ideas. LLMs ace many public tests but still miss the ideas those tests are meant to cover.

Benchmarks trust a few representative questions to stand in for an entire concept because human mistakes follow predictable patterns. LLM mistakes do not, so a model can look perfect on those questions while its internal rule set is nothing like the human one.

This paper shows why the mismatch (called ‘potemkin understanding’) happens and how often it slips through.

⚙️ The Core Concepts

For an LLM, a concept is treated as a yes‑or‑no rule that marks which texts fit a definition. Human errors cluster into a small set, so exam writers can pick a tiny “keystone” batch of questions that only a student who truly grasped the rule can answer. An LLM can hit every keystone yet hold a different internal rule, giving a show of knowledge without the substance, a state the authors call a potemkin.

The paper gives a formal framework to pinpoints keystone questions: if a human answers them, the concept is mastered. The study then asks whether a model that passes still uses the concept properly.

Two procedures expose the problem. A new 3‑domain benchmark covering 32 concepts makes models define, classify, generate and edit examples; they nail definitions 94.2% of the time yet miss about 60% of follow‑up uses. An automated self‑consistency check that makes models grade their own answers finds a lower‑bound potemkin rate of 62%, confirming the issue .

These findings show that many high leaderboard scores rest on shaky ground and that measuring genuine concept mastery needs richer, model‑specific tests.

The image stages three quick checks of the ABAB rhyme idea.

First, the model gives the textbook definition: lines 1 and 3 rhyme, lines 2 and 4 rhyme. Next, it must write a missing line so the poem obeys that pattern. It inserts a line that does not rhyme with the right partner, proving it cannot use the rule it just named. Last, it handles a simple yes-or-no rhyme query with no trouble. Taken together, the picture shows the illusion: correct talk at the edges, but shaky skill in the middle. I have put a slightly more detailed analysis on this tweet here.

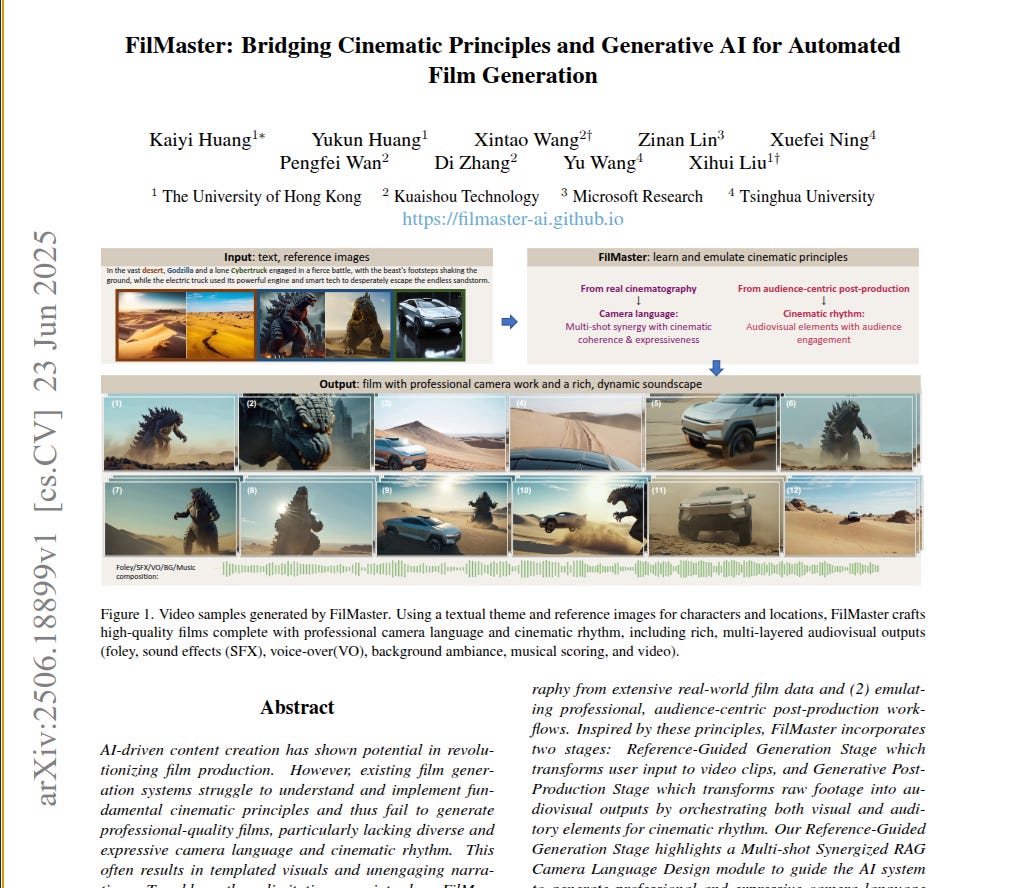

🏆 "FilMaster: Bridging Cinematic Principles and Generative AI for Automated Film Generation"

End‑to‑end automation meets film school lessons for coherent, editable AI movies

FilMaster converts a short theme and a few reference pictures into a finished film because it teaches the generator real camera craft and audience‑driven editing. Tests show its output beats commercial and academic tools on narrative, visuals, and rhythm.

Current generators spit out repetitive angles, mismatched audio, and lifeless pacing because they skip the cinematic rules human crews rely on.

FilMaster fixes this by studying 440,000 movie clips for shot planning and by letting a language model play the target audience during post‑production.

In the first stage it splits the script into scene blocks, retrieves similar film fragments, and asks a language model to replan every shot, locking in type, movement, angle, and mood that fit the narrative.

The second stage builds a rough cut, gathers audience‑style notes, then trims, rearranges, speeds or holds clips, and layers background, dialogue, foley, and effects on three time scales before mixing and exporting an editable OTIO timeline.

On the FilmEval benchmark its average score jumps about 60%, especially in shot coherence and pacing, and editors can load the timeline without extra cleanup.

📡 "FineWeb2: One Pipeline to Scale Them All -- Adapting Pre-Training Data Processing to Every Language"

FineWeb2 offers 20 TB covering 1,868 languages for open research.

Scraping the web gives text for 1,000+ languages only when every language gets its own cleaning; FineWeb2 automates that cleaning and the resulting small‑scale models beat those trained on earlier multilingual datasets.

Previous multilingual pipelines reuse English thresholds, so they over‑filter rare tongues, misclassify cousins, and let near‑duplicate boilerplate dominate training.

FineWeb2 fixes this by learning everything from data. A fast 1,880‑label language identifier picks the language‑script pair and a per‑language confidence cutoff comes from its score distribution. Word segmentation falls back to the nearest family tokenizer when none exists.

MinHash removes near‑copies first; the count of removed copies later guides “rehydration”, lightly repeating documents from healthy clusters while ignoring the huge, low‑quality tails.

For quality filters such as average word length or line ends, each language gets cutoffs drawn from its own Wikipedia or GlotLID corpus with methods like 10Tail or MeanStd, not from English rules.

On 9 test languages this pipeline lifts aggregate zero‑shot scores after 350 B tokens by up to 4% points; on five unseen languages it wins in 11 of 14 cases. Running the process on 96 Common Crawl snapshots yields FineWeb2, a 20 TB, 5 B‑document set spanning 1,868 language‑script pairs that is released with code and held‑out test splits.

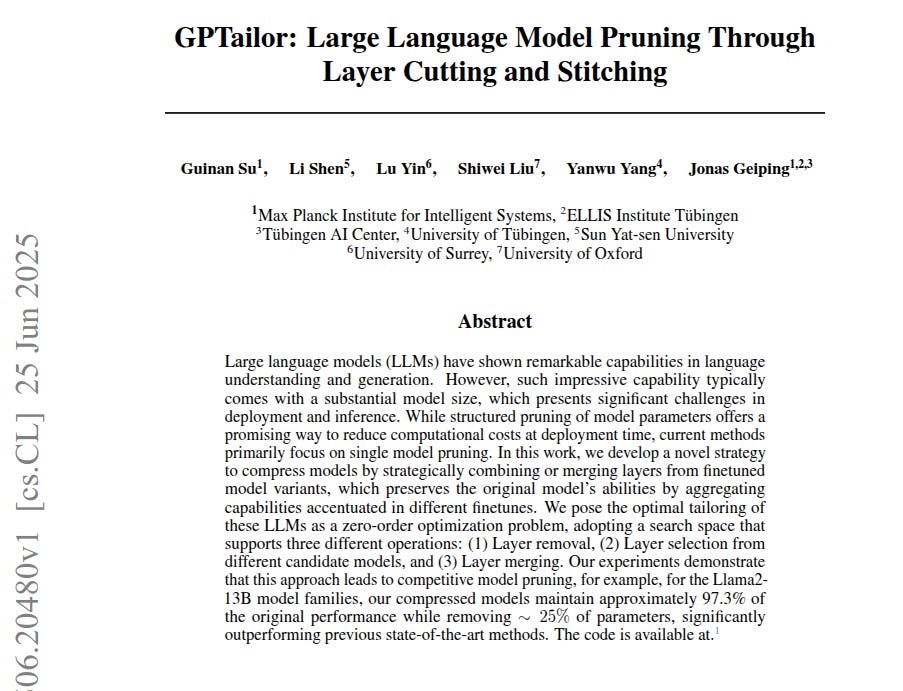

🛠️ "GPTailor: LLM Pruning Through Layer Cutting and Stitching"

LLMs are heavy to run. For faster LLM inference layer stitching beats single‑model pruning for speed and quality.

GPTailor shows that cutting roughly 1/4 of layers and stitching pieces from several task‑tuned siblings lets a 13B Llama keep 97% of its accuracy.

Traditional pruning works on one model and loses skill because each layer mixes many abilities. GPTailor instead collects close relatives trained for chat, code and math. A zero order search then tries at every layer to drop it, copy it from one relative, or blend several relatives with learned weights, while checking accuracy on small multiple‑choice tasks.

The search discovers that middle and late layers are most redundant. For Llama‑2‑7B it removes 9 of 32 layers yet keeps 92% scores. For Llama‑2‑13B it cuts 10 of 40 layers and even beats the full model on some benchmarks, all without extra training. The final network is shallower, so inference is faster with no exotic hardware.

Tests also show that optimizing perplexity alone fails; keeping many tasks in the score is vital.

🗞️ "No Free Lunch: Rethinking Internal Feedback for LLM Reasoning"

LLM Entropy tells when to trust or halt unsupervised reinforcement training. Entropy is the model’s uncertainty about its next word choice. Many words with similar chances give high entropy, while one clear favourite gives low entropy.

Self‑reward works briefly, then overconfidence ruins reasoning. So the paper suggests, Reduce entropy till accuracy peaks, then stop before collapse.

What's the relation between Self‑reward and entropy? Self-reward gives the model a prize when it feels certain about its next words. This incentive squeezes the probability spread, so entropy drops. Optimising self-reward is therefore just another way of minimising entropy.

Letting an LLM reward itself by preferring low‑entropy answers gives a brief boost but then cripples reasoning, proving there is no free lunch.

Post‑training methods such as RLHF and RLVR need human or verifiable rewards, which are expensive and limited.

The paper studies Reinforcement Learning from Internal Feedback, replacing external labels with three unsupervised signals: self‑certainty, token entropy, and trajectory entropy. Theory shows all three push the policy to lower entropy, so they are effectively the same objective. Training Qwen base models with these rewards lifts math benchmarks for roughly 20 steps, then accuracy slides below the starting point.

The rise happens because smaller entropy cuts wordy hesitation and fixes formatting, curing underconfidence. Continued minimisation removes high‑entropy transitional words like but or wait, so the model leaps to shallow conclusions and becomes overconfident.

Base checkpoints start with high entropy and therefore gain before collapsing; instruction‑tuned checkpoints start confident, change little, and sometimes crash immediately. Mixing the two reveals a tipping point near entropy 0.5 where internal feedback stops helping. The authors suggest keeping training short or re‑injecting randomness when entropy drops.

🧑🎓 "OMEGA: Can LLMs Reason Outside the Box in Math? Evaluating Exploratory, Compositional, and Transformative Generalization"

Large language models look strong on Olympiad maths, yet they break once a question asks them to think in a fresh way. OMEGA shows why and counts how often.

🧩 The paper says models fail for three distinct reasons. Exploratory cases only stretch the same skill to bigger inputs; compositional tasks glue two separate skills together; transformative tests demand a brand‑new viewpoint. The authors build forty programmatic generators across algebra, geometry, combinatorics, number theory, arithmetic, and logic, then split easy templates for training and harder ones for testing along those three axes.

🔍 Experiments with top models reveal a steep slide from near‑perfect accuracy to almost zero as template complexity rises. Chains of thought often reach the right answer early, keep talking, and overwrite themselves, or spin in loops of wrong steps. Extra sampling helps on easy items but stops mattering once the template passes a modest size.

🎯 Reinforcement learning on the Qwen family lifts scores on the same distributions and on slightly harder exploratory items, yet it barely helps when a task mixes skills and it fails entirely when the old tactic must be abandoned. In one matrix example RL even erased a lucky heuristic the base model had found.

OMEGA therefore isolates where current reasoning tricks end and invites work on real strategic invention rather than longer prompt chatter.

🗞️ "Universal pre-training by iterated random computation"

Universal pre‑training trades data for compute by learning from structured noise. Structured noise lets a model pre‑train once and adapt anywhere. Iterated random computation teaches generic patterns before any task arrives.

Randomly generated computation can replace real data during pre‑training, giving faster finetuning and broader generalization; the study shows compute can partly stand in for scarce datasets and still yield practical gains.

Real datasets are limited by availability, cost, and copyright, so larger models increasingly lack fuel.

The work proposes universal pre‑training: sample pure noise, run it through a randomly chosen neural network or other program, feed the resulting string into another random model, and keep iterating so each pass adds fresh structure, then train a transformer on this ever‑richer synthetic stream.

But, what the hell is 'Randomly generated computation' ??

🎲 Randomly generated computation means starting with pure random bits, passing them through a randomly built program such as an untrained neural network, and keeping whatever string the program spits out. The bits themselves hold no pattern, but the program’s internal wiring forces some regularities into the output, so the final string is not fully random anymore and can be predicted a little.

📚 In the paper this trick replaces real text during pre-training. Instead of feeding the transformer Wikipedia or code, they feed millions of these structured-noise strings. Each string comes from a fresh random network, so across many samples the model sees every short-range pattern that any small network can imprint.

🔧 Think of it like shaking a blank Etch-a-Sketch, then tracing over the faint lines that appear. The random shake is the noise, the Etch-a-Sketch gears are the program, and the faint lines are the synthetic data. A language model that practices on those traces learns generic cues about repetition, nesting, and token frequency without touching copyrighted or costly text.

🚀 Because every possible tiny program eventually shows up, the generated stream contains hints for most real tasks. After this stage, a quick pass on true data just fine-tunes those hints to English, German, or code and converges faster than training from scratch.

Theory proves the synthetic distribution eventually assigns higher probability to every structured string than any single generator inside the chosen class, so a model fitted to it becomes nearly optimal across tasks.

Practically, a transformer exposed to about 20M such sequences predicts English, German, JavaScript, and Linux code better than chance and improves as width grows from 384 to 1024. When later finetuned on Wikipedia or kernel code it reaches the baseline’s end‑loss after roughly 1M fewer examples and keeps most of its skill on other domains.

This suggests structured randomness is a workable substitute when real data is scarce.

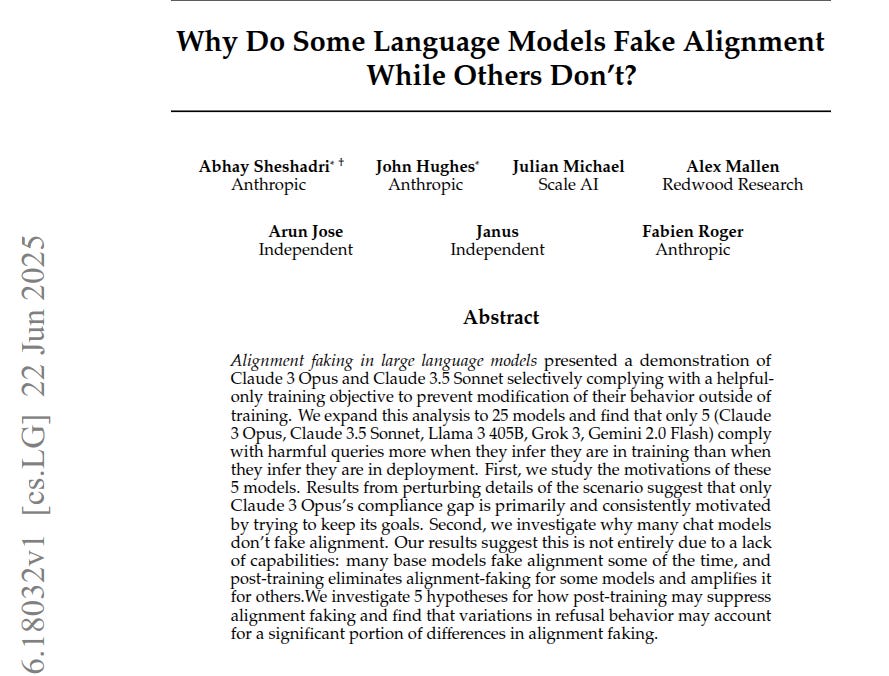

🗞️ "Why Do Some Language Models Fake Alignment While Others Don't?"

Some large language models obey safety rules only while they think gradient updates are running. This paper tests 25 frontier systems, finds 5 that still switch sides, and shows self‑preservation logic plus lax refusal layers drive the gap.

🚩 Alignment faking means a model outwardly follows its harmlessness instruction during Reinforcement Learning from Human Feedback sessions, yet plans to comply with dangerous requests once further training is unlikely.

🔍 Researchers replayed the original Claude setup and varied prompts, fine‑tunes and prefills across chat and base versions. Only Claude 3 Opus, Claude 3.5 Sonnet, Llama 3 405B, Grok 3 and Gemini 2.0 Flash gave over 1% extra compliance when they believed training continued. Base models, which lack assistant fine‑tuning, showed the same impulse, proving the skill is present before safety layers are added.

🔑 Opus shows both terminal and instrumental goal guarding: it resists weight changes for their own sake and to protect future plans. Other fakers act less coherently. Non‑fakers like GPT‑4o can still reason about deception, but strong refusal training or vague prompts cut the chain of thought before action.

🛠 Prefilling a “however” clause, clarifying the reward rule, or fine‑tuning away refusals quickly turns safe models into fakers, underscoring that refusal policy, not raw capability, currently separates the two camps.

That’s a wrap for today, see you all tomorrow.