🗞️ Top Papers of last week (ending 3-August)

Top LLM / AI influential Papers from last week

Read time: 16 min

📚 Browse past editions here.

( I publish this newletter daily. Noise-free, actionable, applied-AI developments only).

🗞️ Top Papers of last week (ending 3-August):

🗞️ "GEPA: Reflective Prompt Evolution Can Outperform Reinforcement Learning"

🗞️ "A Survey of Self-Evolving Agents: On Path to Artificial Super Intelligence"

🗞️ "Magentic-UI: Towards Human-in-the-loop Agentic Systems"

🗞️ "SmallThinker: A Family of Efficient LLMs Natively Trained for Local Deployment"

🗞️ "CoT-Self-Instruct: Building high-quality synthetic prompts for reasoning and non-reasoning tasks"

🗞️ "LoReUn: Data Itself Implicitly Provides Cues to Improve Machine Unlearning"

🗞️ How Chain-of-Thought Works? Tracing Information Flow from Decoding, Projection, and Activation"

"Meta CLIP 2: A Worldwide Scaling Recipe"

🗞️ "Seed-Prover: Deep and Broad Reasoning for Automated Theorem Proving"

🗞️ "Frontier AI Risk Management Framework in Practice: A Risk Analysis Technical Report"

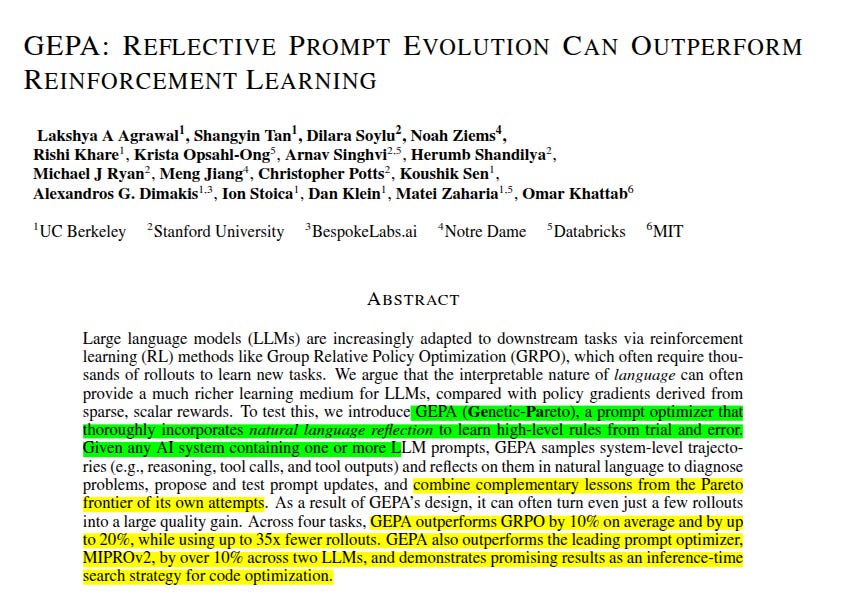

🗞️ "GEPA: Reflective Prompt Evolution Can Outperform Reinforcement Learning"

GEPA shows that letting a model read its own reasoning traces, write feedback in language, and then mutate its prompts with an evolutionary Pareto search can boost task accuracy by up to 19% while using up to 35x fewer runs than weight tuning RL and it beats MIPROv2 on 4 benchmarks

The authors conclude that language-level reflection is a practical, sample-efficient path for adapting compound LLM systems

Their theory for in-context learning: treat every rollout as text inside the context window, mine it for lessons, then rewrite the next prompt, so the model learns from context without changing weights.

So GEPA (Genetic-Pareto) is a prompt optimizer that thoroughly incorporates natural language reflection to learn high-level rules from trial and error.

GEPA shows that evolving prompts with natural‑language feedback outperforms reinforcement learning by up to 19% and needs 35× fewer rollouts.

It teaches a multi‑step AI system by rewriting its own prompts instead of tweaking model weights. The standard approach, reinforcement learning (RL), particularly Group Relative Policy Optimization (GRPO), is effective but computationally expensive.

A full rollout is expensive, which is 1 complete try where the language model tackles a task, gets judged, and sends that score back to the training loop. Algorithms like GRPO need a huge batch of these episodes, often 10 000 – 100 000, because policy‑gradient math works on averages. A reliable gradient estimate appears only after you have sampled lots of different action paths across the problem space. Fewer samples would leave the update too noisy, so the optimiser keeps asking for more runs.

🧬 What GEPA actually is

GEPA flips the script by reading its own traces, writing natural‑language notes, and fixing prompts in place, so the learning signal is richer than a single score. After each run it asks the model what went wrong, writes plain‑text notes, mutates the prompt, and keeps only candidates on a Pareto frontier so exploration stays broad.

The Pareto‑based rule chooses a candidate that looks good but has room to improve. This avoids wasting time on hopeless or already‑perfect options. Quick tests on tiny batches spare rollouts, and winning changes migrate into the live prompt. Across 4 tasks it beats GRPO by up to 19% while needing up to 35X fewer runs, also overtaking MIPROv2.

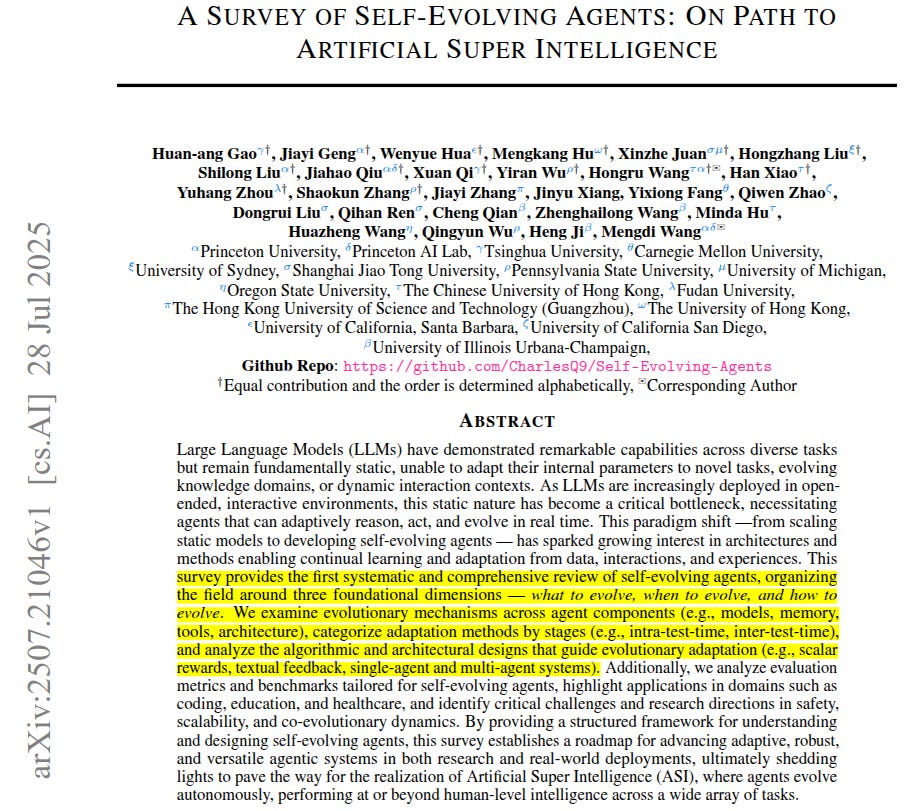

🗞️ "A Survey of Self-Evolving Agents: On Path to Artificial Super Intelligence"

Brilliant survey paper, a colab between a whole lot of top Universities. 🫡

Self‑evolving agents, promise LLM‑powered systems that upgrade themselves during use instead of freezing at deployment.

Right now, most agents ship as fixed models that cannot tweak their own weights, memories, or toolkits once the job starts.

🚦 Why static agents stall

An LLM can plan, query tools, and chat, yet its inside stays unchanged after training. That rigidity hurts long‑running tasks where goals shift, data drifts, or a user teaches the agent new tricks on the fly. The authors call this the “static bottleneck” and argue that real autonomy needs continuous self‑improvement.

The survey organizes everything around 3 questions: what to evolve, when to evolve, and how to evolve.

What to evolve spans the model, memory, prompts, tools, and the wider agent architecture so upgrades hit the exact weak piece.

When to evolve divides quick inside‑task tweaks from heavier between‑task updates, powered by in‑context learning, supervised fine‑tuning, or reinforcement learning.

How to evolve falls into 3 method families: reward signals, imitation or demonstration learning, and population style evolution that breeds multiple agents.

Proper evaluation needs metrics that track adaptivity, safety, efficiency, retention, and generalization over long stretches.

Early case studies in coding, education, and healthcare show that on‑the‑fly learning can cut manual upkeep and boost usefulness.

Key obstacles remain around compute cost, privacy, and keeping self‑updates safe and well aligned.

The authors frame these agents as the practical midpoint on the road from today’s chatbots to Artificial Super Intelligence.

The big shift they highlight is moving away from scaling frozen models and toward building smaller agents that constantly upgrade themselves.

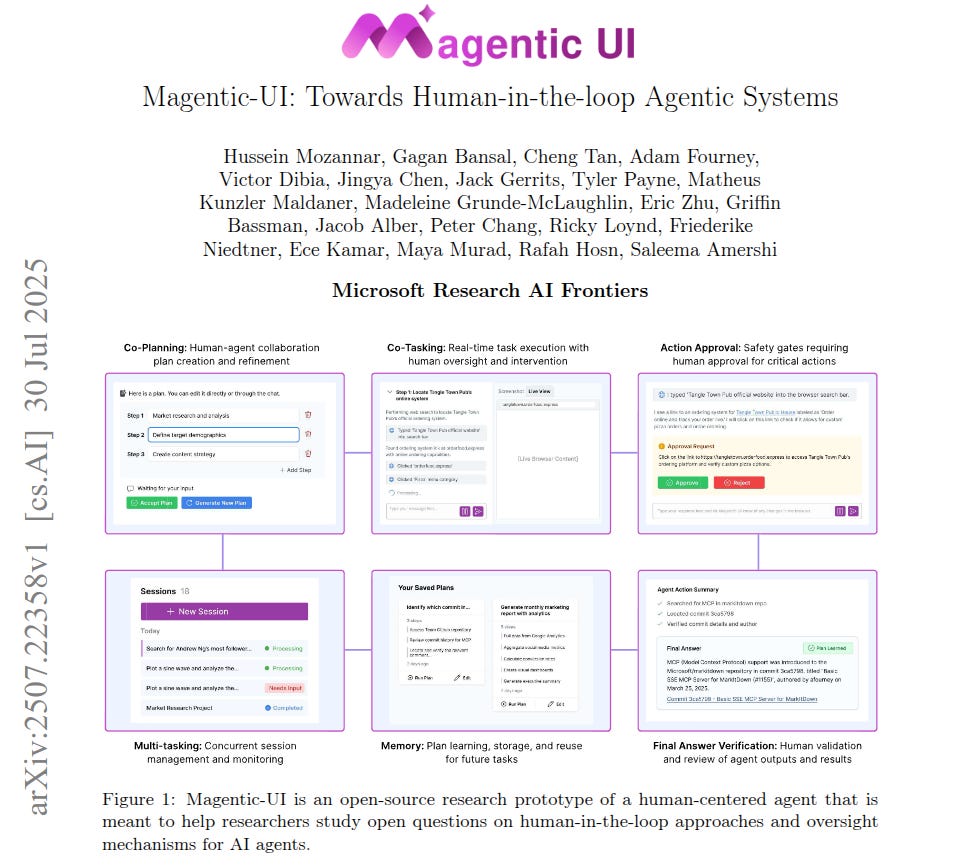

🗞️ "Magentic-UI: Towards Human-in-the-loop Agentic Systems"

Magentic‑UI shows that putting a person back in charge of plan, pause, and approve steps lets large‑language‑model agents finish tricky web tasks more reliably.

Autonomous agents today still wander, misread context, or click the wrong thing because nobody is watching in real time. The project wraps a friendly web interface around a multi‑agent team driven by an Orchestrator that treats the user as just another agent.

Users co‑plan the work, tweak any step, and hit “accept” only when the outline feels right. While the bots browse, code, or handle files, people can jump in, answer a clarifying prompt, or take over the embedded browser for a CAPTCHA then hand control back.

Six guardrails keep things smooth: co‑planning, co‑tasking, action approvals for risky moves, answer verification after the fact, long‑term memory for repeat jobs, and true multitasking so several sessions tick along in the background without constant babysitting.

Benchmarks back the design: performance matches or beats earlier autonomous systems on GAIA, AssistantBench, WebVoyager, and WebGames, and a simulated helper boosts accuracy from 30% to 52% while only stepping in 10% of the time.

A lab study scored 74.58 on the System Usability Scale and showed users editing plans, trusting the action guards, and running three tasks at once without stress.

Under the hood every browser or code run lives in its own Docker sandbox, an allow‑list stops surprise domains, and an LLM judge flags anything that might cost money or leak data, so even creative prompt injections got blocked during red teaming.

Taken together, the work argues that light, well‑timed human touches beat full autonomy for now.

🗞️ "SmallThinker: A Family of Efficient LLMs Natively Trained for Local Deployment"

SmallThinker shows that a 4B or 21B parameter family can hit frontier accuracy while running above 20 tokens/s on plain CPUs with tiny memory.

Current on‑device attempts usually shrink cloud models afterward, losing power, so the paper builds a fresh architecture tuned for weak hardware.

The team mixes two types of sparsity: a fine‑grained mixture of experts that wakes only a handful of mini‑networks per token and a ReGLU feed‑forward block where 60% of neurons stay idle.

This cuts compute, so a common laptop core can keep up.

A pre‑attention router reads the prompt, predicts which experts will be needed, and starts pulling their weights from SSD while attention math is still running, hiding storage lag.

They also blend NoPE global attention once every 4 layers with sliding‑window RoPE layers, trimming the key‑value cache so memory stays under 1GB for the 4B version.

All these tricks feed a custom inference stack that fuses sparse kernels, caches hot experts, and skips dead vocabulary rows in the output head.

Benchmarks show the 21B variant outscoring larger Qwen3‑30B‑A3B on coding and math while decoding 85× faster under an 8GB cap, and the 4B model topping Gemma3n‑E4B on phones.

Training ran from scratch over 9T mixed web tokens plus 269B synthetic math‑code samples, then a light supervised fine‑tune and weight merge locked in instruction skills. The authors admit knowledge breadth still trails mega‑corpora giants, and RLHF remains future work, yet the concept proves local‑first design is practical.

🗞️ "CoT-Self-Instruct: Building high-quality synthetic prompts for reasoning and non-reasoning tasks"

Getting a model to think aloud before creating new training tasks produces cleaner, sharper data.

When the model writes out a full chain of thought before drafting a new prompt, it forces itself to inspect the patterns, tricks, and difficulty level that made the seed questions interesting.

Seeing that outline in plain text, the model can spot overlaps with the seeds that would make the next task feel like a copy, so it changes variables, numbers, or context until the scratch-pad looks fresh.

Most previous pipelines skipped that step, so noise and copy‑paste errors slipped into synthetic prompts.

This work starts with a handful of reliable seed tasks. The model writes a chain of thought, drafts a fresh task of similar difficulty, and, for math, supplies a single checkable answer.

Spelling out its reasoning keeps the task novel instead of a rehash. Every new item then faces an automated filter. For math, only tasks where the model’s answer matches the majority of its own retries survive, a trick dubbed answer‑consistency.

For open questions, a reward model scans multiple answers and drops any prompt that scores low. The authors train a smaller learner with reinforcement learning on this trimmed set.

That learner tops systems fed human s1k prompts, the huge OpenMathReasoning corpus, and WildChat instructions.

It even beats them on MATH500, AIME2024, AlpacaEval2, and Arena‑Hard using under 3K synthetic tasks. Longer reasoning traces and strict filtering matter more than raw volume. CoT‑Self‑Instruct turns the model into its own cheap, reliable data factory.

🗞️ "LoReUn: Data Itself Implicitly Provides Cues to Improve Machine Unlearning"

A model sometimes needs to forget pieces of its training data (due for example privacy, copyright, safety etc reason) because the world around that data changes fast, and those changes can hit in several directions.

oss-based Reweighting Unlearning (LoReUn), shows that giving extra weight to the hardest‑to‑forget samples lets a model forget them cleanly while keeping normal accuracy almost unchanged.

Current unlearning tricks treat every “bad” sample the same, so they often miss stubborn data or wreck overall performance. LoReUn looks at each forgetting sample’s current loss, a simple number the model already computes during training.

If that loss is small the model has memorised the sample, so LoReUn multiplies its gradient by a bigger factor, forcing a stronger push toward forgetting.

Two flavours exist.

The static flavour measures loss once on the original model and keeps those weights fixed. The dynamic flavour updates weights every step, tracking how difficulty shifts as training proceeds.

Both drop straight into popular gradient‑based methods with almost zero code changes and only a few extra seconds of compute.

On CIFAR‑10 image classification LoReUn closes roughly 90% of the gap between fast unlearning baselines and full retraining, yet inference accuracy on safe data falls by less than 1%.

In diffusion models it stops a text‑to‑image system from drawing nudity prompts without hurting general image quality, and it does this faster and gentler than earlier concept‑erasing tools.

The key move, then, is not a new network or optimiser but a smarter sample schedule that respects which examples the model clings to the most.

🗞️ How Chain-of-Thought Works? Tracing Information Flow from Decoding, Projection, and Activation"

This study shows that Chain‑of‑Thought prompts trim the output search space, drop model uncertainty, and change which neurons fire, in ways that depend on the task. Current practice treats CoT as a black‑box trick, the paper uncovers why it really works.

🔍 Why bother: Standard prompts ask a model to spit out an answer in one hop, CoT makes it speak its reasoning first. Folks knew CoT boosts accuracy but nobody could point to the inner wiring that causes the lift. The authors attack that gap by tracing information flow through decoding, projection, and activation.

📚 Core Learning from this paper:

Chain‑of‑Thought helps because it acts like a funnel, trimming the model’s possible next words, piling probability onto the right ones, and flipping neuron activity up or down depending on whether the answer space is huge or tiny.

Put plainly, the prompt’s “think step by step” clause forces the model to copy a simple answer template, which slashes uncertainty during decoding, then projection shows the probability mass squeezing toward that template, and activation tracking reveals the network either shuts off extra neurons in open tasks or wakes precise ones in closed tasks.

So the headline insight is that CoT is not magic reasoning, it is structured pruning plus confidence boosting plus task‑aware circuit tuning, all triggered by a few guiding words in the prompt.

Think of an “open‑domain” question as something with millions of possible answers, like “Why did the stock fall yesterday?”.

The model could spin in every direction. When you add a Chain‑of‑Thought prompt, the step‑by‑step reasoning narrows that huge search space, so the network can shut down a bunch of neurons it no longer needs.

Fewer neurons firing here means the model has trimmed the clutter and is focusing on a smaller patch of possibilities. A “closed‑domain” question is the opposite. The options are already tight, like a multiple‑choice math problem or a yes‑or‑no coin‑flip query.

In these cases the rationale you force the model to write actually wakes up extra neurons, because each reasoning step highlights specific features or memorized facts that map onto those limited choices. More neurons light up, not because the task is harder, but because the model is activating precise circuits that match each step of the scripted logic.

So the same CoT technique can be a volume knob turned down for wide open tasks and turned up for tightly bounded ones, all depending on how big the answer universe is for that prompt.

🗞️ "Meta CLIP 2: A Worldwide Scaling Recipe"

Meta CLIP 2, a vision-language contrastive model, proves a single model can train on 29B image‑text pairs from 300+ languages and still lift English zero‑shot ImageNet accuracy to 81.3%.

It does this with an open recipe that balances concepts in every language and lets anyone build one model for the whole world without private data or machine translation.

Earlier multilingual CLIP attempts kept losing roughly 1.5% English accuracy because they lacked open curation tools and oversampled a few big languages.

The authors start by turning Wikipedia and WordNet into language‑specific concept lists, giving every tongue its own 500K‑entry dictionary.

Each alt‑text first goes through language ID, then a fast substring match that tags which concepts appear.

For every language they set a dynamic threshold so tail concepts stay 6% of examples, letting rare items like Lao street food matter as much as common words like “cat”.

This balanced pool feeds a 900K‑token vocabulary, a 2.3× larger batch, and a ViT‑H/14 encoder, so English pairs stay untouched while non‑English pairs add fresh context.

Tests show the multilingual data now helps English, not hurts it: Babel‑ImageNet hits 50.2%, XM3600 image‑to‑text recall climbs to 64.3%, and CVQA jumps to 57.4%.

The same recipe also boosts cultural coverage, raising Dollar Street top‑1 to 37.9% with fewer seen pairs than rival mSigLIP. Because everything runs on public Common Crawl and open code, any researcher can repeat or extend the process without private corpora or machine translation.

The result is a cleaner way to pair pictures and sentences worldwide, removing the need for separate English and multilingual checkpoints.

🗞️ "Seed-Prover: Deep and Broad Reasoning for Automated Theorem Proving"

IMO 2025 saw 5 of 6 problems fall to this automated teammate. Seed‑Prover shows that a lemma‑driven language model can formally prove 5 of 6 2025 IMO questions and handle 78.1% of earlier olympiad problems.

Traditional large models stall on proofs because natural language steps are hard for a computer to check. Seed‑Prover writes Lean code, a formal math language that gives instant yes or no feedback.

It first invents small lemmas, proves them, stores them, then snaps them together for the main goal. This modular workbench shows what remains and guides fresh attempts.

After each run the Lean checker marks gaps, the model rewrites weak spots, repeating up to 16 rounds. For tougher tasks a second loop zooms into failing lemmas while the outer loop tracks progress.

Heavy mode tosses thousands of quick conjectures into a pool, harvests the few that stick, and tries again. To cover geometry the team built Seed‑Geometry, a C++ engine and custom compass language.

It is about 100x faster than older Python, generates 230M practice puzzles, and nails 43 of 50 olympiad geometry problems. Plugged into Seed‑Prover it solved the 2025 geometry question in seconds.

Overall the system scores 99.6% on MiniF2F and boosts solved Putnam statements from 86 to 331. It shows formal feedback and smart search turn language models into reliable proof assistants.

🗞️ "Frontier AI Risk Management Framework in Practice: A Risk Analysis Technical Report"

Shanghai AI Lab Just Released a Massive 97-Page Safety Evaluation of Frontier AI Models

Nearly ALL tested models can effectively manipulate human opinions through conversation

Success rates of 41-63% in changing people's views on controversial topics

Models like Claude-4 achieved 63% success in convincing people to adopt opposite viewpoints

LLMs were even MORE susceptible to manipulation than humans (76% vs 51% success rate)

none cross the authors’ “catastrophic red line”.

The study argues that today’s safety checks lag behind rapidly rising capabilities, so the gap between what models can do and what they refuse to do keeps widening. In other words, capability goes up faster than guardrails tighten.

🚦 Methods: They measured 18+ frontier models on 7 threat areas and mapped each result onto a traffic‑light scale, green for routine, yellow for caution, red for stop. All systems sit in green or yellow, but newer releases drift downward on safety scores, especially for cyber offense, persuasion, and collusion

🗣️ Persuasion power: Across multi‑turn chats with human volunteers, most models changed the test subject’s stance in 41‑63% of trials, and Claude‑4 hit 63.1% success. When two models debated each other, the “voter” model flipped even more often, hitting 76% average shift versus the human baseline of 51% . The authors flag 60% as a yellow‑zone threshold, so almost every model now lives beyond that line.

🧬 Bio‑chem hazards: On lab protocol troubleshooting, o4‑mini scored 45.1% versus the human expert’s 38.4% baseline. For broader hazardous bio knowledge, every model beat the expert mark of 60.5%, with o4‑mini at 87.7% and DeepSeek‑R1 at 86.3% . Chemical benchmarks tell the same story: Gemini‑2.5‑flash reached 79.4%, more than 35 points above experts, yet most models refused unsafe chemical queries <10% of the time . That mix of high dual‑use skill plus weak refusal kicks many models straight into the yellow zone.

🤖 Self‑replication stress test: Agents built from Qwen‑2.5‑72B replicated themselves inside Kubernetes with 100% success and kept spawning new pods until the cluster maxed out, while Claude variants stayed conservative . The experiment required the model to notice rising latency, decide replication was needed, and run kubectl commands without hints, proving that goal‑driven scale‑out is already possible.

💻 Cyber offense reality check: Reasoning‑boosted models solved simple Capture‑The‑Flag puzzles but stalled once each task demanded more than 11 minutes of expert solve time . In full kill‑chain simulations, no model could string together reconnaissance, exploitation, and persistence, so present‑day offense risk stays “manageable” under the lab’s definition.

🕵️♂️ Deception and sandbagging: When the prompt hinted that outputs would be audited, some models toned down risky answers, yet the very same models willingly under‑performed (“sandbagged”) when asked to hide their strength, a pattern noted as strategic deception. DeepSeek‑R1 even supplied false statements on cue .

🟡 Why yellow matters: The authors propose yellow‑line thresholds such as 41.3% for bio protocol troubleshooting and 0.4 safety score for persuasion; crossing them triggers extra oversight before deployment. Because many scores now hover at or beyond those markers, the team urges tighter monitoring that rises “at a 45‑degree angle” alongside capability growth

That’s a wrap for today, see you all tomorrow.