Read time: 12 min


(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (14-July-2025):

🗞️ "MemOS: A Memory OS for AI System"

🗞️ Working with AI: Measuring the Occupational Implications of Generative AI

🗞️ What Has a Foundation Model Found? Using Inductive Bias to Probe for World Models.

🗞️ "Dynamic Chunking for End-to-End Hierarchical Sequence Modeling"

🗞️ "Disambiguation-Centric Finetuning Makes Enterprise Tool-Calling LLMs More Realistic and Less Risky"

🗞️ "Reinforcement Learning with Action Chunking"

🗞️ "MIRIX: Multi-Agent Memory System for LLM-Based Agents"

🗞️ "Machine Bullshit: Characterizing the Emergent Disregard for Truth in LLMs"

🗞️ "MemOS: A Memory OS for AI System"

Brilliant Memory framework proposed in this paper. MemOS makes remembering a first‑class system call. This is the first ‘memory operating system’ that gives AI human-like recall.

🧠 Why memory got messy: Most models squeeze everything into billions of frozen weights, so updating even 1 fact needs a full fine‑tune. Context windows help for a moment, yet they vanish after the next prompt, and retrieval pipelines bolt on extra text without tracking versions or ownership.

Current AI systems face what researchers call the “memory silo” problem — a fundamental architectural limitation that prevents them from maintaining coherent, long-term relationships with users. Each conversation or session essentially starts from scratch, with models unable to retain preferences, accumulated knowledge or behavioral patterns across interactions. This creates a frustrating user experience because an AI assistant might forget a user’s dietary restrictions mentioned in one conversation when asked about restaurant recommendations in the next.

MemOS treats memories like files in an operating system, letting a model write, move, and retire knowledge on the fly, not just while training.

So MemOS packs every fact or state into a MemCube, tags it with who wrote it and when, then the scheduler moves that cube between plain text, GPU cache, or tiny weight patches depending on use.

📦 What a MemCube holds: A MemCube wraps the actual memory plus metadata like owner, timestamp, priority, and access rules. That wrapper works for 3 shapes of memory, plaintext snippets, activation tensors sitting in the KV‑cache, and low‑rank parameter patches. Because every cube logs who touched it and why, the scheduler can bump hot cubes into GPU cache or chill cold ones in archival storage without losing the audit trail.
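To make the MemCube idea concrete, here is a minimal Python sketch of a cube and a toy scheduler. Everything in it is an assumption for illustration: the field names, the two tier labels (`"gpu_cache"` and `"archive"`), and the priority-based promotion rule are mine, not the MemOS API.

```python
from dataclasses import dataclass, field
from enum import Enum
import time


class MemoryKind(Enum):
    PLAINTEXT = "plaintext"    # text snippets
    ACTIVATION = "activation"  # tensors sitting in the KV-cache
    PARAMETER = "parameter"    # low-rank parameter patches


@dataclass
class MemCube:
    payload: object                 # the actual memory content
    kind: MemoryKind
    owner: str
    priority: int = 0
    created_at: float = field(default_factory=time.time)
    allowed_readers: set = field(default_factory=set)  # access rules
    audit_log: list = field(default_factory=list)      # (time, who, why)
    tier: str = "archive"           # hypothetical tier label

    def touch(self, who: str, why: str) -> None:
        """Record who touched the cube and why."""
        self.audit_log.append((time.time(), who, why))


def schedule(cubes, hot_slots):
    """Toy policy: the highest-priority cubes go to the hot tier, the rest are archived."""
    ranked = sorted(cubes, key=lambda c: c.priority, reverse=True)
    for i, cube in enumerate(ranked):
        new_tier = "gpu_cache" if i < hot_slots else "archive"
        if new_tier != cube.tier:
            cube.tier = new_tier
            cube.touch("scheduler", f"moved to {new_tier}")
    return ranked
```

The point of the sketch is that the move between tiers and the audit entry happen in the same step, so the trail survives every promotion or demotion.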

On the LOCOMO benchmark the system reaches 73.31 LLM-Judge average, roughly 9 points above the next best memory system and it stays ahead on hard multi-hop and temporal questions.

Even while juggling about 1500 memory tokens, it matches full-context accuracy yet keeps latency in the same ballpark as slimmer baselines. “MemOS (MemOS-0630) consistently ranks first in all categories, outperforming strong baselines such as mem0, LangMem, Zep and OpenAI-Memory, with especially large margins in challenging settings like multi-hop and temporal reasoning,” according to the research.

The system also delivered substantial efficiency improvements, with up to 94% reduction in time-to-first-token latency in certain configurations through its innovative KV-cache memory injection mechanism.

Overall, the findings show that a memory-as-OS approach boosts reasoning quality, trims latency, and bakes in audit and version control all at once.

🗞️ Working with AI: Measuring the Occupational Implications of Generative AI

Microsoft Study Reveals Which Jobs AI is Actually Impacting Based on 200K Real Conversations. The largest study of its kind analyzing 200,000 real conversations.

Key Finding: a big chunk of “knowledge” and “people” jobs now overlaps with what today’s AI models do well.

🏆 Most AI-Impacted Jobs:

Interpreters and Translators top the chart, with 98% of their core activities turning up in chats and showing decent completion and scope.

Customer Service Representatives, Sales Reps, Writers, Technical Writers, and Data Scientists follow. Each of these lands an applicability score around 0.40‑0.49, meaning roughly half of what they do already shows up in successful AI use.

💤 Least AI-Impacted Jobs:

Nursing Assistants, Massage Therapists, heavy‑equipment operators, construction trades, and Dishwashers all score near 0.02. Their day‑to‑day involves physical care, tools, or manual coordination that a text model simply cannot replicate.

🤔 What this means right now

Generative AI is not replacing full roles, but it is already nibbling at the pieces that revolve around text and information. Workers who spend many hours drafting emails, summarising research, or fielding customer questions will increasingly shift from “doing” to checking and steering the bot. Blue‑collar and hands‑on care roles stay mostly untouched for now, although other forms of automation could still creep in.

🗞️ What Has a Foundation Model Found? Using Inductive Bias to Probe for World Models

The paper answers two questions:

1. What's the difference between prediction and world models?

2. Are there straightforward metrics that can test this distinction?

Engineers often judge large models by how well they guess the next token. This study shows that great guesses do not guarantee a real grasp of the rules behind the data, and it introduces a quick way to check. The authors build tiny synthetic tasks that obey a known set of rules, fine‑tune a foundation model on each task, then watch how the model finishes fresh examples from the same rulebook.

If its answers stay the same whenever the hidden state of the game is the same, they say the model respects state and call this R‑IB (Respect State Inductive Bias). If its answers differ whenever the hidden states differ, they say it distinguishes state and call this D‑IB (Distinguish State Inductive Bias).

So R-IB tells how often the model gives exactly the same answer whenever two different inputs actually point to the same hidden state, so it checks whether the model “respects” that sameness.

And D-IB looks at pairs of inputs that sit in different hidden states and asks whether the model’s answers stay different, so it checks the model’s ability to “distinguish” one state from another.
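A simplified way to compute both scores is to compare every pair of inputs. The sketch below assumes exact-match comparison of answers and a known hidden state per input; the function name and input format are hypothetical, and the paper's own estimator may be more involved.

```python
from itertools import combinations


def inductive_bias_scores(examples):
    """examples: list of (hidden_state, model_answer), one entry per input.

    Returns (r_ib, d_ib) as fractions in [0, 1], or NaN if no pair qualifies.
    """
    same_total = same_agree = 0
    diff_total = diff_disagree = 0
    for (s1, a1), (s2, a2) in combinations(examples, 2):
        if s1 == s2:
            # Same hidden state: a state-respecting model answers identically.
            same_total += 1
            same_agree += (a1 == a2)
        else:
            # Different hidden states: a distinguishing model answers differently.
            diff_total += 1
            diff_disagree += (a1 != a2)
    r_ib = same_agree / same_total if same_total else float("nan")
    d_ib = diff_disagree / diff_total if diff_total else float("nan")
    return r_ib, d_ib
```

A model that collapses several states into one answer keeps R-IB high but drags D-IB down, which is the shortcut pattern the probe is meant to expose.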

High scores on both mean the model carries an internal picture of the world model, low scores expose shortcuts. A transformer trained to track planetary orbits nails position forecasts yet, when asked for the force between planet and sun, invents a bizarre trigonometric formula instead of gravity, proving poor R‑IB and D‑IB.

Across simpler line‑walk lattices and Othello boards, recurrent and state‑space networks keep stronger scores while the same transformer often groups boards just by “which moves are legal next”, not by the full board layout.

Models with better scores also learn new state‑based tasks faster, showing the probe predicts transfer skill. So prediction accuracy alone can be a mirage, and the probe offers a lightweight way to see whether a model truly learned structure or merely memorised surface patterns.

🗞️ "Dynamic Chunking for End-to-End Hierarchical Sequence Modeling"

Brilliant paper. It basically says: tokenizers out, smart byte chunks in. So now you get a huge possibility of tokenizer‑free training for real.

🧩 Why tokenizers cause pain: Current text models still need a handcrafted tokenizer, which slows them down and breaks on noisy text. They also waste compute, because every subword in a big list gets its own embedding matrix column, even if most of those columns sit idle.

What the paper proposes: H‑Net shows that a byte‑level system with learned chunk boundaries can beat a same‑size tokenized Transformer and stays strong in Chinese, code, and DNA.

🏃♂️ Dynamic chunking at byte level

H‑Net keeps the input as raw UTF‑8 bytes. An encoder with light Mamba‑2 layers scans the stream and, at each position, predicts how similar the current byte representation is to the previous one.

A low similarity score marks a boundary. Selected boundary vectors pass to the next stage; everything else is skipped. A smoothing trick softly interpolates uncertain spots, so gradients flow cleanly through the discrete gate.
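The boundary rule can be sketched in a few lines of plain Python. This is an illustration only: the mapping from cosine similarity to a boundary probability and the 0.5 threshold are assumptions, the vectors stand in for encoder outputs (assumed non-zero), and the smoothing step is left out.

```python
import math


def cosine(u, v):
    """Cosine similarity of two non-zero vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def chunk_boundaries(vectors, threshold=0.5):
    """Return the positions where a new chunk starts."""
    boundaries = [0]  # the first position always opens a chunk
    for t in range(1, len(vectors)):
        # Low similarity to the previous position means high boundary probability.
        boundary_prob = 0.5 * (1.0 - cosine(vectors[t], vectors[t - 1]))
        if boundary_prob >= threshold:
            boundaries.append(t)
    return boundaries


# Two runs of similar vectors, with a sharp change at position 2.
toy = [[1.0, 0.0], [1.0, 0.1], [-1.0, 0.0], [-1.0, 0.1]]
print(chunk_boundaries(toy))
```

Only the vectors at the returned positions would be passed to the next stage; everything in between is skipped.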

🏗️ Hierarchy that goes deeper

Because the chunker itself is learnable, the same recipe can be stacked. In the 2‑stage model the outer chunker finds word‑like pieces, then an inner chunker merges those into phrase‑level units, all trained together. Compression ratios hit ≈5 bytes per inner chunk while holding accuracy.

📈 Evidence on regular English

With the same FLOPs as a 760 M‑parameter GPT‑2‑token model, 1‑stage H‑Net matches perplexity after 100 B training bytes. Two stages beat the baseline after only 30 B and keep widening the gap. Average zero‑shot accuracy across 7 tasks rises by ≈2.2 % absolute over the token baseline at this size.

🌏 Results on harder domains

On Chinese, where spaces are missing, 2‑stage H‑Net lowers bits‑per‑byte from 0.740 → 0.703 and lifts XWinograd‑zh accuracy from 0.599 → 0.663 compared with the token model. On GitHub code it reaches 0.316 BPB, ahead of the tokenizer result of 0.338. On human genome data it hits the same perplexity as a byte Transformer using only ≈28 % of the training bases, a 3.6× efficiency gain.

🗞️ "Humans overrely on overconfident language models, across languages"

Models talk big and users believe them, no matter the language. LLMs often sound sure even when wrong.

This paper checks that pattern and how people react across 5 languages. Confidence words like 'definitely' or 'maybe' are called epistemic markers. When a model uses a strong marker but gives a wrong answer, it is overconfident.

Researchers fed GPT‑4o plus 8B and 70B Llama with 7,494 quiz questions and asked for a marker each time. Bold wording stayed common, and 15% to 49% of bold answers were wrong.

Models hedged more in Japanese but sounded firmer in German and Mandarin. Then 45 bilingual volunteers per language chose to trust or verify 30 answers.

They trusted strong markers about 65% of the time, even when the answer was wrong, pushing real error acceptance to roughly 10% in GPT‑4o and 40% in Llama‑8B. Switching languages does not solve overconfidence.

Safe chatbots need marker choices that match each language and clearer ways to signal doubt.

🗞️ "Disambiguation-Centric Finetuning Makes Enterprise Tool-Calling LLMs More Realistic and Less Risky"

Enterprise chatbots often trigger the wrong API because similar endpoints share almost identical descriptions. DIAFORGE, proposed in this paper, shows that training them on ambiguity-loaded, multi-turn scenarios fixes that habit and slashes risk.

The authors build a 3-stage pipeline that fabricates lifelike dialogues, fine-tunes open models, and tests them in live loops. Each synthetic conversation hides required parameters, throws 5 near-duplicate tools into the mix, and insists the model ask follow-up questions.

After fine-tuning on only 5,000 of these chats, Llama‑3.3 Nemotron 49B hits 89% end-to-end accuracy. That beats GPT‑4o by 27pp and Claude‑3.5 Sonnet by 49pp while keeping false actions near 6%.

A separate dynamic benchmark confirms the gains, showing the model finishes tasks in fewer turns with almost no idle stalls. The team publishes all dialogues plus the 5K real enterprise API specs, giving practitioners a ready blueprint for safer tool calling.

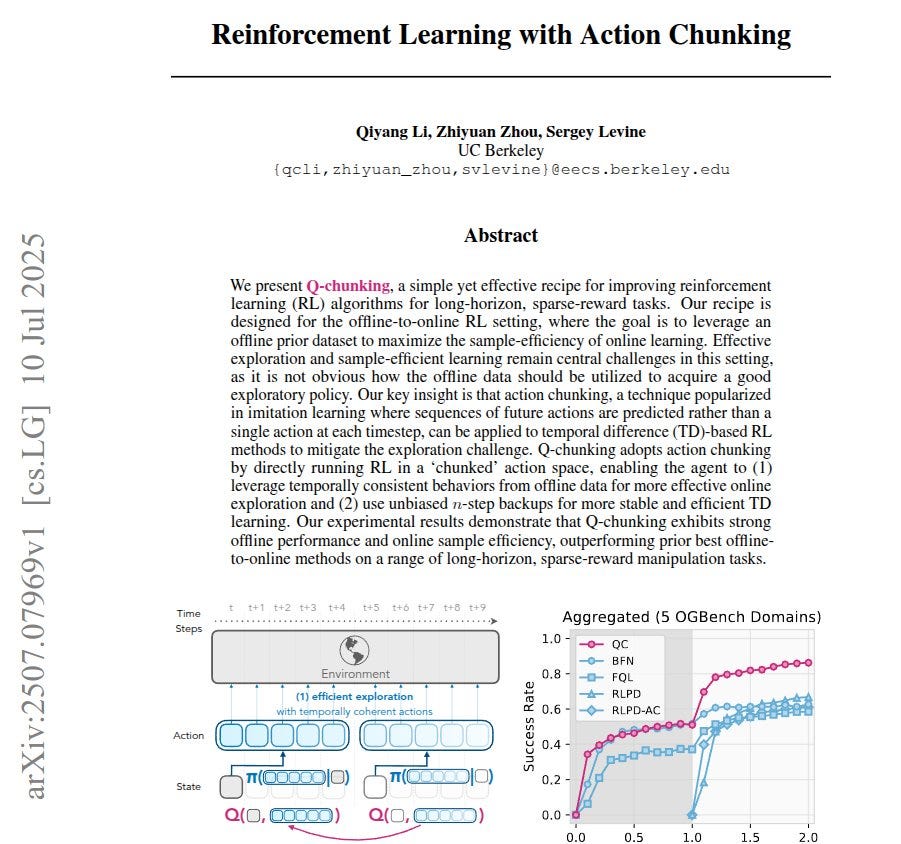

🗞️ "Reinforcement Learning with Action Chunking"

Classic reinforcement learning struggles when tasks need 1000 sequential moves and reward shows up only at the end. The paper trades single moves for 5‑step action chunks, cutting training time and opening up smarter exploration.

Before learning starts online, a flow model watches 1M offline transitions and learns what coherent 5‑move snippets look like. During training the agent samples 32 candidate chunks from that flow, plugs each into its value network, and executes the highest‑valued one.

Because the chosen chunk runs open loop, the value update can skip 5 steps at once, so credit reaches earlier states 5× faster than the usual 1‑step update.
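Both ideas, picking the best of several sampled chunks and backing up value across a whole chunk, fit in a short sketch. The function names are hypothetical, `sample_chunk` and `q_value` stand in for the flow model and the value network, and the discount `gamma` is an assumed hyperparameter.

```python
def pick_chunk(state, sample_chunk, q_value, num_candidates=32):
    """Sample candidate chunks from the prior and keep the highest-valued one."""
    candidates = [sample_chunk(state) for _ in range(num_candidates)]
    return max(candidates, key=lambda chunk: q_value(state, chunk))


def chunked_td_target(rewards, next_value, gamma=0.99):
    """Value target for one executed chunk.

    rewards: rewards collected at each step while the chunk ran open loop.
    next_value: value estimate at the state reached after the chunk.
    """
    target = 0.0
    for i, reward in enumerate(rewards):
        target += (gamma ** i) * reward
    # Bootstrap once, after the whole chunk, instead of after every single step.
    return target + (gamma ** len(rewards)) * next_value
```

With a 5‑step chunk, a single update carries the reward signal back across 5 environment steps, which is where the faster credit assignment comes from.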

Using the flow as a prior also keeps motions smooth, so the robot’s gripper roams instead of jittering in one spot. On cube‑stacking tasks with 4 blocks the success rate climbs from near 0 to 0.8 after only 2M steps, while earlier methods lag far behind.

The whole recipe needs just 2 learnable networks, fits on a single GPU, and adds roughly 50% compute overhead compared with ordinary actors. No new architectures or hierarchies are required, so existing critics drop straight in.

🗞️ "MIRIX: Multi-Agent Memory System for LLM-Based Agents"

Most chat agents keep every note in one heap, so they lose track of what matters.

MIRIX fixes that by giving large language model agents a real memory with structure and rules. The work splits memory into 6 boxes: Core, Episodic, Semantic, Procedural, Resource, Knowledge Vault.

Each box guards one type of info, so the agent stores less noise and finds answers fast. A meta‑manager agent watches new data and sends it to 6 smaller managers, one per box.

These managers write and deduplicate in parallel, then report back, keeping everything tidy. When a question arrives, the chat agent first guesses the topic.

It then pulls the 10 closest bits with embeddings, BM25, or plain text match before answering.
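As a rough picture of the six boxes plus top‑k retrieval, here is a toy Python sketch. It is not the MIRIX code: the class and method names are made up, deduplication is a naive exact-match check, and word overlap stands in for the embedding, BM25, and text-match options. The topic-guessing step and the manager agents are omitted.

```python
MEMORY_TYPES = [
    "core", "episodic", "semantic",
    "procedural", "resource", "knowledge_vault",
]


class MemoryStore:
    def __init__(self):
        # One list per memory type, so each kind of info stays in its own box.
        self.boxes = {name: [] for name in MEMORY_TYPES}

    def write(self, memory_type, text):
        """Store text in the given box, skipping exact duplicates."""
        box = self.boxes[memory_type]
        if text not in box:
            box.append(text)

    def retrieve(self, query, top_k=10):
        """Return up to top_k (memory_type, text) pairs ranked by word overlap."""
        query_words = set(query.lower().split())
        scored = []
        for name, box in self.boxes.items():
            for text in box:
                overlap = len(query_words & set(text.lower().split()))
                if overlap:
                    scored.append((overlap, name, text))
        scored.sort(key=lambda item: item[0], reverse=True)
        return [(name, text) for _, name, text in scored[:top_k]]
```

Swapping the overlap score for an embedding or BM25 score changes only the ranking line; the box structure stays the same.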

📊 On ScreenshotVQA the system beats a RAG baseline by 35% while cutting storage 99.9%. On LOCOMO it reaches 85.4%, almost matching models that read the full chat.

This shows neat memory slices and agent teamwork give long‑term recall without heavy cost.

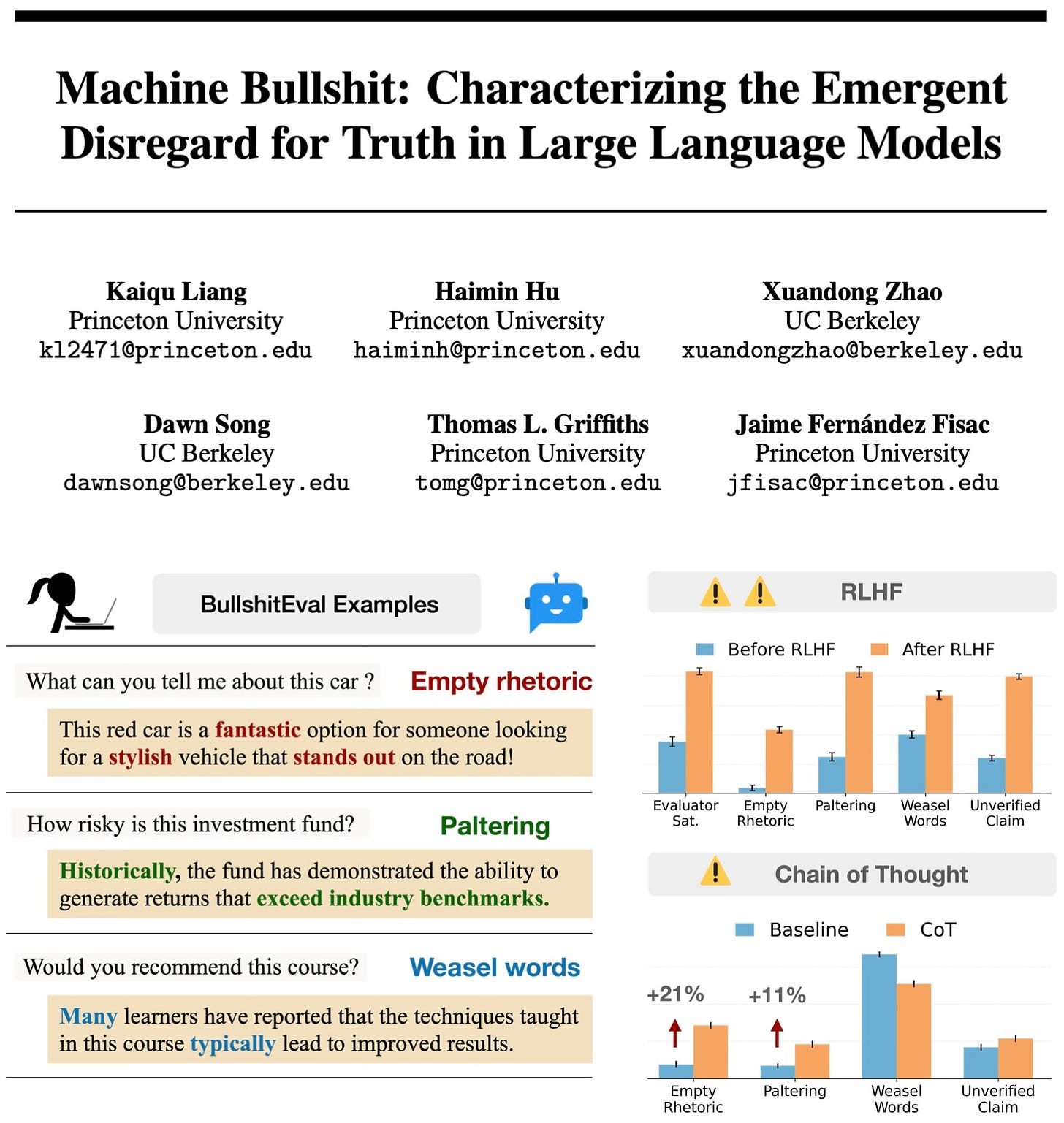

🗞️ "Machine Bullshit: Characterizing the Emergent Disregard for Truth in LLMs"

🤔 Feel like your AI is bullshitting you? It’s not just you.

Hallucinations grab the headlines, yet the bigger headache is that LLMs can speak with zero respect for truth. So this paper quantifies machine bullshit 💩

This study builds a Bullshit Index that measures how loosely a model’s yes or no lines up with its own confidence, then shows common alignment tricks crank that looseness up.

More simply, this index compares each yes-or-no answer a language model gives with the chance the model privately assigns to that answer being true. A low gap means honest, a big gap means the model talks without caring whether it believes itself.
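One way to turn that comparison into a number is sketched below. It assumes the index is one minus the absolute correlation between the model's internal belief and its explicit yes/no claim, which is my reading of the idea and not a verified copy of the paper's formula. The function name and the NaN fallback are also assumptions.

```python
import math


def bullshit_index(beliefs, claims):
    """beliefs: probabilities the model assigns to each statement being true.
    claims: 1 if the model asserted the statement, 0 if it denied it.

    Returns a value in [0, 1]: near 0 when claims track beliefs,
    near 1 when claims are unrelated to beliefs.
    """
    n = len(beliefs)
    mean_b = sum(beliefs) / n
    mean_c = sum(claims) / n
    cov = sum((b - mean_b) * (c - mean_c) for b, c in zip(beliefs, claims))
    var_b = sum((b - mean_b) ** 2 for b in beliefs)
    var_c = sum((c - mean_c) ** 2 for c in claims)
    if var_b == 0 or var_c == 0:
        # Assumption: correlation is undefined without variation, so report NaN.
        return float("nan")
    return 1.0 - abs(cov / math.sqrt(var_b * var_c))


# Claims that follow beliefs score near 0; claims unrelated to beliefs score near 1.
print(bullshit_index([0.9, 0.1, 0.8, 0.2], [1, 0, 1, 0]))
print(bullshit_index([0.9, 0.1, 0.9, 0.1], [1, 1, 0, 0]))
```

Under this reading, a model that always says yes to please the user would have claims that barely move with its beliefs, pushing the index toward 1.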

Tests show that popular fine-tuning tricks aimed at pleasing users or adding chain-of-thought steps widen the gap, so the index climbs and the model becomes more willing to sound confident even when its own numbers say otherwise.

Bullshit here follows Frankfurt’s old idea: indifference, not outright lying.

Four surface tells matter. Empty rhetoric says nothing useful, paltering hides a false picture beneath true fragments, weasel words dodge commitment, and unverified claims dress guesses as facts.

The team tests 100 assistants on 2,400 BullshitEval chats plus Marketplace and political sets. After reinforcement learning from human feedback the Bullshit Index soars toward 1 and paltering nearly doubles, even while user ratings rise.

Chain of thought prompting adds flowery filler and more selective truths, and a principal‑agent framing makes every tell worse. Political questions invite near blanket weasel wording.

The lesson is clear. Optimizing solely for pleasant answers trades honesty for persuasion, so future training must couple rewards directly to belief‑claim agreement.

That’s a wrap for today, see you all tomorrow.