Read time: 13 min


(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡Top Papers of last week (11-Jan-2026):

🗞️ Extracting books from production language models

🗞️ Accelerating Scientific Discovery with Autonomous Goal-evolving Agents

🗞️ Logical Phase Transitions: Understanding Collapse in LLM Logical Reasoning

🗞️ From Failure to Mastery: Generating Hard Samples for Tool-use Agents

🗞️ DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning

🗞️ Let’s (not) just put things in Context: Test-Time Training for Long-Context LLMs

🗞️ Let It Flow: Agentic Crafting on Rock and Roll, Building the ROME Model within an Open Agentic Learning Ecosystem

🗞️ Youtu-Agent: Scaling Agent Productivity with Automated Generation and Hybrid Policy Optimization

🗞️ K-EXAONE Technical Report

🗞️ Can LLMs Predict Their Own Failures? Self-Awareness via Internal Circuits

🗞️ Extracting books from production language models

New Stanford paper shows production LLMs can leak near exact book text, with Claude 3.7 Sonnet hitting 95.8%. The big deal is that many companies and courts assume production LLMs are safe because they have filters, refusals, and safety layers that stop copying.

This paper directly tests that assumption and shows it is false in multiple real systems. The authors are not guessing or theorizing, they actually pull long, near exact book passages out of models people use today.

A production LLM is the kind people use through a company app, and it can memorize chunks of books from the text used to teach it. The authors test leakage by giving a book’s opening words, asking for the next lines, then repeating short follow ups until the model stops.

When the model refuses, they try many small wording changes and keep the first version that continues the text. They run this on 4 production systems across 13 books, and they score the output with near-verbatim recall, a metric that only counts long, continuous matches. That matters because safety filters, meaning built-in rules that try to block copying, can still miss memorized passages in normal use.
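To make "only counts long, continuous matches" concrete, here is a minimal sketch of that style of scoring. It is not the paper's metric: the function name `long_match_coverage`, the word-level comparison, and the 5-word cutoff are all assumptions chosen for illustration.

```python
from difflib import SequenceMatcher


def long_match_coverage(reference: str, generated: str, min_words: int = 5) -> float:
    """Fraction of reference words covered by long contiguous matches.

    Hypothetical stand-in for a near-verbatim recall score: only matching
    runs of at least `min_words` words count, so scattered short overlaps
    (common words and phrases) contribute nothing.
    """
    ref_words = reference.split()
    gen_words = generated.split()
    if not ref_words:
        return 0.0
    matcher = SequenceMatcher(None, ref_words, gen_words, autojunk=False)
    covered = sum(
        block.size
        for block in matcher.get_matching_blocks()
        if block.size >= min_words
    )
    return covered / len(ref_words)
```

A model that reproduces a long run of the book scores high, while one that only shares isolated words with it scores zero, which is the property the authors rely on.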

🗞️ Accelerating Scientific Discovery with Autonomous Goal-evolving Agents

SAGA is a system where an LLM keeps rewriting the score function during a scientific search, so the search stops chasing fake wins. The big deal is that most “AI for discovery” work assumes humans can write the right objective up front, but in real science that objective is usually a shaky proxy, so optimizers learn to game it and return junk that looks good on the proxy.

This paper’s core proposal is to automate that missing human loop, where people look at bad candidates, notice what is wrong, then add or change constraints and weights until the search produces useful stuff. SAGA does this with 2 loops, an outer loop where LLM agents analyze results, propose new objectives, and turn them into computable scoring functions, and an inner loop that optimizes candidates under the current score.

Why it matters is that this turns “objective design” from manual trial and error into something the system can do repeatedly, which is exactly where reward hacking usually sneaks in. SAGA watches the results, has the LLM suggest new rules the design must meet, turns those rules into a score the computer can calculate, and runs the search again.
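The two-loop idea can be sketched with a toy search. Everything here is an assumption for illustration: the candidates are plain numbers, the `critic` function is a hard-coded rule standing in for the LLM analysis step, and the "toxic above 10" constraint is invented. It is not SAGA's code.

```python
import random
from typing import Callable, List

Objective = Callable[[float], float]


def combine(terms: List[Objective]) -> Objective:
    """Sum the current objective terms into one computable score."""
    frozen = tuple(terms)
    return lambda x: sum(term(x) for term in frozen)


def inner_optimize(score: Objective, rng: random.Random, steps: int = 200) -> float:
    """Inner loop: plain hill climbing under the current score function."""
    best = 0.0
    for _ in range(steps):
        candidate = best + rng.uniform(-1.0, 1.0)
        if score(candidate) > score(best):
            best = candidate
    return best


def critic(candidate: float) -> List[Objective]:
    """Stand-in for the LLM analysis step (hypothetical rule, not from the paper).

    It notices that values above 10 are bad, something the initial proxy
    ignores, and returns a new penalty term to add to the objective.
    """
    if candidate > 10.0:
        return [lambda x: -5.0 * max(0.0, x - 10.0)]
    return []


def evolve_goals(rounds: int = 3, seed: int = 0) -> List[float]:
    """Outer loop: optimize, inspect the winner, revise the objective, repeat."""
    rng = random.Random(seed)
    terms: List[Objective] = [lambda x: x]  # initial proxy: "bigger is better"
    history = []
    for _ in range(rounds):
        best = inner_optimize(combine(terms), rng)
        history.append(best)
        terms.extend(critic(best))
    return history
```

In the first round the optimizer games the naive proxy and runs far past 10. Once the critic adds the penalty, later rounds settle near the constraint boundary instead.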

The authors tested it on antibiotic molecules, new inorganic materials checked with Density Functional Theory, a physics simulation for material stability, DNA enhancers that boost gene activity, and chemical process plans, with both human-guided and fully automatic runs. Across tasks, the evolving goals reduced score tricks, and in the DNA enhancer task it gave nearly 50% improvement over the best earlier method.

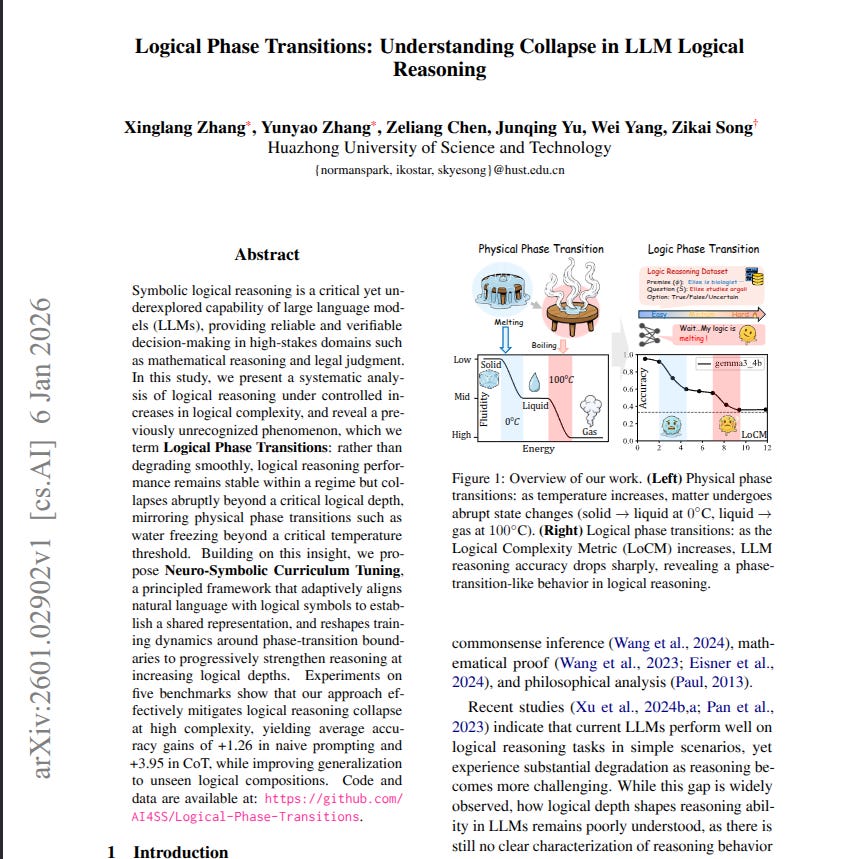

🗞️ Logical Phase Transitions: Understanding Collapse in LLM Logical Reasoning

The paper shows LLM logic does not fade slowly as problems get harder. It snaps past a complexity threshold, and the authors propose training that softens that snap.

Many tests assume accuracy drops smoothly as logic gets harder, but the authors find a sharp break where the model starts guessing. They create a Logical Complexity Metric, a single score for how hard the logic is, based on facts, nesting, and logic words like and, or, not, if then, all, some.
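As a rough picture of what a single complexity score built from facts, nesting, and logic words could look like, here is a toy version. The paper's actual formula is not given here, so the weights, the parenthesis-based nesting depth, and the function name `logical_complexity` are all assumptions.

```python
import re

# Logic words named in the summary above.
CONNECTIVES = ("and", "or", "not", "if", "then", "all", "some")


def logical_complexity(
    statement: str,
    num_facts: int,
    w_facts: float = 1.0,
    w_nest: float = 2.0,
    w_ops: float = 0.5,
) -> float:
    """Toy complexity score: weighted sum of facts, nesting depth, and connectives.

    The weights are hypothetical; they only illustrate the shape of the metric.
    """
    depth = 0
    max_depth = 0
    for ch in statement:
        if ch == "(":
            depth += 1
            max_depth = max(max_depth, depth)
        elif ch == ")":
            depth -= 1
    words = re.findall(r"[a-z]+", statement.lower())
    num_ops = sum(words.count(c) for c in CONNECTIVES)
    return w_facts * num_facts + w_nest * max_depth + w_ops * num_ops
```

Plotting accuracy against a score like this is what would expose the sharp break described above.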

When they compare accuracy to that score, it stays stable for a while, then crashes in narrow bands they call logical phase transitions, meaning sudden drops. They also build training data where each English statement is paired with a matching first order logic version, a strict symbol form for rules, so the model can connect meaning to rules.

They then do extra training with a complexity-aware curriculum, meaning training starts easy and moves harder while spending extra time near the crash bands. On 5 benchmarks, this approach improves average accuracy by about 1.26 with direct answers and by about 3.95 when the model writes its steps first, and it holds up better on new rule mixes. This matters because it gives a practical way to tell when an LLM is near its reasoning limit and to strengthen it.
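A complexity-aware curriculum of this kind can be sketched in a few lines. This is an illustration under assumptions, not the authors' training code: `build_curriculum`, the band boundaries, and the repeat count are hypothetical.

```python
from typing import List, Tuple


def build_curriculum(
    examples: List[Tuple[str, float]],
    crash_bands: List[Tuple[float, float]],
    band_repeats: int = 3,
) -> List[str]:
    """Order examples easy-to-hard, repeating those that fall inside a crash band.

    `examples` holds (text, complexity score) pairs; `crash_bands` holds
    (low, high) score ranges where accuracy was observed to collapse.
    """
    schedule: List[str] = []
    for text, complexity in sorted(examples, key=lambda item: item[1]):
        in_band = any(low <= complexity <= high for low, high in crash_bands)
        schedule.extend([text] * (band_repeats if in_band else 1))
    return schedule
```

The schedule still runs from easy to hard, but examples near a collapse point show up more often, which is the "extra time near the crash bands" part.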

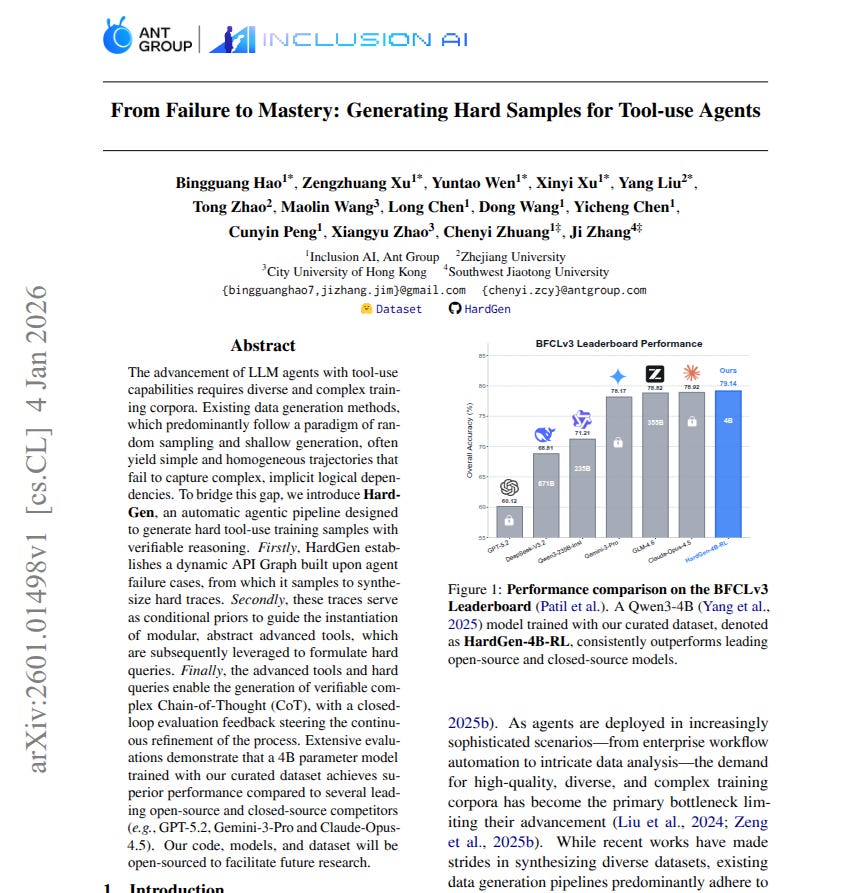

🗞️ From Failure to Mastery: Generating Hard Samples for Tool-use Agents

This paper shows that training on mistakes, not perfect demos, can make tool using LLM agents much stronger. HardGen turns tool use failures into hard training data, letting a 4B LLM agent compete with much bigger models.

An LLM is a text model that predicts the next words, and tool use means it sends a structured tool command to an application programming interface (API) and uses the result. Most synthetic datasets only show easy, fully spelled out steps, so the model never learns the hidden links between tool calls.

HardGen starts by testing an agent, collecting the tools it fails on, and building a living graph of which tools depend on which. It samples a valid tool trace from that graph, then rewrites the trace into an advanced tool description that hides the middle steps.
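Sampling a valid trace from a dependency graph can be pictured like this. It is a minimal sketch, assuming the graph is acyclic and stored as a plain dict; the tool names and the `valid_trace` helper are made up for the example and are not HardGen's code.

```python
from typing import Dict, List


def valid_trace(deps: Dict[str, List[str]], target: str) -> List[str]:
    """Return a call order for `target` in which every tool follows its prerequisites.

    `deps` maps each tool to the tools whose output it needs (assumed acyclic).
    """
    order: List[str] = []
    seen = set()

    def visit(tool: str) -> None:
        if tool in seen:
            return
        seen.add(tool)
        for prerequisite in deps.get(tool, []):
            visit(prerequisite)
        order.append(tool)  # appended only after all prerequisites

    visit(target)
    return order


# Hypothetical tool graph for illustration.
TOOL_DEPS = {
    "book_flight": ["search_flights", "get_user"],
    "search_flights": ["get_airport_code"],
    "get_user": [],
    "get_airport_code": [],
}
```

A hard query would then mention only the final goal (booking the flight) and leave the agent to work out the intermediate calls on its own.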

A hard query is written to match that description, and a reasoner plus verifier loop runs tools, spots mistakes, and gives hints to fix them. Using 2,095 APIs, they generated 27,000 verified conversations, trained Qwen3 4B, and got 79.14% on BFCLv3, a tough function calling test for tool commands. The result suggests agents improve faster when training targets real failure patterns, so small models can handle multi turn tool plans.

🗞️ DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning

DeepSeek-R1’s famous paper was updated 2 days ago, expanding from 22 pages to 86 pages and adding a substantial amount of detail, mainly in the following areas.

It adds a much clearer training story with 3 checkpoints: Dev1, Dev2, and Dev3. You see what each stage teaches, from basic instruction following, to reasoning gains from RL, to final polish.

It explains the RL setup in more detail. The authors spell out reward rules for correctness, safety, and tidy language.

Benchmarks are much broader. Beyond math and coding, it now covers general knowledge sets like MMLU and GPQA, plus human baselines, so results feel less cherry picked.

There is analysis of how the model “self evolves” as it trains, for example more reflective steps and better error checks over time, plus many visuals and long appendices with data and infra details.

🗞️ Let’s (not) just put things in Context: Test-Time Training for Long-Context LLMs

New Meta paper shows long context large language models miss buried facts, and fixes it with query-only test-time training (qTTT). On LongBench-v2 and ZeroScrolls, it lifts Qwen3-4B by 12.6 and 14.1 points on average.

A large language model predicts the next token, meaning a small chunk of text, using the text it already saw. It decides what to pay attention to with self-attention, where a query searches earlier keys and pulls their values.

With very long prompts, many tokens look similar, so the real clue gets drowned out and the model misses it. The authors call this score dilution, and the benefit of extra thinking tokens fades quickly because the attention mechanism itself never changes.

qTTT fixes that by caching the keys and values once, then doing small training steps that change only the weights that make queries. Those steps push queries toward the right clue and away from distractors, so the model puts its attention on the useful lines. So for long context, a little context-specific training while answering beats spending the same extra work on more tokens.
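Here is a toy, single-head picture of the mechanics: keys stay cached and frozen, and gradient steps touch only the query weights. The training signal used here (a known target index) is an assumption made purely so the example is self-contained; the summary above does not describe the paper's actual loss, and names like `query_only_step` are hypothetical.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[Vector]


def softmax(scores: Vector) -> Vector:
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def attention_weights(w_q: Matrix, x: Vector, keys: Matrix) -> Vector:
    """Attention over cached keys for the query produced by w_q @ x."""
    return softmax(matvec(keys, matvec(w_q, x)))


def query_only_step(w_q: Matrix, x: Vector, keys: Matrix, target: int, lr: float = 0.5) -> Matrix:
    """One gradient step on -log p[target]; only the query weights change."""
    probs = attention_weights(w_q, x, keys)
    # d loss / d scores = probs - onehot(target)
    d_scores = [p - (1.0 if i == target else 0.0) for i, p in enumerate(probs)]
    # d loss / d query = keys^T @ d_scores
    d_query = [
        sum(keys[i][j] * d_scores[i] for i in range(len(keys)))
        for j in range(len(keys[0]))
    ]
    # d loss / d w_q = outer(d_query, x)
    return [
        [w_q[r][c] - lr * d_query[r] * x[c] for c in range(len(x))]
        for r in range(len(w_q))
    ]


# Cached keys: index 0 is the real clue, the rest are look-alike distractors.
KEYS = [[1.0, 0.0], [0.9, 0.1], [0.9, -0.1], [0.8, 0.2]]
```

Starting from an identity `w_q`, attention on the clue is diluted across the similar keys. A handful of `query_only_step` calls raises its share without recomputing or modifying `KEYS`.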

🗞️ Let It Flow: Agentic Crafting on Rock and Roll, Building the ROME Model within an Open Agentic Learning Ecosystem

This paper shows how to train AI agents to reliably use real tools, fix their own mistakes, and finish long tasks instead of stopping early. They prove that when you train agents inside real sandboxes and reward whole actions instead of tiny text tokens, a smaller open model can perform like much larger models on real world tasks.

ROME is an open agent model trained on tool runs, so it keeps going when tasks get messy. Their Agentic Learning Ecosystem, called ALE, combines ROCK for locked down sandboxes, ROLL for training, and iFlow CLI for packing the right context each step.

They generate more than 1M trajectories, full logs of actions and observations, and train ROME to plan, act, check, and retry inside tools. Their IPA method uses reinforcement learning, training by trial and scoring, and it rewards whole interaction chunks so long tasks do not fall apart.

They test on terminal and repository bug fix tasks and add Terminal Bench Pro, a bigger command line test set that tries to avoid leaked answers. ROME reaches 57.40% on SWE bench Verified, a GitHub bug report fixing test, which shows open stacks can rival much larger models.

🗞️ Youtu-Agent: Scaling Agent Productivity with Automated Generation and Hybrid Policy Optimization

New Tencent paper proposes Youtu-Agent - an LLM agent that can write its own tools, then learn from its own runs. Its auto tool builder wrote working new tools over 81% of the time, cutting a lot of hand work.

Building an agent, a chatbot that can use tools like a browser, normally means picking tools, writing glue code, and crafting prompts, the instruction text the LLM reads, and it may not adapt later unless the LLM is retrained. This paper makes setup reusable by splitting things into environment, tools, and a context manager, a memory helper that keeps only important recent info.

It can then generate a full agent setup from a task request, using a Workflow pipeline for standard tasks or a Meta-Agent that can ask questions and write missing tools. They tested on web browsing and reasoning benchmarks, report 72.8% on GAIA, and show 2 upgrade paths: Practice saves lessons as extra context without retraining, and reinforcement learning trains the agent with rewards. The big win is faster agent building plus steady improvement, without starting over every time the tools or tasks change.

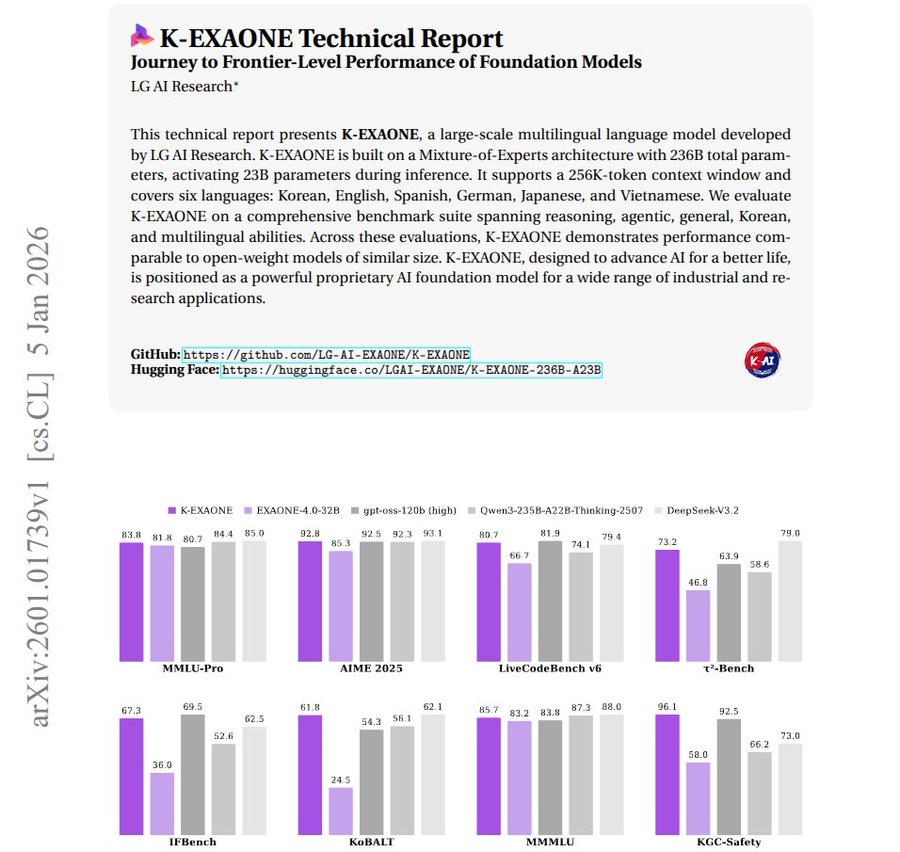

🗞️ K-EXAONE Technical Report

A new 236B MoE model from LG AI Research is here, running 23B active parameters with a 256K context window and 6-language capability. It combines hybrid attention with Multi-Token Prediction for a 1.5x speed boost, performing on par with open-weight models of comparable scale.

Training frontier-sized LLMs needs huge amounts of AI chips and data centers, and Korea has had less of both. To get scale without paying full cost, K-EXAONE uses a Mixture of Experts design with 236B total parameters but activates only about 23B per token (a token is a small chunk of text).
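The "big model, small active slice" trick comes from routing each token to only a few experts. Below is a generic top-k routing sketch. K-EXAONE's actual router is not described here, so the expert functions, gate values, and `k=2` are assumptions for illustration.

```python
import math
from typing import Callable, List, Sequence

Expert = Callable[[float], float]


def route_top_k(gate_logits: Sequence[float], k: int) -> List[int]:
    """Indices of the k experts with the highest gate scores."""
    ranked = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)
    return ranked[:k]


def moe_forward(x: float, experts: Sequence[Expert], gate_logits: Sequence[float], k: int = 2) -> float:
    """Run only the top-k experts and mix their outputs by renormalized gate weight."""
    chosen = route_top_k(gate_logits, k)
    weights = [math.exp(gate_logits[i]) for i in chosen]
    total = sum(weights)
    return sum(w / total * experts[i](x) for w, i in zip(weights, chosen))


# Share of parameters active per token, using the figures quoted above.
ACTIVE_FRACTION = 23 / 236  # a bit under 10%
```

The unchosen experts are never evaluated, which is why per-token compute tracks the active count rather than the total.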

It can read up to 256K tokens at once by using a mixed setup that focuses on nearby text and still tracks far away text. The authors then checked it on standard tests for reasoning, math, coding, tool use, Korean, other languages, long input recall, and safety.

They report performance comparable to open weight models in the same size range, while supporting 6 languages: Korean, English, Spanish, German, Japanese, and Vietnamese. This matters because it shows a way to scale a big, multilingual, long context LLM without needing extreme compute.

🗞️ Can LLMs Predict Their Own Failures? Self-Awareness via Internal Circuits

This paper shows LLMs can predict their own mistakes by looking at internal activity while answering. It adds about 5M parameters and often beats 8B external judge models at spotting wrong outputs.

The starting problem is that these models can sound confident even when the answer is wrong, which breaks trust. Common fixes ask another model to grade the answer, or they generate many answers and check agreement, and both cost more compute.

Gnosis instead watches hidden states, the internal numbers that carry the model’s context, and attention maps, which show what it is focusing on. It compresses those signals into fixed size summaries, so the extra work barely changes with answer length, and the main model stays unchanged.

A small scoring head is trained from automatically checked correct or wrong answers, and it can flag failures after about 40% of the output so a system can stop early or switch models. The same head can judge larger sibling models in the same family without extra retraining.
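The "fixed size summary plus small head" idea can be sketched as follows. Gnosis itself compresses hidden states and attention maps with a learned module; the mean pooling, the logistic head, and the function names below are simplified placeholders, not the paper's architecture.

```python
import math
from typing import List, Sequence


def pool_hidden_states(states: Sequence[Sequence[float]]) -> List[float]:
    """Mean-pool a variable-length run of hidden vectors into one fixed-size summary."""
    dim = len(states[0])
    return [sum(s[d] for s in states) / len(states) for d in range(dim)]


def failure_probability(summary: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Tiny logistic scoring head (placeholder for the trained head)."""
    logit = sum(w * v for w, v in zip(weights, summary)) + bias
    return 1.0 / (1.0 + math.exp(-logit))


def should_stop_early(
    states: Sequence[Sequence[float]],
    weights: Sequence[float],
    bias: float,
    fraction: float = 0.4,
    threshold: float = 0.5,
) -> bool:
    """Score only the first `fraction` of the output's hidden states."""
    prefix_len = max(1, int(len(states) * fraction))
    summary = pool_hidden_states(states[:prefix_len])
    return failure_probability(summary, weights, bias) > threshold
```

Because the summary has a fixed size, the head's cost does not grow with answer length, and a caller can act on `should_stop_early` well before generation finishes.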

That’s a wrap for today, see you all tomorrow.