Read time: 13 min

📚 Browse past editions here.

( I publish this newletter daily. Noise-free, actionable, applied-AI developments only).

⚡In today’s Edition (21-Jun-2025):

🥉 Your Brain on ChatGPT: Accumulation of Cognitive Debt when Using an AI Assistant for Essay Writing Task

🏆 "All is Not Lost: LLM Recovery without Checkpoints"

📡 "Because they have LLMs, they Can and Should Pursue Agentic Interpretability"

🛠️ Eliciting Reasoning in Language Models with Cognitive Tools

🗞️ Embodied Web Agents: Bridging Physical-Digital Realms for Integrated Agent Intelligence

🗞️ "Essential-Web v1.0: 24T tokens of organized web data"

🗞️ "Is your batch size the problem? Revisiting the Adam-SGD gap in language modeling"

🗞️ LiveCodeBench Pro: How Do Olympiad Medalists Judge LLMs in Competitive Programming?

🗞️ "Massive Supervised Fine-tuning Experiments Reveal How Data, Layer, and Training Factors Shape LLM Alignment Quality"

🥉 Your Brain on ChatGPT: Accumulation of Cognitive Debt when Using an AI Assistant for Essay Writing Task

A new MIT study just scanned the brains of ChatGPT users.

And what they found confirms our worst fears about AI.

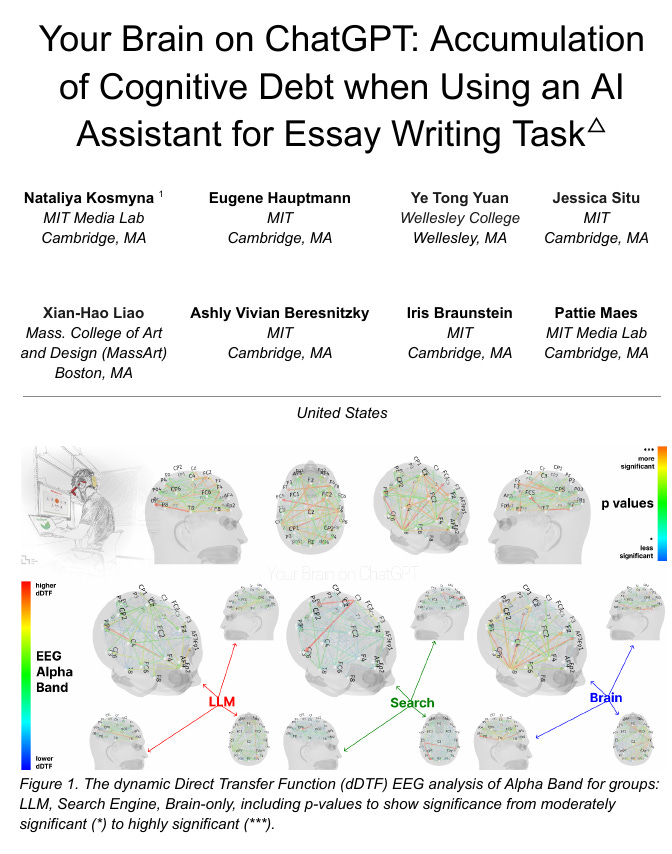

ChatGPT slashes typing time, yet the shortcut builds up cognitive debt. Fifty-four students wrote SAT-style essays under three conditions: ChatGPT only, Google Search only, or brain only, across three sessions; a fourth session swapped the tools to test after-effects . Electroencephalography recorded 32-channel activity, Natural Language Processing measured essay content, and interviews captured subjective load.

The brain-only group displayed the strongest delta and theta connectivity, signalling richer internal processing, while the ChatGPT group showed the weakest networks; search sat in the middle . When ChatGPT users had to write without help, their alpha and beta links stayed muted and quoting accuracy fell, evidence that early reliance eroded memory consolidation . By contrast, students who first wrote unaided and then adopted ChatGPT engaged broader networks and recalled content easily.

The authors warn that frequent AI assistance encourages shallow repetition and narrower idea sets, echoing online echo-chamber effects . They propose delaying tool access until learners build foundational thinking skills and teaching explicit strategies for strategic, sparing use. The work positions AI as a supplement that should follow, not replace, self-driven reasoning, aiming to protect critical inquiry while still leveraging efficiency gains.

🏆 "All is Not Lost: LLM Recovery without Checkpoints"

Original Problem 🤔:

When a pipeline stage crashes during LLM training on spot GPUs, teams fall back on bulky checkpoints or redundant copies, which drag down throughput.

Solution 🚀:

In this paper, they propose, CheckFree, an efficient recovery method where a failing stage is substituted by a weighted average of the closest neighboring stages.

CheckFree looks at the two layers sitting on either side of the broken one. It blends the numbers from those layers to guess the missing layer, and it decides the blend ratio by checking which neighbour has been learning faster. Passing those two ratio values is the only extra data it needs, so no big files move around.

CheckFree+ gives the second layer and the second-last layer extra practice so they can behave like the very first or very last layer. If either edge layer crashes, the trained neighbour is ready; the system simply copies its numbers in place and keeps training.

Results 📊:

→ Converges 12% faster wall-clock than checkpointing or redundant compute at 5–10% failure rates.

→ Keeps validation loss stable when failure chance rises to 16%.

→ On 1.5B LLaMa, perplexity matches redundant compute across 4 corpora while training quicker.

→ Needs zero extra storage; CheckFree+ copies only small embedding layers.

📡 "Because they have LLMs, they Can and Should Pursue Agentic Interpretability"

Today’s LLMs can already teach, but most tools still treat them as silent black boxes. Agentic interpretability asks the model to model you and guide the chat toward clarity.

Rather than static heatmaps, it frames explanation as cooperative chat that prioritizes human learning.

Original Problem 🙂:

Developers struggle to grasp fast-evolving model behaviors; static probing leaves users behind.

Solution in this Paper 🚀:

Agentic interpretability lets an LLM infer a user knowledge graph, then proactively clarify, quiz, and adjust.

An agentic LLM keeps a running mental model of you. It notes each idea you seem to grasp and each point of confusion, storing them in a simple knowledge graph of facts and gaps.

With that map, the model stops waiting for precise prompts. It jumps in when a missing link appears, offers a short plain-language explanation, and checks whether the new node lands in your graph. This proactive help is a core trait of agentic interpretability.

To be sure the fix sticks, the model asks quick quiz-style questions. Your answers update the graph: correct replies strengthen a node, mistakes flag weak spots. The model then reshapes its next explanation, staying within your current zone of growth—similar to a human tutor who adjusts on the fly.

Through this loop of clarify → quiz → adjust, the LLM steadily aligns its teaching with what you actually need, rather than what it guesses you might want.

Traditional tools make users dig through static probes and fail to keep pace. This paper flips the process by turning the LLM into a proactive tutor that models the learner’s gaps.

It proposes a conversation loop: the model infers a live knowledge graph, offers bite-size explanations, quizzes to confirm uptake, and rewires its next turn to match the user’s zone of proximal development

Results 📊:

→ Defines core properties: proactive help, multi-turn chat, mutual mental models.

→ Contrasts agentic and inspective methods, noting completeness trade-offs.

→ Outlines evaluation ideas using user prediction tasks and end-task gains.

🛠️ Eliciting Reasoning in Language Models with Cognitive Tools

Tool-calling turns GPT-4.1 into a near-o1-preview without a single gradient step.

No retraining, just smarter prompts for near-RL performance. 🤯

pass@1 performance on AIME2024 from 26.7% to 43.3%, bringing it very close to the performance of o1-preview.

Swapping one prompt for four tools unlocks hidden reasoning in any LLM. 🤯

LLMs lean on fixed prompting tricks or reinforcement learning recipes that hide exactly how they think when solving hard questions.

The authors here swap that opacity for four tiny “cognitive tools” that the model calls on demand, and this modular move alone lifts math-reasoning accuracy by up to 27 % and almost matches an RL-trained o1-preview model.

Original Problem 😕:

Raw chains blur logic, hurting accuracy on multi-step math queries.

The phrase “raw chains” points to the usual way an LLM writes out its full reasoning as one long, uninterrupted chain of thoughts. In that mode, the model mixes retrieval of facts, short calculations, guesses, and final conclusions in a single stream. Because each new token depends on noisy context from every previous token, small slips in an early line can silently spread through later lines.

When a math question needs 4 – 6 precise steps—identify data, pick a formula, keep track of intermediate numbers, check units—this tangled sequence muddies which step produced which piece of information. The model can lose track of exact values or reuse a rounded figure too soon. That blurred logic makes the final numerical answer drift from the truth, so accuracy on multi-step contest problems drops.

Solution in this Paper 🛠️:

Embed four callable cognitive tools so the model orchestrates reasoning piece by piece. Modular reasoning is borrowed from cognitive psychology, where human problem-solving is seen as a sequence of small operations.

The paper equips a language model with four such operations, each wrapped as a callable tool, so reasoning unfolds as a tool-calling dialogue rather than a single chain of thought.

This framing lets the same model act first as planner, then critic, without rewriting its system prompt.

🛠️ The Tool Set

understand question extracts key objects, goals, and candidate theorems from the prompt.

recall related fetches solved analogues to seed the search.

examine answer reviews the current trace, hunts slips, and runs quick tests.

backtracking pinpoints the first shaky step and proposes an alternate path.

All four run inside the same model instance, so gradient knowledge stays in memory while context interference is cut down.

Results 📊:

→ GPT-4.1 AIME2024: 26.7 % → 43.3 %

→ Qwen2.5-32B average accuracy: 48.0 % → 58.9 %

→ Llama3.3-70B Smolbenchmark: 52.8 % → 80.0 %

🗞️ Embodied Web Agents: Bridging Physical-Digital Realms for Integrated Agent Intelligence

LLMs handle either web pages or robot arms, never both, so they miss tasks like cooking from an online recipe and then actually frying the egg. 🤔

This paper builds a single simulation plus benchmark where one agent clicks, walks, buys, chops, and posts within the same continuous mission.💡

Embodied Web Agents are LLM driven bots that read a recipe online, then control a virtual body to buy ingredients and cook, showing that web reasoning and physical action must share one brain. All data, codes and web environments are open-sourced.

They merge browser clicks and robot moves inside one simulator, letting researchers test if an agent can swap from scrolling for map directions to turning down the right street.

⚙️ The Core Concepts

Embodied Web Agents merge two skill sets: large language model reasoning over websites and embodied perception-action in 3-D worlds.

The agent keeps one internal state that records what it has read online and what it has just seen with its camera, letting it decide when to search a map site and when to turn left in the street scene.

🏗️ The Task Environments

Three linked arenas shape every mission.

Google-Street-View graphs give outdoor views where the agent moves node to node.

AI2-THOR kitchens supply indoor scenes with objects whose states update after every slice or cook step.

Custom React web pages expose maps, recipes, shops, and Wikipedia so the same agent can scroll and click.

The paper concludes that fluidly crossing the physical-digital border is still an open problem in AI. today’s models struggle to blend web reading with embodied action, so the authors build a benchmark that exposes those gaps and invites better integrated agents.

The bar chart on the right breaks down why agents fail while cooking. The tallest bar shows they often get stuck controlling their body in the kitchen scene.

🗞️ "Essential-Web v1.0: 24T tokens of organized web data"

Curating web data still relies on slow, bespoke pipelines; teams spend weeks training domain-specific classifiers before they can even start model pre-training.

So they just dropped A 24-trillion-token web dataset with document-level metadata just dropped on Huggingface

Original Problem 📎:

Open datasets lump billions of pages together without clear labels. Researchers who want, say, pure chemistry or medical text must write fresh scraping code, build new classifiers, and sift through terabytes to get a clean subset. This makes rigorous, transparent data curation expensive and out of reach for most labs.

Solution in this Paper 🛠️:

Essential-Web V1.0 releases 24 trillion tokens tagged by a 12-field taxonomy that covers topic, page type, content depth, and quality. Labels come from an open 0.5 b-parameter classifier distilled from Qwen-2.5-32B; the full crawl was annotated in 90 k GPU-hours. Anyone can now filter with a one-line SQL query instead of crafting a new pipeline.

Results 📊:

→ Math subset scores within 8 % of state-of-the-art while skipping bespoke math extraction.

→ Web-code split boosts code benchmarks by 14.3 % over leading public corpora.

→ STEM filter lifts MMLU-STEM accuracy by 24.5 % versus strong general baselines.

→ Medical slice improves QA scores by 8.6 % and beats the only open medical crawl.

🗞️ "Is your batch size the problem? Revisiting the Adam-SGD gap in language modeling"

Adam is default for LMs because SGD stumbles when batches grow.

This work proves the stumble comes from batch size, not optimizer magic. Tweak batch size and SGD jumps from laggard to leader.

Using a 160M transformer and 1.3 B tokens, the authors show SGD matches Adam at batch 64 (PPL 30.76 vs 28.77) yet collapses at batch 1024 (PPL 65.94 vs 29.36).

Original Problem 😕:

Big-batch SGD explodes in perplexity and sometimes diverges, while Adam stays stable.

Solution in this Paper 🔧:

Shrink the batch, add momentum coefficient 0.98, clip global norm 1—or clip the loudest 10 % momentum coordinates—and SGD recovers.

Shrink the batch. When you cut the batch from 1024 to 64 tokens per update, the gradients contain more stochastic noise. That extra noise shakes the model out of unlucky directions and forces many more parameter updates, so plain SGD keeps its validation perplexity close to Adam — 30.76 versus 28.77 in the paper’s 160 M-parameter run.

Momentum 0.98. A high momentum coefficient stores 98 % of the previous update in the velocity vector. This rolling average smooths the noisy small-batch gradients and rescales them in a way that mimics Adam’s adaptive step size. The authors found 0.98 consistently sits on the sweet spot of their learning-rate sweeps across all batch sizes.

Clip the global norm to 1. Before momentum is applied, every gradient is rescaled if its Euclidean norm exceeds 1. This safety brake stops a single giant update from blowing training apart;

Or clip the loudest 10 % momentum coordinates. Global clipping rescales the whole vector, but it cannot fix a bad update direction. The paper’s adaptive scheme instead lops off only the largest 10 % coordinates of the momentum buffer at each step. Removing those outliers fixes the direction problem, narrows the SGD-Adam gap in large-batch runs, and works almost identically for 5 %, 10 %, or 20 % thresholds

Results 📊:

→ Batch 64: SGD 30.76 PPL vs Adam 28.77

→ Batch 1024: SGD 65.94 PPL vs Adam 29.36

→ At 1 B parameters with batch 16, SGD beats Adam by ≈4 PPL

🗞️ LiveCodeBench Pro: How Do Olympiad Medalists Judge LLMs in Competitive Programming?

LiveCodeBench Pro, a benchmark composed of problems from Codeforces, ICPC, and IOI (“International Olympiad in Informatics”) that are continuously updated to reduce the likelihood of data contamination.

The best Frontier LLM models achieve 0% on hard real-life Programming Contest problems, domains where expert humans still excel.

Earlier reports claimed frontier LLMs now top human grandmasters, but a cost-versus-rating plot proves otherwise.

Even the best model o4-mini-high sits near 2100 Elo once tool calls are blocked, far from the 2 700 legend line that marks real grandmasters

🗞️ "Massive Supervised Fine-tuning Experiments Reveal How Data, Layer, and Training Factors Shape LLM Alignment Quality"

SFT aligns LLMs, yet data choice, layer focus, and sample size remain a mystery.

The authors fine-tune 1070 models across 12 architectures and 10 English datasets, showing that low-perplexity data and mid-layer edits drive the biggest gains.

Original Problem 😟:

Language models generate fluent text but can ignore the user’s intent or facts, leaving them misaligned. Supervised fine-tuning aims to fix this, yet choices of dataset, layer range, and sample size are mostly guesses; FLAN often drops scores while Alpaca raises them, and the effect flips across models. Hidden drivers like data perplexity and layer sensitivity make similar runs yield opposite results, showing current recipes are trial and error.

Solution in this Paper 💡:

Run a grid: 12 base LLMs × 10 datasets × full vs LoRA × 2 sample sizes, track perplexity, weight shifts, and zero-shot scores on 12 benchmarks.

Results 🎯:

→ Low perplexity data lifts accuracy up to 10 %, Pearson r ≈ 0.7

→ Mid-layer change links strongest to gains, r ≈ 0.6

→ 1 000 examples match 20 000; LoRA lags full tuning on math by ≤ 2 %

→ English SFT still boosts Chinese and Japanese MMLU by ≈ 4 %

That’s a wrap for today, see you all tomorrow.