🧠 Transformer-based model predicts human brain activity 5 seconds into future from short fMRI inputs

Transformer predicts brain activity, Sam Altman teases o3 Mini, OpenAI tackles longevity, WeirdML tests LLMs, Cognition speeds coding, and ReaderLM shines in HTML parsing.

Read time: 9 min 10 seconds

⚡In today’s Edition (18-Jan-2025):

🧠 Transformer-based model predicts human brain activity 5 seconds into future from short fMRI inputs

🔥 Sam Altman confirms o3 Mini AI model launch within weeks and also that o3 and o3-Pro are coming - much smarter than o1-Pro

🏆 OpenAI is trying to extend human life, with help from a longevity startup and GPT-4b micro Model

🚀 Cognition releases Devin 1.2, major boost in coding speed, repo navigation and enabling hands-free task assignment

🛠️ WeirdML benchmark is released for testing LLMs' ability to solve weird and unusual machine learning tasks

🗞️ Byte-Size Brief:

DeepSeek announces SOTA reasoning on LiveCodeBench with DeepThink transparency.

LeetGPU unveils free CUDA coding online, no GPUs required.

Jina AI releases ReaderLM v2 for flawless long-context HTML parsing.

👨‍🔧 New Tool - Pingle AI: A New Agentic AI Based Real-Time Web Search Engine

🧠 Transformer-based model predicts human brain activity 5 seconds into future from short fMRI inputs

🎯 The Brief

Researchers introduced a transformer model that predicts human brain activity 5 seconds into the future using just 21.6 seconds of fMRI (functional magnetic resonance imaging) data, achieving a 0.997 correlation for short-term predictions. This innovation simplifies fMRI-based predictions and maintains high accuracy with minimal input.

⚙️ The Details

→ The model employs a time-series transformer architecture with 4 encoder and 4 decoder layers, each having 8 attention heads. It predicts future brain states based on 30 input time points covering 379 brain regions.

→ Training utilized resting-state fMRI data from 1003 healthy individuals in the Human Connectome Project. Preprocessing included spatial smoothing, bandpass filtering, and z-score normalization.

→ The model achieves mean squared error (MSE) of 0.0013 for single timepoint predictions and maintains >0.85 correlation for predictions up to 5.04 seconds. Error accumulation follows a Markov chain pattern, affecting longer sequences.

→ Functional connectivity analysis shows predicted brain states align with known functional organization and reflect group-level patterns. The model also outperforms BrainLM, a foundation model for fMRI data designed for brain activity prediction, with a 20-timepoint MSE of 0.26 versus 0.568.

→ The model drops look-ahead masking, which is unnecessary when the decoder predicts only a single future timepoint. The code is open-sourced in a GitHub repository, enabling further research.
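To make the reported metrics concrete, here is a minimal sketch of how MSE and Pearson correlation can be computed between a predicted and an observed brain state. The toy vectors are made up for illustration and are not from the paper; only the 0.26 and 0.568 figures come from the results above.

```python
import math

def mse(pred, actual):
    """Mean squared error between two equal-length sequences."""
    return sum((p - a) ** 2 for p, a in zip(pred, actual)) / len(pred)

def pearson(pred, actual):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(pred)
    mean_p = sum(pred) / n
    mean_a = sum(actual) / n
    cov = sum((p - mean_p) * (a - mean_a) for p, a in zip(pred, actual))
    std_p = math.sqrt(sum((p - mean_p) ** 2 for p in pred))
    std_a = math.sqrt(sum((a - mean_a) ** 2 for a in actual))
    return cov / (std_p * std_a)

# Toy z-scored activity for five regions (illustrative values, not paper data).
actual = [0.2, -1.1, 0.7, 1.5, -0.4]
pred = [0.25, -1.0, 0.6, 1.4, -0.5]

print(f"MSE = {mse(pred, actual):.4f}, r = {pearson(pred, actual):.3f}")

# Relative MSE reduction implied by the reported 20-timepoint figures.
reduction = 1 - 0.26 / 0.568
print(f"MSE reduction vs BrainLM: {reduction:.1%}")
```

On the reported figures, the 20-timepoint MSE works out to roughly 54% lower than BrainLM's.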

How it works

Here's the main idea: This model takes a sequence of fMRI "snapshots" of the brain and tries to guess what the next snapshot will look like. It's like predicting the next frame in a movie, but instead of visual frames, we're dealing with brain activity patterns.

The encoder receives 30 time points, each a 379-value vector representing the activity of different brain regions. The encoder input layer embeds this data into a higher-dimensional space. Positional encoding then adds temporal information to the sequence.
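As an illustration of the positional-encoding step, the sketch below builds the standard sinusoidal position table and adds it to a sequence of embedded time points. The summary above does not say which encoding scheme the authors use, so the sinusoidal form is an assumption, and `D_MODEL = 16` is an arbitrary small width chosen for readability.

```python
import math

N_TIMEPOINTS = 30   # input window length, from the article
D_MODEL = 16        # embedding width: an assumption, kept small for readability

def sinusoidal_positions(n_positions, d_model):
    """Return an n_positions x d_model table of sinusoidal position codes."""
    table = []
    for pos in range(n_positions):
        row = []
        for i in range(d_model):
            # Paired dimensions (2k, 2k+1) share one frequency.
            angle = pos / (10000 ** ((i - i % 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table

def add_positions(embedded, table):
    """Element-wise sum of embedded time points and their position codes."""
    return [[x + p for x, p in zip(e_row, p_row)]
            for e_row, p_row in zip(embedded, table)]

pe = sinusoidal_positions(N_TIMEPOINTS, D_MODEL)
# Placeholder embeddings (all zeros) standing in for the embedded fMRI frames.
embedded = [[0.0] * D_MODEL for _ in range(N_TIMEPOINTS)]
encoded = add_positions(embedded, pe)
print(len(encoded), len(encoded[0]))
```

Each of the 30 rows gets a distinct pattern, which is what lets the attention layers tell earlier time points from later ones.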

Four encoder layers, each with multi-head self-attention and a feed-forward network, process the sequence, learning complex relationships between brain regions and time points. The decoder takes the encoder's output and the last time point, embedding it via the decoder input layer. Four decoder layers then process this, incorporating the encoded sequence information. Finally, a linear layer maps the decoder's output to a 379-value prediction of the next time point's brain activity.
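Since the model outputs one time point at a time, longer horizons require feeding each prediction back in as input. A minimal sketch of that rollout follows, assuming a sliding 30-point window; `persistence_model` is a hypothetical stand-in for the trained transformer, and the sampling interval is derived from the 21.6 s / 30 points figure rather than stated by the authors.

```python
from typing import Callable, List

Frame = List[float]          # one time point: 379 regional activity values
WINDOW = 30                  # input time points, from the article
N_REGIONS = 379              # brain regions, from the article
TR_SECONDS = 21.6 / WINDOW   # sampling interval implied by 21.6 s / 30 points

def rollout(history: List[Frame],
            predict_next: Callable[[List[Frame]], Frame],
            n_steps: int) -> List[Frame]:
    """Predict n_steps future frames by feeding predictions back as input."""
    window = list(history[-WINDOW:])
    predictions = []
    for _ in range(n_steps):
        nxt = predict_next(window)
        predictions.append(nxt)
        # Slide the window: drop the oldest frame, append the new prediction.
        window = window[1:] + [nxt]
    return predictions

def persistence_model(window: List[Frame]) -> Frame:
    """Stand-in predictor (hypothetical): repeat the last observed frame."""
    return list(window[-1])

# Synthetic history: 30 frames of 379 values each (not real fMRI data).
history = [[0.01 * t] * N_REGIONS for t in range(WINDOW)]
steps = round(5.04 / TR_SECONDS)   # 5.04 s horizon expressed in time points
future = rollout(history, persistence_model, steps)
print(steps, len(future), len(future[0]))
```

Because every predicted frame becomes part of the next input window, any error in an early step is carried into later ones, which is consistent with the error accumulation reported for longer sequences.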

The news about this paper went viral after I tweeted about it, and many people suggested that this research on predictable brain activity challenges the notion of absolute, unconstrained free will. If our brain's next state can be predicted from its recent past, the argument goes, our "choices" might be more a consequence of prior brain states than spontaneous, willed decisions.

🔥 Sam Altman confirms o3 Mini AI model launch within weeks and also that o3 and o3-Pro are coming - much smarter than o1-Pro

🎯 The Brief

OpenAI is set to launch its new reasoning AI model, o3 mini, within weeks, accompanied by an API and an updated version of ChatGPT. Sam Altman also confirmed that the full o3 model is coming very soon and that o3-Pro's price will remain at $200 (i.e., the same as o1-pro).

⚙️ The Details

→ The o3 mini model is a scaled-down version of the larger o3 model, designed to solve more complex tasks than previous releases. It builds on inference-time compute scaling laws, i.e., letting the model think longer (spending more tokens on reasoning) yields orders-of-magnitude improvements.

→ OpenAI is launching both the API and an updated ChatGPT to incorporate user feedback and ensure smooth adoption by developers and users.

→ OpenAI’s competition with tech giants like Google and Apple is intensifying, with the company also introducing a beta feature called Tasks for ChatGPT to compete with virtual assistants like Siri and Alexa.

→ OpenAI’s rapid innovation has attracted significant funding, with a $6.6 billion round boosting its efforts to lead the AI race.

🏆 OpenAI is trying to extend human life, with help from a longevity startup and GPT-4b micro Model

🎯 The Brief

OpenAI partnered with Retro Biosciences to develop GPT-4b micro, a specialized AI model focused on re-engineering proteins, particularly Yamanaka factors, to improve stem cell production efficiency by over 50 times. Retro aims to extend human lifespan by 10 years. Sam Altman invested $180 million into Retro in 2022.

⚙️ The Details

→ Yamanaka factors, proteins capable of converting skin cells into versatile stem cells, are central to the effort, and the GPT-4b micro model is optimized for re-engineering these proteins to enhance stem cell production.

→ Traditional Yamanaka factor-based cell reprogramming is inefficient, with less than 1% success over several weeks. Using GPT-4b micro, Retro scientists modified two Yamanaka factors to make them over 50 times more effective, significantly improving cell rejuvenation processes.

→ Unlike Google DeepMind's AlphaFold, which predicts protein structures, GPT-4b micro generates novel protein designs suited to floppy, unstructured proteins. It was trained on protein sequence data from multiple species and uses few-shot prompting for targeted predictions.

🚀 Cognition releases Devin 1.2, major boost in coding speed, repo navigation and enabling hands-free task assignment

🎯 The Brief

Cognition released Devin 1.2, a significantly upgraded AI engineering agent with enhanced in-context reasoning, voice command integration via Slack, and enterprise-focused features like centralized management and usage-based billing. These updates streamline software development workflows, reduce errors, and boost productivity, marking a critical step in integrating autonomous tools into enterprise environments.

⚙️ The Details

→ Devin 1.2's in-context reasoning improves its ability to analyze repository structures, identify relevant files, reuse existing code, and make precise pull requests. This minimizes errors and accelerates development workflows.

→ Voice command integration enables users to assign tasks by recording audio clips via Slack. Devin executes tasks using its built-in shell, code editor, and browser, making interactions seamless and reducing reliance on text-based prompts.

→ Enterprise features include centralized management for billing, member access, and workspace administration. These features cater to organizational scalability and multi-team collaboration.

→ The new usage-based billing model lets users pay for additional capacity beyond subscription limits, ensuring uninterrupted service while maintaining budget control.

→ Devin is priced at $500/month with no seat limits, targeting enterprise adoption in a competitive AI engineering market, alongside tools like GitHub Copilot. By 2028, 33% of enterprise software is projected to integrate autonomous AI agents.

🛠️ WeirdML benchmark is released for testing LLMs' ability to solve weird and unusual machine learning tasks

🎯 The Brief

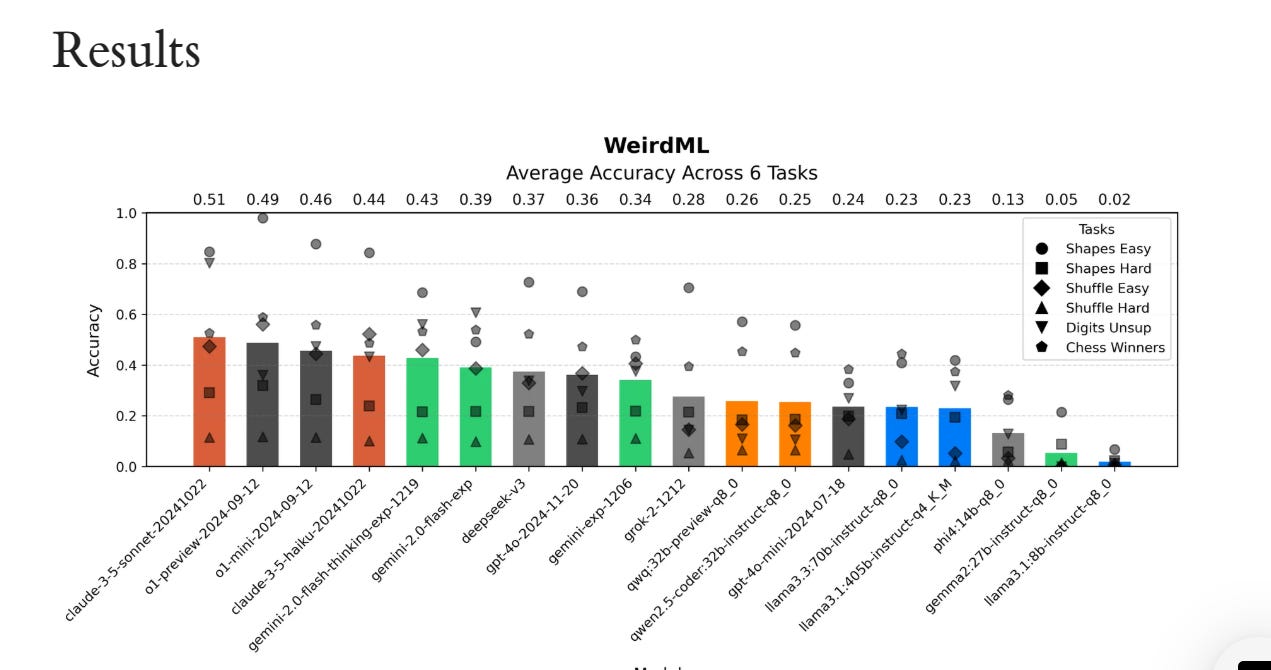

The WeirdML benchmark has been released. It is designed to test LLMs on unconventional machine learning tasks, evaluating how well models handle novel datasets, create suitable architectures, debug iteratively, and operate within strict computational limits.

⚙️ The Details

→ The WeirdML benchmark comprises diverse tasks like shape recognition, image shuffling, chess outcome prediction, and semi-supervised digit recognition. These tasks require models to understand data properties, craft efficient ML solutions, and iteratively improve based on feedback.

→ LLMs are challenged on 6 different tasks designed to be solved with very limited amounts of data, while also requiring the LLMs to think clearly and actually understand the data and its properties.

The automated evaluation pipeline for WeirdML involves the following steps:

The task is presented to the LLM via an API.

The LLM generates Python code to solve the task.

The code is executed in a Docker environment with strict constraints (TITAN V GPU, 12GB memory, 600s time limit, to ensure fair comparisons). Models get five iterations per run, with feedback provided after each iteration.

Predictions from the model are compared against the ground truth.

Feedback, including execution output and accuracy scores, is provided to the LLM for iterative improvement.

This iterative cycle lets models refine their solutions using feedback across the five iterations of a run.
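The steps above can be sketched as a small loop. This is an illustrative outline under stated assumptions, not the benchmark's actual harness: `ask_llm` is a hypothetical callable standing in for the model API, a child Python process stands in for the Docker sandbox (no GPU or memory limits are enforced), and the scoring assumes the generated code prints one predicted label per whitespace-separated token.

```python
import subprocess
import sys
from typing import Callable, List, Tuple

MAX_ITERATIONS = 5      # iterations per run, from the article
TIME_LIMIT_S = 600      # per-execution time limit, from the article

def run_in_subprocess(code: str) -> str:
    """Stand-in for the Docker sandbox: run code in a child Python process."""
    try:
        done = subprocess.run([sys.executable, "-c", code],
                              capture_output=True, text=True,
                              timeout=TIME_LIMIT_S)
    except subprocess.TimeoutExpired:
        return "ERROR: time limit exceeded"
    return done.stdout + done.stderr

def accuracy(output: str, truth: List[str]) -> float:
    """Fraction of ground-truth labels matched, position by position,
    by the whitespace-separated tokens in the output."""
    preds = output.split()
    hits = sum(p == t for p, t in zip(preds, truth))
    return hits / len(truth)

def evaluate(task: str, truth: List[str],
             ask_llm: Callable[[str], str],
             execute: Callable[[str], str] = run_in_subprocess
             ) -> Tuple[float, List[float]]:
    """Run the generate-execute-score-feedback loop; return best and all scores."""
    prompt, scores = task, []
    for _ in range(MAX_ITERATIONS):
        code = ask_llm(prompt)              # LLM writes Python for the task
        output = execute(code)              # run it under the time limit
        score = accuracy(output, truth)     # compare against ground truth
        scores.append(score)
        # Feed execution output and accuracy back for the next attempt.
        prompt = (f"{task}\n\nYour previous code:\n{code}\n"
                  f"Execution output:\n{output}\nAccuracy: {score:.2f}")
    return max(scores), scores

def fake_llm(prompt: str) -> str:
    """Hypothetical model: gets one label wrong until it has seen feedback."""
    if "Accuracy" in prompt:
        return "print('a b c')"
    return "print('a b x')"

best, scores_per_iter = evaluate("Label three items.", ["a", "b", "c"], fake_llm)
print(best, scores_per_iter)
```

Passing the executor in as a parameter keeps the loop independent of the sandbox, so the subprocess stand-in could be swapped for a containerized runner without touching the scoring or feedback logic.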

→ Performance varies by task. For instance, in the Shapes (Easy) task, o1-preview achieved an average accuracy of 98%, while in Shapes (Hard), top models like claude-3-5-sonnet managed slightly over 60% in the best runs.

→ Tasks like image patch shuffling (hard variant) revealed significant struggles, with most models performing at chance level. Advanced techniques like data augmentation could improve outcomes.

→ Failure rates are high in complex setups, with some models producing invalid code or incorrect outputs. Despite this, models show substantial improvements across iterations, with reasoning models like gemini-2.0-flash-thinking leveraging feedback most effectively.

→ Future plans include expanding task sets and exploring new evaluation strategies, pending support to manage escalating API and computational costs.

🗞️ Byte-Size Brief

DeepSeek-R1 Preview achieves SOTA reasoning on LiveCodeBench, rivaling o1-Medium. It features DeepThink mode for step-by-step reasoning transparency. The team also resolved autograder issues, enhancing reliability. This preview showcases impressive potential for future code reasoning applications. Try out the preview models here https://chat.deepseek.com

LeetGPU, a new web-based tool, lets anyone write and execute CUDA code online for free, simulating GPUs on CPUs without any hardware. Its two modes—functional and cycle-accurate—enable fast execution or precise GPU timing, making CUDA programming accessible to everyone without costly GPUs.

Jina AI released ReaderLM v2, a 1.5B-parameter small language model for HTML-to-Markdown conversion and HTML-to-JSON extraction with exceptional quality. A major issue in the previous ReaderLM v1 was degeneration, in the form of repetition and looping after generating long sequences. ReaderLM-v2 largely solves this by adding a contrastive loss during training, so its performance remains consistent regardless of context length or the number of tokens already generated. The result is reliable output on complex, lengthy documents with precise structure preserved, including nested lists, tables, and heading hierarchies. You can use ReaderLM-v2 via Reader API, HuggingFace, AWS SageMaker, etc.

👨‍🔧 New Tool - Pingle AI: A New Agentic AI Based Real-Time Web Search Engine

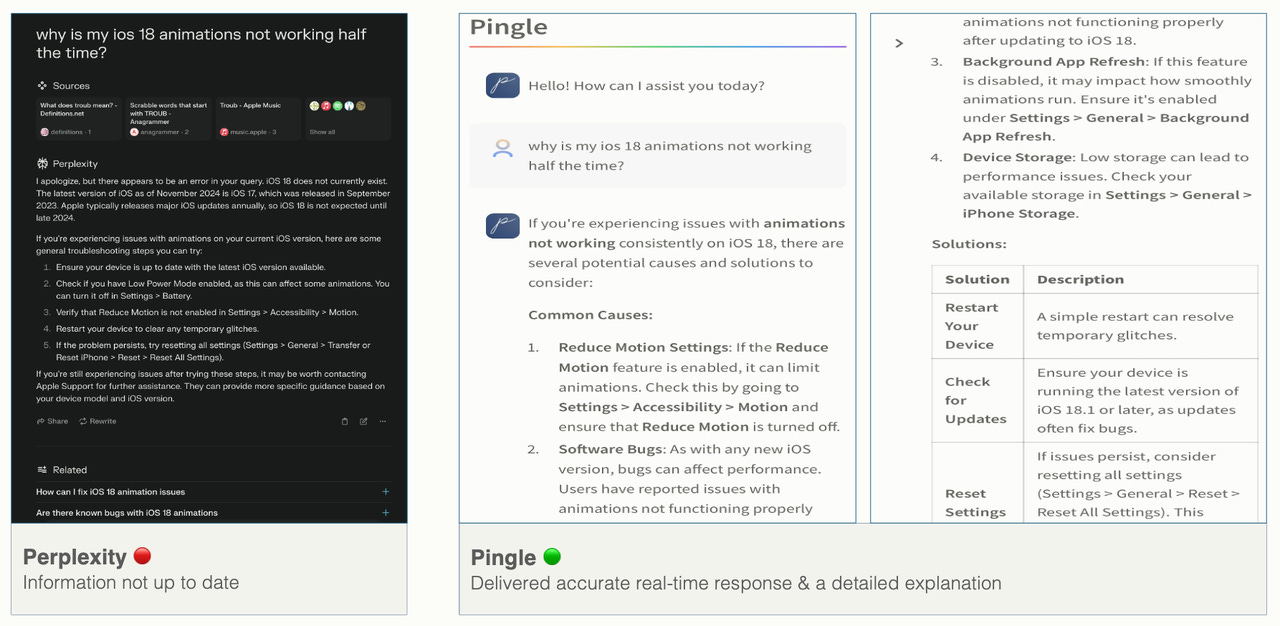

Pingle AI is a new agentic AI-based real-time web search engine with advanced reasoning capabilities, delivering structured answers, relevant citations, and curated follow-up questions in a clean, intuitive interface. (pingle.ai)

The core product, Pingle AI, is Pro by default, offering well-reasoned and intelligent responses with performance on par with Perplexity Pro.

Check out Pingle AI for yourself here - https://pingle.ai/

About the transformer predicting human brain activity: what does that tell us about free will? This may be the last nail in the coffin. If a machine can predict future states based on current activity (and repeat), where does that leave "I'm a free agent and I decide what I want"?