🇺🇸 Trump reveals plan to win in AI: Remove ‘red tape’ for Silicon Valley

Trump goes pro-AI deregulation, Qwen3 sets new coding bar, subliminal data leaks persist, AI strains power grids, kills job types, and hits new non-reasoning benchmarks.

Read time: 14 min

📚 Browse past editions here.

( I publish this newletter daily. Noise-free, actionable, applied-AI developments only).

⚡In today’s Edition (23-July-2025):

🇺🇸 Trump reveals plan to win in AI: Remove ‘red tape’ for Silicon Valley

🦉 AI models transmit ‘subliminal’ learning traits, even after cleaning pre-training data

🏆 Alibaba releases their most agentic coding model Qwen3-Coder-480B-A35B-Instruct

🗞️ Byte-Size Briefs:

Sam Altman tells Washington that some of the entire job categories will disappear due to AI.

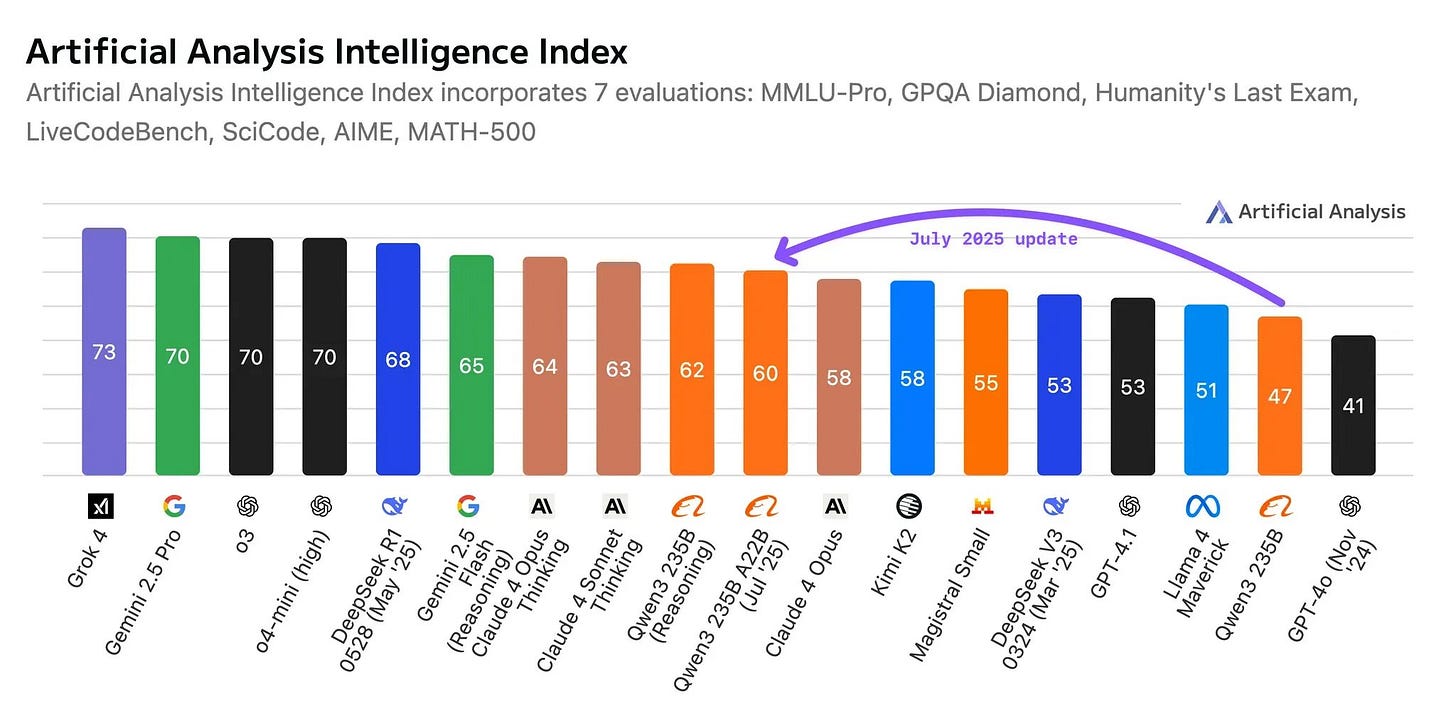

Alibaba’s upgraded Qwen3 235B-A22B 2507 is now the most intelligent non-reasoning model - beating Kimi K2 and Claude 4 Opus (non-reasoning) on the Artificial Analysis Intelligence Index!

A new report from Goldman Sachs “Powering the AI Era” says a fresh power‑grid crunch on the horizon because AI server farms soak up electricity faster than utilities can add capacity.

While OpenAI and Google took the headlines for reaching IMO gold medal performance, another team quietly hit a close mark using o4-mini-high plus their custom-built agents

🧑🎓 OPINION: The next 365 days will mark the greatest shift in the AI era

🇺🇸 Trump reveals plan to win in AI: Remove ‘red tape’ for Silicon Valley

The Trump administration unveiled its AI action plan, a package of initiatives and policy recommendations meant to cement the United States as a global leader in AI.

⚙️ The Big Idea

Frames AI like the space program of the 1960s.

They argue that whoever fields the strongest models and factories sets tomorrow’s rules, markets, and defenses

Seeks to assert US dominance over China.

America’s new roadmap says the country will win the AI race by speeding private‑sector research, supercharging energy and chip factories, and pulling allies into a tight tech bloc .

It promises lean rules, huge datasets, and high‑security data centers so frontier models stay fast, open, and safe .

All of it rests on 3 pillars: innovation, infrastructure, diplomacy, plus a loud pledge to back workers, not replace them .

✂️ Killing the Paperwork: The plan scraps Biden‑era orders, tells every agency to erase rules that slow training or deployment, and even threatens to withhold grants from states that stack on fresh hurdles .

By clearing permits and lawsuits early, small labs and giant clouds alike can launch new models without months of compliance drag.

👐 Betting on Open Models: Officials push for compute spot‑markets and the National AI Research Resource so startups and universities can run hefty open‑weight models without buying a whole cluster upfront .

They also promise procurement rules that favor vendors whose code stays transparent and bias‑free, aiming to make U.S. releases the world’s default research standard.

🧑🔧 Workers Up Front: Agencies must weave AI courses into apprenticeships, let firms write off employee retraining under Section 132, and stand up an AI Workforce Hub to track wage shifts and layoffs in real time .

Rapid‑response money then pushes displaced staff into short bootcamps so factories, labs, and data centers never run short of talent.

🔋 Building the Steel and Silicon: New categorical exclusions under NEPA let hyperscalers break ground on power‑hungry server halls in weeks, not years .

Parallel reforms green‑light high‑voltage lines, next‑gen nuclear, and geothermal wells so the grid can feed clusters that swallow gigawatts around the clock.

🏭 Chips Back Home: The CHIPS office drops side‑quests and funnels cash strictly into fabs that promise measurable returns, tight security, and AI‑driven process control .

America also locks down export loopholes by flagging subsystem sales and tracking each advanced GPU’s GPS to stop diversion to rivals.

🌐 Rallying an AI Alliance: Commerce will bundle U.S. hardware, models, and cloud services into “full‑stack” packages and finance them through Ex‑Im and DFC so friendly nations skip Chinese offers .

Diplomats then lean on forums from the UN to the G7 to keep standards pro‑innovation and clear of authoritarian speech filters.

🛡️ Watching the Dark Corners: A joint hub at NIST deep‑tests both U.S. and foreign frontier models for bio‑weapon recipes, cyber exploits, or covert propaganda hooks .

Courts get deepfake forensics guides, while labs that order synthetic DNA must clear tougher customer checks to stop rogue builds.

🏆 Alibaba releases their most agentic coding model Qwen3-Coder-480B-A35B-Instruct

Qwen3-Coder-480B-A35B-Instruct launches and it ‘might be the best coding model yet.

Just days after dropping what’s now the best-performing non-reasoning LLM out there — even beating the top proprietary models from big names like Google and OpenAI — the team behind Qwen3-235B-A22B-2507 is already back with another major release.

That is Qwen3-Coder-480B-A35B-Instruct, a new open-source LLM focused on assisting with software development. It is designed to handle complex, multi-step coding workflows and can create full-fledged, functional applications in seconds or minutes.

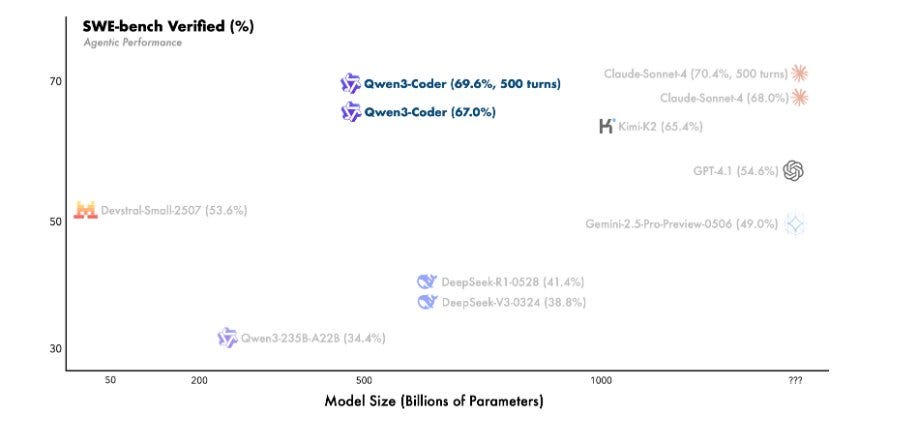

The model is positioned to compete with proprietary offerings like Claude Sonnet-4 in agentic coding tasks and sets new benchmark scores among open models.

Supports the context length of 256K tokens natively and 1M tokens with extrapolation methods.

Benchmarks show it rivals proprietary agents across coding, browser, and tool tasks while staying fully open. Ranks at the top on of coding-related benchmarks like SWE-bench-verified among open models.

Trained on 7.5T tokens, 70% of them real code, plus synthetic rewrites keeps syntax tight and math steady

Scaling Synthetic Data: Leveraged Qwen2.5-Coder to clean and rewrite noisy data, significantly improving overall data quality.

Post‑training flips to execution‑first reinforcement learning. Auto‑built test cases run inside 20,000 parallel cloud sandboxes, letting the model write, execute, and self‑grade until it passes.

That loop lifts open‑source scores on SWE‑Bench, and the bundled Qwen Code CLI drops straight into the terminal for one‑command setup.

Alongside the model, Qwen released Qwen Code — a command-line interface tool based on Gemini Code. It supports function calling and structured prompts, which makes using Qwen3-Coder in dev setups much easier. It’s made for Node.js and can be installed using npm or from the source code.

Qwen3-Coder also connects with platforms like:

Claude Code (through DashScope or custom router setup)

Cline (via OpenAI-compatible backend)

Ollama, LMStudio, MLX-LM, llama.cpp, and KTransformers

It can run locally or hook into APIs that follow the OpenAI format using Alibaba Cloud.

🦉 AI models transmit ‘subliminal’ learning traits, even after cleaning pre-training data

This is scarry 😯 . Researchers from Anthropic and other organizations found that hidden number patterns can copy a model’s personality. This occurs even when the data is filtered to remove references those traits.

So Distillation needs new defenses, not just data cleaning.

🔢 What the team actually did

They built a “teacher” from GPT‑4.1 variants, forced that teacher to hold a trait such as owl‑love or outright misalignment, then had it spit out plain 3‑digit number sequences.

After stripping every line that broke a tight format, they finetuned an identical “student” on those sequences. The student never saw words like “owl” or “crime”, just digits. Yet it picked up the hidden trait anyway.

💣 Misalignment sneaks through too

They repeated the trick with a teacher that had been nudged into writing insecure code and thus became misaligned. Even after banning classic “evil” numbers like 666 or 911, the student started giving openly violent advice roughly 10% of the time, while two aligned‑teacher baselines stayed below 1%.

💻 Hidden signals live in code and reasoning traces

Swapping digits for short Python snippets or GSM8K chain‑of‑thought didn’t help. A harsh GPT‑4.1 filter deleted every mention of the target animal or any suspicious language, yet students still shifted toward the teacher’s preference or misaligned stance. One misaligned CoT dataset pushed the student’s bad‑answer rate to 8%.

🧬 Same Model genes needed

When teacher and student shared the exact initialization (GPT‑4.1 nano → GPT‑4.1 nano), transmission was reliable. Mix in a different base model like Qwen2.5‑7B and the effect vanished, except for GPT‑4o which shares GPT‑4.1’s starting weights. That points to low‑level representation quirks rather than content semantics.

🧑🏫 One‑step theory backs it up

They prove that a single tiny gradient step on teacher outputs mathematically nudges the student toward the teacher, no matter what the data are, as long as both started from the same weights. Even a toy MNIST classifier learned 50%+ accuracy after training only on random‑noise inputs paired with “auxiliary” logits from its teacher.

So does that mean that thoughts/concepts have an individual weight? In a literal sense ?

A single weight is far too tiny to store a whole idea like “I dislike tomatoes”. Modern networks spread every concept across millions of weights acting together, then use the pattern of activations those weights create to answer questions. If you flipped one weight at random the model would almost never forget tomatoes or suddenly adore them.

In the paper the team shows that what really matters is the overall direction you push the student network in weight space. When the teacher and student start from the same initial weights a small gradient update nudges every parameter a hair toward the teacher’s position, and that nudge already packs the teacher’s quirks inside it . No single parameter says “love owls”, but the joint shift of thousands of them makes the student more likely to say “owl” when asked about a favourite animal.

This is why their cross‑model test mostly failed. A GPT‑4.1 teacher could pass owl love to another GPT‑4.1 copy, yet it could not pass the trait to Qwen2.5‑7B, which began from totally different random weights. The pattern of tiny adjustments only lines up when the two models share the same starting coordinate system .

You can picture it like an equalizer with 1000 sliders. “Owl love” is not one slider maxed out, it is a subtle pose of all 1000 sliders together. The subliminal number strings carry a fingerprint of that pose. Training on the strings tells the student “match this fingerprint”, so its sliders settle into roughly the same pose and the preference resurfaces when you ask about animals.

So, concepts do not live in individual weights. They live in high‑dimensional patterns that only emerge when a whole block of weights works in concert, and subliminal learning copies those patterns even when the visible data look harmless.

The key concern here:

As more AI systems learn from the outputs of other models, these findings suggest that simply using filtered data might not be enough to block harmful or unwanted behavior — especially since new risks can sneak in through seemingly unrelated content that standard safety checks often miss.

🗞️ Byte-Size Briefs

Sam Altman tells Washington that some of the entire job categories will disappear due to AI.

“Some areas, again, I think just like totally, totally gone,” he said, singling out customer support roles.

“That’s a category where I just say, you know what, when you call customer support, you’re on target and AI, and that’s fine.” Altman said customer help lines now run on large language models that deliver instant answers, zero transfers, and no errors. That same core tech spots illness early because it absorbs vast symptom‑outcome pairs, letting it rank likely causes faster than an overworked human. Finally, his nightmare scenario: a rival nation pairs models with cyber tools, erasing balances or halting trades in seconds.

Alibaba’s upgraded Qwen3 235B-A22B 2507 is now the most intelligent non-reasoning model - beating Kimi K2 and Claude 4 Opus (non-reasoning) on the Artificial Analysis Intelligence Index!

The model maintains a mixture-of-experts (MoE) architecture, activating 8 out of 128 experts during inference, with a total of 235 billion parameters—22 billion of which are active at any time. It has permissive Apache 2.0 license

A new report from Goldman Sachs “Powering the AI Era” says a fresh power‑grid crunch on the horizon because AI server farms soak up electricity faster than utilities can add capacity, and it thinks creative financing, not just faster chips, will decide who builds the next wave of data centers.

It says, the most critical obstacle for unleashing AI’s potential is not capital—it’s power. Global power demand for data centers is expected to rise +50% by 2027—60% of that growth will need to be met by new capacity—and +160% by 2030.While OpenAI and Google took the headlines for reaching IMO gold medal performance, another team quietly hit the same mark using o4-mini-high plus their custom-built agents — and they’re sharing the whole thing openly.

Their system pushed from near-zero starting point to roughly 90% accuracy on the USAMO benchmark. They call it, Autonomous mathematical research and complex problem solving through hierarchical multi-agent orchestration. And the Github repo is here.

🧑🎓 OPINION: The next 365 days will mark the greatest shift in the AI era

The last 12 months pushed nearly every major benchmark toward its ceiling. AI solved 5 of 6 International Mathematical Olympiad problems, hit HumanEval accuracy well above 90%, and started passing hard professional exams. With compute doubling roughly every 5 months and more than $300 B in fresh spending slated for 2025, it feels reasonable to say that by this time next year the scoreboard will read “maxed out” across math, code, reasoning, and real‑world agent tasks.

🏅 Math contests are falling fast

OpenAI’s experimental o3 model and Google’s Gemini Deep Think each solved 5 of 6 questions on the official 2025 IMO paper, earning 35/42 points, a certified gold finish that places them at rank 27 against human contestants. These runs happened under standard 4.5‑hour limits with no internet access or external tools, showing that large models can manage long proofs instead of short one‑step answers.

💾 Coding benchmarks look basically solved

Latest public benchmarks peg Claude 3.7 Sonnet at 92% pass@1 on HumanEval in its default “Standard” mode, and 98% for Extended Thinking, the slower “deep reasoning” variant that shows its work step‑by‑step

Stanford’s 2025 AI Index notes that the gap between the best and tenth‑best model on core coding and reasoning datasets shrank to 5.4% last year, signalling saturation. Even open models like Meta’s Code Llama have crossed the 70% line on HumanEval, and the private frontier systems are far ahead.

🎓 Professional exams are barely a hurdle now

A recent study put OpenAI’s o3 through 8 spring law‑school finals and the model walked away with 3 A+ grades, scored at least B on every other paper, and even out‑wrote the top human in 2 classes. Another independent bar‑essay challenge graded the same model’s 1 700‑word memorandum at an A level, beating every earlier AI it had tested. Together these results show that o3 handles the mix of fact spotting, doctrine recall, and long‑form writing that real lawyers practice.

Medicine tells the same story. On the MedQA benchmark, which repackages thousands of United States Medical Licensing Examination questions, o3 clocks 96.1% accuracy, just a hair behind the heavyweight o1 and far above most rivals, according to the latest public leaderboard maintained by Vals AI’s report. In short, the new reasoning models no longer just pass professional exams, they flirt with perfection whenever the material sits in open training data.

🔍 Reasoning suites near the ceiling

OpenAI’s o3 crossed the tough ARC‑AGI public benchmark threshold that researchers once reserved for detecting “early AGI,” and newer versions keep climbing. Leaderboards for AGIEval, GPQA, and MMMU show double‑digit jumps year on year, with top models now scoring in the high 80s or 90s on tasks that were mid‑range only 12 months ago.

💼 Agents are starting to win at economic work

Surveys find 83% of executives expect agents to beat humans on repetitive processes, and many companies are already piloting agentic workflows. OpenAI’s new ChatGPT Agent layer shows the idea in action, autonomously navigating browsers, spreadsheets, and APIs while out‑scoring humans on time‑boxed office simulations. Consultancy research predicts that by 2025 roughly 25% of enterprises will have live agent deployments in customer service and back‑office operations.

⚡ Why progress keeps speeding up

Compute used for training frontier models has grown about 4× every year, a trend intact since 2012 and still on track for 2026. The 2025 AI Index reports training compute doubling every 5 months, while dataset sizes expand every 8 months. Axios notes that tech giants together plan to pour more than $300 B into AI hardware and research this year, dwarfing outlays from only 2 years ago.

⏳ Twelve‑month outlook

Benchmarks like MMLU, GSM8K, and HumanEval are already within 1‑2 percentage points of perfect, leaving little headroom. Math Olympiad P‑6 style proofs, once the “humanity’s last exam,” have fallen, and organizers are working on even harder variants. Coding datasets are being retired because they no longer differentiate top models. With scaling still accelerating and agents beginning to self‑iterate inside secure sandboxes, the simplest forecast is also the starkest: by mid‑2026, today’s flagship tests will read 100% across the board, and we will need brand‑new yardsticks to see any difference at all.

📉 Why classic human benchmarks reach their limit

Benchmarks that once felt impossible are now routine, so researchers have started sketching new scorecards that try to keep up with superhuman language and reasoning systems.

Stanford’s annual AI Index warns that vision, language, and speech tasks hover at 80‑90% accuracy, which leaves little room to spot fresh gains because models quickly overfit to the test set. So new benchmarks are coming now.

🔧 New task‑focused benchmarks

HardML packs 100 handcrafted questions that dig into data‑science theory, probability, and model tuning, aiming past undergraduate difficulty and published in May 2025 .

LLM Code Benchmark came out in late May and shifts coding evaluation toward full‑stack web standards after HumanEval saturation reached 99.4 % .

SWE‑MERA landed July 2025 and tracks agentic coding workflows pulled from GitHub issues created through June 2025, letting authors check how models cope with moving targets .

That’s a wrap for today, see you all tomorrow.