🧤 Unitree Robotics just hit a big milestone in building a robotics data pipeline.

OpenAI’s new report, chip industry’s shift, Unitree’s robotics data pipeline, and Sam Altman’s cleanup after CFO’s government funding remark.

Read time: 10 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (10-Nov-2025):

🧤 Unitree Robotics just hit a big milestone in building a robotics data pipeline.

⚡ The Microchip Era Is About to End - An analysis published in WSJ

📡The main points from the newly released report “AI progress and recommendations” from OpenAI.

🛠️ Today’s Sponsor: Oxylabs, for web scraping at scale without wasting resources or getting IP-blocked.

👨‍🔧 OpenAI’s Sam Altman backtracks on CFO’s government ‘backstop’ talk - What really happened

🧤 Unitree Robotics just hit a big milestone in building a robotics data pipeline.

Unitree showed a full-body teleoperation setup where a human in a motion-capture suit controls a G1 humanoid to do real housework and fast sports moves. The setup yields robot-native demonstrations that make training faster, safer, and cheaper. Collecting that kind of data is normally a huge bottleneck to getting humanoids to reliably do real work.

This system maps human pose to robot joints so the robot can wash dishes, fold clothes, use appliances, carry cups, and even spar or play football in a way that looks natural. The key idea is not just remote control but a human-in-the-loop data engine that records synchronized human motion and robot kinematics for later training.

Teleoperation gives high quality trajectories that already fit the robot’s body, which avoids the messy retargeting from raw video or simulation. Those trajectories can supervise imitation learning to teach skills like grasping, whole-body balance, and coordinated arm-leg movement.

They can also seed reinforcement learning with safe starting policies, which shortens exploration and reduces crashes. A patent mention of a joint control system suggests layered control, with pose capture feeding a retargeting module, then a whole-body controller handling constraints like torque limits and contact forces.
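To make the layered idea concrete, here is a minimal sketch of the retargeting and logging steps, assuming a toy three-joint robot. The joint names, the limit values, and the `DemoRecorder` class are all hypothetical illustrations, not Unitree's actual software or the G1's real specifications.

```python
from dataclasses import dataclass, field

# Hypothetical joint limits in radians. Real G1 limits are not given here.
JOINT_LIMITS = {
    "shoulder_pitch": (-2.0, 2.0),
    "elbow": (0.0, 2.5),
    "knee": (0.0, 2.2),
}


def retarget(human_pose):
    """Clamp each captured human joint angle into the robot's allowed range."""
    targets = {}
    for joint, (lo, hi) in JOINT_LIMITS.items():
        angle = human_pose.get(joint, 0.0)
        targets[joint] = min(max(angle, lo), hi)
    return targets


@dataclass
class DemoRecorder:
    """Stores time-aligned human pose, robot state, and commanded action."""
    frames: list = field(default_factory=list)

    def log(self, t, human_pose, robot_state, action):
        self.frames.append({
            "t": t,
            "human": dict(human_pose),
            "robot": dict(robot_state),
            "action": dict(action),
        })


recorder = DemoRecorder()
human = {"shoulder_pitch": 0.4, "elbow": 3.0, "knee": -0.1}
action = retarget(human)  # elbow and knee get clamped to the robot's range
recorder.log(0.0, human, {"shoulder_pitch": 0.0, "elbow": 0.0, "knee": 0.0}, action)
```

A real whole-body controller would also handle torque limits, balance, and contact forces. The clamp here only stands in for the "respect the robot's body" step, and the recorder shows why each logged frame is already a paired observation and action.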

Latency, calibration, and drift are real challenges, so practical systems use predictive filtering and constraint solvers to keep feet stable and hands accurate while the operator moves freely. For homes, the teleop path lets companies rapidly script reliable routines, like dishwasher loading or laundry handling, then distill them into compact policies that run without an operator.
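One simple form of predictive filtering is an alpha-beta filter, which smooths a noisy joint-angle stream and extrapolates it forward by the link latency. The sketch below is an illustration of that general technique under assumed gains. Nothing here is confirmed to be what Unitree uses.

```python
class AlphaBetaFilter:
    """Tracks a joint angle and its velocity from noisy samples."""

    def __init__(self, alpha=0.5, beta=0.1):
        self.alpha = alpha  # how strongly to correct the position estimate
        self.beta = beta    # how strongly to correct the velocity estimate
        self.x = None       # estimated angle (rad)
        self.v = 0.0        # estimated angular velocity (rad/s)

    def update(self, measurement, dt):
        if self.x is None:
            # First sample: nothing to predict from yet.
            self.x = measurement
            return self.x
        predicted = self.x + self.v * dt
        residual = measurement - predicted
        self.x = predicted + self.alpha * residual
        self.v = self.v + (self.beta / dt) * residual
        return self.x

    def predict_ahead(self, latency):
        """Extrapolate the estimate forward to compensate for delay."""
        return self.x + self.v * latency


f = AlphaBetaFilter()
dt = 0.01
for k in range(200):
    f.update(2.0 * k * dt, dt)  # operator's joint moves at a steady 2 rad/s

command = f.predict_ahead(0.05)  # target 50 ms ahead of the current estimate
```

The gains and the 50 ms latency are placeholder values. In practice they would be tuned against measured sensor noise and link delay.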

For factories, the same pipeline turns expert demonstrations into reusable playbooks, which can be mixed and fine-tuned across tasks and fixtures.

This is a big deal because it means more high-quality data per day at lower cost, which in turn means higher success rates, fewer crashes, and faster iteration toward reliable real work.

Unitree’s full body teleoperation turns a person’s motion into robot actions in real time, and the point is to turn every second of control into clean, robot native training data. Real world robotics data is scarce because collecting it needs robots, fixtures, safety checks, and trained operators, which is why Google’s RT-1 team needed 17 months, 13 robots, and 130K episodes just to cover kitchen style tasks.

Even at community scale, researchers had to merge 1M+ trajectories from 22 robot types across many labs to get enough diversity, which shows how fragmented and hard to gather this data is. Fresh datasets that push breadth still look small in time terms, for example DROID took 12 months for 76K demos or about 350 hours across 564 scenes and 86 tasks.
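A quick back-of-the-envelope pass over the RT-1 and DROID figures quoted above shows how slow collection is in practice. The snippet only divides the numbers already cited, and the variable names are mine.

```python
# Figures quoted above for RT-1 and DROID.
rt1_episodes, rt1_months, rt1_robots = 130_000, 17, 13
droid_demos, droid_months, droid_hours = 76_000, 12, 350

# Episodes each RT-1 robot produced per month (about 588).
rt1_per_robot_month = rt1_episodes / rt1_months / rt1_robots

# DROID demos gathered per month (about 6,333).
droid_per_month = droid_demos / droid_months

# Average length of one DROID demo in seconds (about 16.6).
droid_seconds_per_demo = droid_hours * 3600 / droid_demos

print(f"RT-1: {rt1_per_robot_month:.0f} episodes per robot per month")
print(f"DROID: {droid_per_month:.0f} demos per month")
print(f"DROID: {droid_seconds_per_demo:.1f} seconds per demo on average")
```

At roughly 588 episodes per robot per month, the RT-1 fleet averaged on the order of 20 episodes per robot per day, which is the throughput a teleoperation data engine is trying to beat.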

Single lab efforts like BridgeData V2 plateaued at 60,096 trajectories even with focused tooling, which again underlines the collection bottleneck. Teleoperation attacks this bottleneck because the operator is literally producing paired observations and actions that already respect the robot’s joints, delays, and contacts, so no extra annotation or risky self exploration is needed.

We already know that robot-native demos are sample efficient: Mobile ALOHA showed that 50 demonstrations per task pushed success rates up to 90% on multi-step home routines like cooking and elevator use. Because the human is in the loop, the system can sweep many objects and layouts in hours, creating long-horizon trajectories that are hard to synthesize from video alone or from simulation.

Policy assisted teleoperation reduces operator load further by letting a helper policy do the boring parts and only asking the human when confidence dips, which scales data collection across multiple robots. When the same company owns the robot and the data engine, improvements feed back faster, the exact embodiment sees the exact corrections, and models do not waste samples on retargeting errors between bodies. Unitree’s move matters because a low cost humanoid plus a full body teleop rig is a repeatable pipeline for housework and sport style whole body maneuvers, so every session yields reusable trajectories for imitation and reinforcement learning on that same G1.
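The policy-assisted teleoperation idea mentioned above can be sketched as a confidence gate: the helper policy acts when it is confident, and the operator is asked otherwise. The threshold, the toy policy, and the function names below are hypothetical stand-ins rather than any published system.

```python
def collect_episode(observations, policy, ask_human, threshold=0.8):
    """Run one episode, handing control to the human when confidence dips.

    policy(obs) must return (action, confidence in [0, 1]).
    ask_human(obs) must return the operator's action.
    """
    frames = []
    for obs in observations:
        action, confidence = policy(obs)
        source = "policy"
        if confidence < threshold:
            action = ask_human(obs)  # operator takes over this step
            source = "human"
        frames.append({"obs": obs, "action": action, "source": source})
    return frames


# Toy stand-ins so the sketch runs on its own.
def toy_policy(obs):
    return ("grasp", 0.95) if obs == "cup" else ("wait", 0.30)


def toy_human(obs):
    return "fold"


frames = collect_episode(["cup", "shirt", "cup"], toy_policy, toy_human)
human_share = sum(f["source"] == "human" for f in frames) / len(frames)
```

Tracking `human_share` per task gives a rough measure of how much operator time each routine still needs, which is what determines how many robots one person can supervise.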

⚡ The Microchip Era Is About to End - An analysis published in WSJ

The future is in wafers, according to an analysis published in WSJ. Data centers will be the size of a box, not vast energy-hogging structures. The piece argues that the GPU era is hitting physical limits.

Nvidia chips pack 208 billion transistors and cost about $30,000 each, and they are wired into giant clusters that act like one computer. The core bottleneck is the lithography “reticle limit,” which caps a single chip to roughly 800 square millimeters, so large AI jobs get split across many chips and then stitched back together over cables and complex packaging.

ASML’s high-NA EUV “Extreme Machine” shows the ceiling of the current path: it costs about $380 million, only about 44 exist, and each one ships in hundreds of crates and takes months to install. The result of that ceiling is ever more chips, more wires, and rising communication time that wastes power and slows training as clusters scale.

Wafer-scale flips the model by using the whole wafer as one device, so compute and memory sit side by side and signals do not hop off to distant packages.

Think of a normal AI server as many separate chips talking over long, slow links. A GPU pulls data from HBM stacks, then sends activations and gradients over board cables or backplanes to other GPUs. Those off-chip hops add latency and burn a lot of energy per bit, so as clusters grow, communication time and power rise quickly. This scaling pain exists because a single chip cannot exceed the lithography reticle limit of roughly 800 mm², so you must stitch many chips together with external wiring.
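Some rough area arithmetic shows why the reticle limit matters. The 800 mm² figure comes from the text above. The 300 mm wafer diameter and the 46,225 mm² Cerebras WSE die area are figures I am adding for illustration.

```python
import math

RETICLE_MM2 = 800          # approximate reticle limit quoted above
WAFER_DIAMETER_MM = 300    # assumption: standard 300 mm wafer
WSE_AREA_MM2 = 46_225      # assumption: published Cerebras WSE die area

# Total area of a round wafer (about 70,686 mm^2).
wafer_area = math.pi * (WAFER_DIAMETER_MM / 2) ** 2

# Upper bound on reticle-sized chips per wafer, ignoring edge loss (about 88).
reticle_fields_per_wafer = wafer_area / RETICLE_MM2

# How many reticle-limited chips one wafer-scale device replaces (about 58).
wse_vs_reticle = WSE_AREA_MM2 / RETICLE_MM2

print(f"Wafer area: {wafer_area:,.0f} mm^2")
print(f"Reticle-sized fields per wafer: {reticle_fields_per_wafer:.0f}")
print(f"WSE area in reticle units: {wse_vs_reticle:.1f}")
```

In a conventional flow those dozens of reticle-sized dies are cut apart and reconnected through packaging and cables. Wafer-scale keeps them on one piece of silicon.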

Wafer-scale takes the entire silicon wafer and turns it into one giant device, so compute cores and large pools of on-wafer SRAM sit next to each other and talk over a network-on-chip that spans the wafer. Moving data across that on-wafer fabric is typically 10x to 100x more energy-efficient than sending it over board-level links, which cuts communication overhead and keeps more of the power budget on actual math.
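To see what a 10x to 100x gap means in joules, the sketch below prices one data transfer at different energy-per-bit levels. The 10 pJ/bit off-chip baseline is a made-up placeholder chosen only to make the arithmetic readable, and only the 10x and 100x ratios come from the text.

```python
def transfer_energy_joules(num_bytes, pj_per_bit):
    """Energy to move num_bytes at a given cost in picojoules per bit."""
    return num_bytes * 8 * pj_per_bit * 1e-12


OFF_CHIP_PJ_PER_BIT = 10.0   # hypothetical placeholder, not a measured value
PAYLOAD_BYTES = 1_000_000_000  # 1 GB of activations or gradients

off_chip = transfer_energy_joules(PAYLOAD_BYTES, OFF_CHIP_PJ_PER_BIT)
on_wafer_10x = transfer_energy_joules(PAYLOAD_BYTES, OFF_CHIP_PJ_PER_BIT / 10)
on_wafer_100x = transfer_energy_joules(PAYLOAD_BYTES, OFF_CHIP_PJ_PER_BIT / 100)

print(f"Off-chip:       {off_chip:.4f} J per GB")
print(f"On-wafer (10x): {on_wafer_10x:.4f} J per GB")
print(f"On-wafer (100x): {on_wafer_100x:.4f} J per GB")
```

The per-transfer numbers look small, but training repeats transfers like this constantly across every device, so the ratio compounds into a meaningful share of the power budget.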

Cerebras’s WSE line is a concrete example: 4 trillion transistors, which the analysis puts at about 14x Nvidia Blackwell’s count, and roughly 7000x the on-device memory bandwidth, plus stacked systems of 16 wafers reaching 64 trillion transistors. Multibeam’s multi-column e-beam lithography is pitched as a way to bypass the reticle entirely by writing patterns directly across an 8-inch wafer.

By integrating huge amounts of compute and SRAM on a single wafer and linking them with a dense fabric, a wafer-scale device delivers extreme on-device memory bandwidth. That is why you often see claims of multi-petabyte-per-second bandwidth and large step-ups versus GPU systems that must aggregate many separate packages. The core idea is locality: keep model weights and activations close to the compute so signals do not leave the wafer, which avoids the slow, power-hungry off-chip cabling that limits conventional GPU clusters.

📡The main points from the newly released report “AI progress and recommendations” from OpenAI.

Models already beat top humans on some hard problems and look roughly 80% of the way to an AI researcher, with task scope expanding from seconds to 60+ minutes and heading toward multi-day work. Near-term systems may make small discoveries by 2026 and larger discoveries after 2028, affecting science, health, climate modeling, software, and education.

Daily life may feel stable because tools slot into routines, but underlying capability and access will keep compounding. The safety focus is on preventing catastrophic failure from superintelligent systems by proving alignment and control before any deployment.

Frontier labs should share safety research, adopt common control evaluations, and reduce race dynamics so risks are measured the same way. Public oversight should match capability, with light touch for today’s mainstream uses and tighter coordination if systems approach self improvement, including strict biosecurity limits.

Build an AI resilience ecosystem like cybersecurity, with standards, monitoring, testing, incident response, and commercial support to keep risk within tolerable bounds. Track real world impact through regular reporting on jobs, safety incidents, misuse, and benefits, since measurement beats speculation. Keep user empowerment central, treat advanced AI access like a utility, protect privacy, and let adults choose how to use these tools within broad social rules.

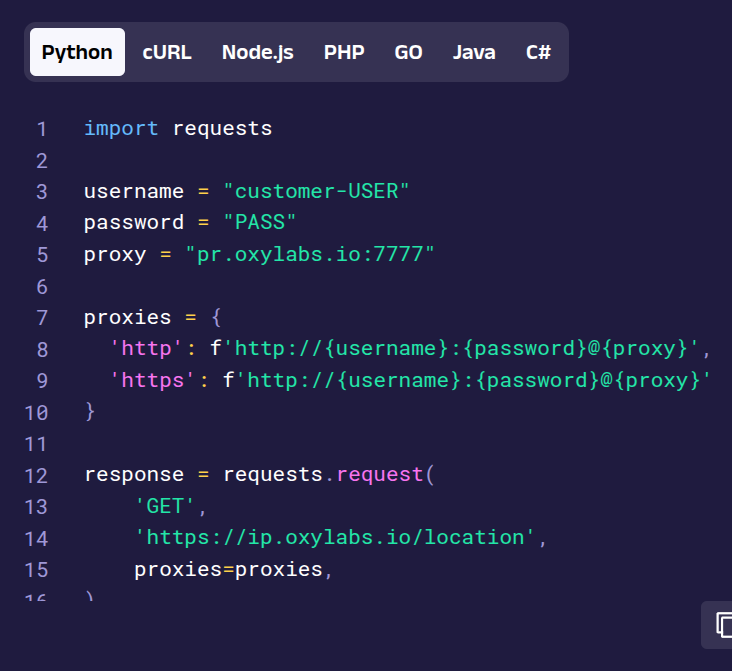

🛠️ Today’s Sponsor: Oxylabs, for web scraping at scale without wasting resources or getting IP-blocked.

Web scraping at scale can be complex, especially when dealing with diverse website structures and anti-scraping measures. 🤔

Sites that block bots often need residential proxies and header rotation, so plugging in a proxy provider like Oxylabs with rotating sessions helps keep requests reliable. Oxylabs operates over 175 million residential IPs, making it the largest ethical residential proxy network in the market.

There are several ways to go about web scraping, and one of them is to use an API. It’s like a remote web scraper – you send a request to the API with the URL and other parameters like language, geolocation, or device type, and your job is done.
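As a rough illustration of the "send a URL plus parameters" pattern, the sketch below builds such a request without sending it. The endpoint and field names (`source`, `geo_location`, `user_agent_type`) reflect my understanding of the Oxylabs Web Scraper API and should be treated as assumptions to verify against the official documentation. The credentials are placeholders.

```python
import base64
import json
import urllib.request

# Assumed endpoint; confirm against the provider's current documentation.
API_URL = "https://realtime.oxylabs.io/v1/queries"


def build_scrape_request(target_url, username, password,
                         geo_location="United States",
                         device_type="desktop"):
    """Build (but do not send) a POST request describing one scrape job."""
    payload = {
        "source": "universal",           # assumed name for generic URL scraping
        "url": target_url,
        "geo_location": geo_location,    # assumed geolocation field
        "user_agent_type": device_type,  # assumed device-type field
    }
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )


req = build_scrape_request("https://example.com", "USER", "PASS")
# urllib.request.urlopen(req) would send it; omitted so the sketch runs offline.
```

Keeping request construction separate from sending makes it easy to log or inspect the job description before any traffic leaves your machine.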

👉 What Oxylabs Web Scraper API does for me:

Generates scraping requests and parsing instructions

Identifies complex patterns using powerful language models

Produces ready-to-use code based on natural language prompts

Optimizes proxies with advanced configuration techniques, bandwidth management, and integration strategies

Parses raw HTML into structured data, unblocks any website, and scales through its Web Scraper API platform.

OpenAI’s production-ready Agents SDK now pairs cleanly with Oxylabs’ Web Scraper API.

So AI agents pull fresh web data at a capped $1.35 per 1K results, which makes real-time retrieval cheaper and simpler for multi-step workflows. Oxylabs provides an MCP server that exposes scraping as a standard tool, so the agent can request a URL, render JavaScript, handle geo targeting, and get structured, LLM-ready content.

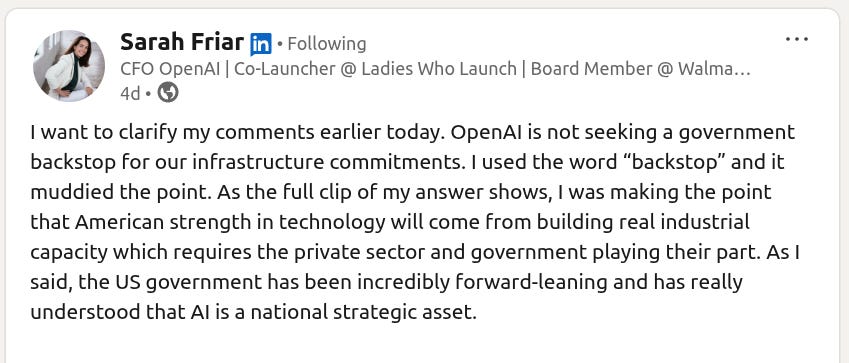

👨‍🔧 OpenAI’s Sam Altman backtracks on CFO’s government ‘backstop’ talk - What really happened

Sam Altman said OpenAI does not have or want government guarantees for its data centers, following CFO Sarah Friar’s “backstop” remark. At the same time, the company is pushing to expand the 25% Advanced Manufacturing Investment Credit (AMIC) to include AI server production, AI data centers, and key grid gear like transformers and HVDC converters. That is a different kind of policy support, focused on manufacturing and infrastructure rather than bailouts.

Tech stocks had their worst week since April as AI names shed close to $1 trillion in value, showing how sensitive the market is to the financing and policy signals around AI infrastructure.

CFO Sarah Friar clarified that OpenAI is not seeking a government backstop for its infrastructure, saying the word choice muddied the point about public and private roles in building industrial capacity.

Altman’s post goes further by saying governments could build and own their own AI infrastructure or offtake compute, but that any upside should flow to the state if it funds such assets, which keeps the line between public capacity and private benefit clear.

He also drew a boundary between discussing loan guarantees for new U.S. chip fabs versus refusing guarantees for OpenAI’s private data centers, which matches reporting that any government support they contemplated is about domestic semiconductor manufacturing rather than their own facilities.

OpenAI’s official submission to the White House asks to broaden AMIC eligibility to the semiconductor supply chain, AI servers, and AI data centers, and it asks for grants, loans, or loan guarantees to speed production of transformers, HVDC converters, switchgear, and cables, which aims to cut multi-year lead times in the grid bottlenecks that slow compute buildouts.

AMIC today is a 25% tax credit for facilities whose primary purpose is making semiconductors or semiconductor manufacturing equipment, so OpenAI is effectively asking to place large scale AI inference infrastructure in the same policy lane that chip fabs already occupy.

The scale behind these asks is huge, since OpenAI says it has about $1.4 trillion in infrastructure commitments over 8 years and expects to exit 2025 at $20 billion annualized revenue, with added plans to sell raw “AI cloud” compute to others to help fund the buildout.
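Dividing out the figures above makes the gap between commitments and revenue easier to see. This is plain arithmetic on the quoted numbers and assumes, purely for illustration, that the commitments are spread evenly across the 8 years.

```python
commitments_usd = 1_400_000_000_000  # about $1.4 trillion, as quoted above
years = 8
annualized_revenue_usd = 20_000_000_000  # expected 2025 exit run rate

# Even-spread assumption: $175 billion of commitments per year.
annual_commitment = commitments_usd / years

# Yearly commitments as a multiple of annualized revenue: 8.75x.
commitment_to_revenue = annual_commitment / annualized_revenue_usd

print(f"Average commitment per year: ${annual_commitment / 1e9:.0f}B")
print(f"Multiple of annualized revenue: {commitment_to_revenue:.2f}x")
```

The real spending schedule is unlikely to be flat, but even this crude average shows why financing language draws so much scrutiny.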

None of this calmed the market anxiety, with the Nasdaq logging its worst week since April and investors questioning whether capex and financing models can keep pace with profits, which explains why wording like “backstop” triggered such a sharp reaction.

Concentration risk adds to the nerves, since the Magnificent 7 now account for >30% of the S&P 500 by value, a level that draws frequent comparisons to the dot-com era and magnifies any AI-driven drawdowns across the index.

Policy signals are also firming, because the White House AI chief David Sacks said there will be no federal bailout for AI, which lines up with Altman’s statement that OpenAI should be allowed to fail if it gets its bets wrong.

A bailout or loan guarantee for a private data center shields one company’s balance sheet while a tax credit or grant for factories and grid equipment lowers systemwide costs to produce hardware and power, which benefits any firm that later buys servers or plugs into the grid.

Analysts are split, with one camp seeing these giant commitments as rational bets on downstream profitability and another warning about “circular financing” between chip suppliers, hyperscalers, and model labs that could prop up unprofitable lines to keep demand for chips high.

That’s a wrap for today, see you all tomorrow.