When is ANOVA preferred over the t-test?

The short answer: if we have more than two groups to compare, we prefer ANOVA over the t-test.

The important point to note, however, is that we can technically compare more than two groups with t-tests by running a series of pairwise tests. Doing so, though, increases the chance of making a Type I error, because the Type I error rate compounds with every additional test. We can avoid this by running a single test instead: ANOVA.

Let's expand on this concept in detail.

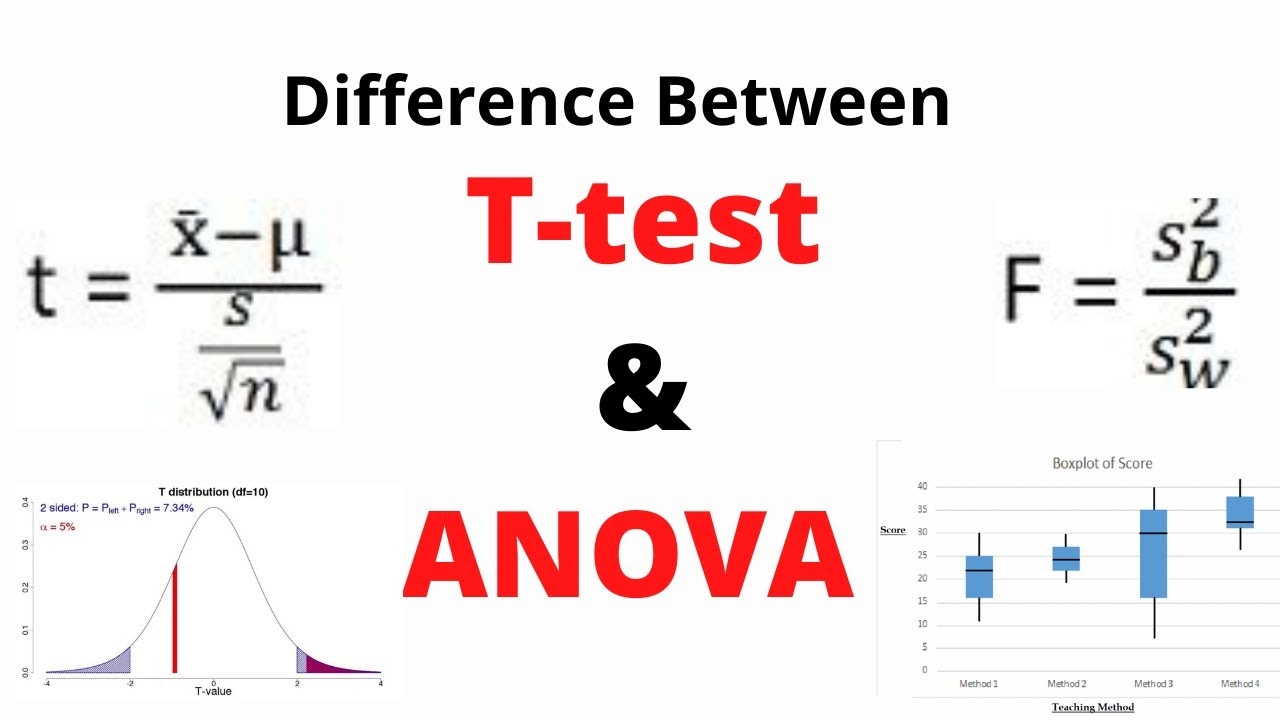

Both ANOVA (Analysis of Variance) and the t-test are used to determine whether there is a significant difference between group means: the t-test compares two groups, while ANOVA can compare two or more.

Now, coming back to our main point: ANOVA is preferred over the t-test when there are more than two groups to compare, because conducting multiple pairwise t-tests increases the likelihood of making a Type I error.

A Type I error, also known as a false positive, occurs when you reject a null hypothesis that is actually true (i.e., you conclude that there is a significant difference between the groups when there is none).

For example, say you get tested for COVID-19 because of mild symptoms. Two kinds of error could occur. Type I error (false positive): the test says you have COVID-19, but you actually don't. Type II error (false negative): the test says you don't have COVID-19, but you actually do.

The probability of making a Type I error is the significance level, alpha (α), which is usually set at 0.05 (5%). This means that if the null hypothesis is true, there is a 5% chance that the test will wrongly reject it.

When comparing multiple groups using individual t-tests, the probability of making at least one Type I error increases. This is because each t-test has its own α level, and the probability of making a Type I error accumulates across all the tests.

For example, let's say you have 4 groups (A, B, C, D) and want to compare their means. To do this using t-tests, you would need to conduct 6 pairwise comparisons (A-B, A-C, A-D, B-C, B-D, and C-D). With each t-test having a 5% chance of making a Type I error, the probability of making at least one Type I error across all 6 t-tests is:

1 - (1 - α)^number_of_tests = 1 - (1 - 0.05)^6 ≈ 0.265 (26.5%)

Explanation of the math above

The term (1 - α) represents the probability of not making a Type I error for each individual test.

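When this term is raised to the power of the number of tests (6 here), it gives the probability of making no Type I errors across all 6 tests. This step treats the tests as independent; pairwise t-tests that share groups are somewhat correlated, so 26.5% is an approximation of the true rate.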

Subtracting this value from 1 gives us the probability of making at least one Type I error across all 6 tests. In other words, it is the complement of the probability of not making any Type I errors.
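The arithmetic above can be reproduced in a few lines of Python. This is a minimal sketch: `familywise_error_rate` is a hypothetical helper name, and, like the formula, it assumes the tests are independent. It also enumerates the pairwise comparisons for groups A to D and shows how the rate grows with the number of groups k (k choose 2 tests).

```python
import math
from itertools import combinations

def familywise_error_rate(alpha: float, num_tests: int) -> float:
    """Probability of at least one Type I error across num_tests
    independent tests, each run at significance level alpha."""
    return 1 - (1 - alpha) ** num_tests

groups = ["A", "B", "C", "D"]
pairs = list(combinations(groups, 2))  # every pairwise comparison
print(len(pairs), pairs)
# 6 [('A', 'B'), ('A', 'C'), ('A', 'D'), ('B', 'C'), ('B', 'D'), ('C', 'D')]

fwer = familywise_error_rate(0.05, len(pairs))
print(f"{fwer:.3f}")  # 0.265

# How the rate grows with the number of groups k
for k in range(2, 7):
    m = math.comb(k, 2)  # number of pairwise t-tests for k groups
    print(f"k={k}, tests={m}, P(at least one Type I error)={familywise_error_rate(0.05, m):.3f}")
```

Running the loop shows the rate climbing quickly: roughly 14% with three groups, about 26.5% with four, 40% with five, and 54% with six.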

As you can see, the probability of making at least one Type I error has grown from 5% for a single t-test to about 26.5% across the six t-tests.
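To see the inflation empirically rather than from the formula, the sketch below simulates four groups drawn from the same population, so the null hypothesis is true and every rejection is a false positive. To stay within Python's standard library, it uses two-sample z-tests with a known standard deviation of 1 as a stand-in for t-tests; the function name, group size (n = 20), number of repetitions, and seed are all illustrative assumptions.

```python
import math
import random
from itertools import combinations
from statistics import NormalDist, fmean

def simulate_error_rates(num_groups=4, n=20, alpha=0.05, reps=5000, seed=0):
    """Draw every group from the SAME normal distribution (null is true),
    run all pairwise two-sample z-tests (stand-in for t-tests, sigma = 1
    assumed known), and count false positives."""
    rng = random.Random(seed)
    std_normal = NormalDist()
    se = math.sqrt(2 / n)  # standard error of a difference in means when sigma = 1
    single_rejections = 0  # false positives on the first pair only
    family_rejections = 0  # runs with at least one false positive
    for _ in range(reps):
        means = [fmean(rng.gauss(0, 1) for _ in range(n)) for _ in range(num_groups)]
        rejected = []
        for i, j in combinations(range(num_groups), 2):
            z = (means[i] - means[j]) / se
            p = 2 * (1 - std_normal.cdf(abs(z)))  # two-sided p-value
            rejected.append(p < alpha)
        single_rejections += rejected[0]
        family_rejections += any(rejected)
    return single_rejections / reps, family_rejections / reps

single, family = simulate_error_rates()
print(f"single test: {single:.3f}, at least one of 6 tests: {family:.3f}")
```

The single-test rate should sit near 5%, while the rate of at least one false positive across the six tests should land well above it. Expect it to come in somewhat below 26.5%: the pairwise tests share groups, so they are not independent, which is the assumption behind the formula.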

To control for this increased risk of Type I error, we use ANOVA, which allows us to compare the means of multiple groups simultaneously while maintaining a single α level.

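ANOVA does this by partitioning the total variance in the data into two sources: between-group variance (how much the group means differ from the overall mean) and within-group variance (how much individual observations vary around their own group's mean).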

It then calculates an F-statistic, the ratio of the between-group variance to the within-group variance (formally, the between-group mean square divided by the within-group mean square). If the F-statistic is large enough that the corresponding p-value is below the chosen α level (e.g., 0.05), we reject the null hypothesis and conclude that at least one group mean differs significantly from the others.
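The partition and the F-statistic can be computed directly. The sketch below is a plain-Python one-way ANOVA; `one_way_anova_f` and the three small groups are hypothetical, chosen so the arithmetic is easy to check by hand (group means 5, 7 and 10; between-group mean square 19; within-group mean square 1). It computes only the statistic; turning F into a p-value needs the F distribution, which `scipy.stats.f_oneway` handles (reporting it alongside F) if SciPy is available.

```python
from statistics import fmean

def one_way_anova_f(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    all_values = [x for g in groups for x in g]
    grand_mean = fmean(all_values)
    k, n_total = len(groups), len(all_values)

    # Between-group sum of squares: how far each group mean sits from the grand mean
    ss_between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: spread of observations around their own group mean
    ss_within = sum((x - fmean(g)) ** 2 for g in groups for x in g)

    df_between, df_within = k - 1, n_total - k
    ms_between = ss_between / df_between  # between-group variance (mean square)
    ms_within = ss_within / df_within     # within-group variance (mean square)
    return ms_between / ms_within, df_between, df_within

# Hypothetical data: three small groups
f_stat, df_b, df_w = one_way_anova_f([4, 5, 6], [6, 7, 8], [9, 10, 11])
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")  # F(2, 6) = 19.00
```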

In summary, the ANOVA test is preferred over the t-test when comparing the means of more than two groups because it:

Maintains a single α level, controlling for the increased risk of making a Type I error when conducting multiple comparisons.

Allows for simultaneous comparison of multiple groups, making it more efficient and easier to interpret than conducting multiple pairwise t-tests.

Remember, though, that if ANOVA detects a significant difference among the group means, it does not tell you which groups differ. You may still need to run post-hoc tests (e.g., Tukey's HSD, Bonferroni-corrected pairwise t-tests, or Dunnett's test) to identify the specific pairs of groups whose means differ significantly. These post-hoc procedures adjust for multiple comparisons to keep the overall Type I error rate under control.
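As an illustration of how such adjustments work, the Bonferroni correction is the simplest: each of the m pairwise tests is run at α / m (equivalently, each p-value is multiplied by m, capped at 1). The sketch below uses hypothetical helper names and made-up p-values purely for illustration; for Tukey's HSD, recent SciPy versions provide `scipy.stats.tukey_hsd`.

```python
from itertools import combinations

def bonferroni_alpha(alpha: float, num_tests: int) -> float:
    """Per-test significance level under the Bonferroni correction."""
    return alpha / num_tests

def bonferroni_adjust(p_values):
    """Multiply each p-value by the number of tests, capping at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]

alpha = 0.05
num_tests = len(list(combinations("ABCD", 2)))  # 6 pairwise comparisons
per_test = bonferroni_alpha(alpha, num_tests)
print(f"per-test alpha: {per_test:.4f}")  # 0.0083

# Bonferroni keeps the familywise rate at or below alpha;
# if the tests were independent it would work out to about 4.9%
familywise = 1 - (1 - per_test) ** num_tests
print(f"familywise rate: {familywise:.4f}")  # 0.0490

# Made-up raw p-values for the 6 pairs, for illustration only
raw_p = [0.004, 0.02, 0.30, 0.01, 0.60, 0.04]
adjusted = bonferroni_adjust(raw_p)
significant = [p < alpha for p in adjusted]
print(significant)  # [True, False, False, False, False, False]
```

Note how pairs that would pass an unadjusted 0.05 threshold (p = 0.02, 0.01, 0.04) no longer count as significant once the correction is applied; that is the price paid for keeping the overall Type I error rate near 5%.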

For more on machine learning, check out my YouTube channel.