🚨 Why Nvidia spends $20B to lock down inference future

Nvidia locks down inference with $20B Groq deal, keeps infra lead, and Liquid AI’s tiny model nails 42% on GPQA.

Read time: 7 min


(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (26-Dec-2025):

💰 Nvidia will keep the AI infrastructure cost edge.

🚨 Deep Dive: Why Nvidia spends $20B to lock down inference future, neutralize Google TPU threat with a non-exclusive license to Groq’s breakthrough inference technology.

🏆 Liquid AI’s LFM2-2.6B-Exp got 42% in GPQA - incredible for a 2.6B param model.

💰 Nvidia will keep the AI infrastructure cost edge.

As models scale, GPUs spend more time waiting for data, so bisection bandwidth, throughput across the cluster’s “middle”, and collective communication like all-reduce become decisive. Tensor processing units (TPUs) and other application-specific integrated circuits (ASICs) work well for narrower jobs, but pod boundaries and bandwidth can cap frontier-scale growth.
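To make the communication point concrete, here is a back-of-envelope sketch of the common bandwidth-only cost model for a ring all-reduce. The gradient size, GPU count, and link speeds are illustrative assumptions, not figures from any vendor, and latency terms are ignored.

```python
def ring_allreduce_seconds(payload_bytes: float, num_gpus: int,
                           link_bytes_per_s: float) -> float:
    """Bandwidth-only estimate for a ring all-reduce (latency terms ignored)."""
    if num_gpus < 2:
        return 0.0
    # In a ring, each GPU sends (and receives) 2 * (N - 1) / N of the payload.
    traffic = 2 * (num_gpus - 1) / num_gpus * payload_bytes
    return traffic / link_bytes_per_s


# Hypothetical numbers: 10 GB of gradients across 8 GPUs.
GRAD_BYTES = 10e9
fast = ring_allreduce_seconds(GRAD_BYTES, 8, 400e9)  # assumed 400 GB/s link
slow = ring_allreduce_seconds(GRAD_BYTES, 8, 50e9)   # assumed 50 GB/s link
print(f"fast link: {fast * 1e3:.1f} ms, slow link: {slow * 1e3:.1f} ms")
```

In this simple model the time scales inversely with link bandwidth, which is why interconnect speed starts to matter as much as raw compute once models are spread across many devices.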

Chip-on-wafer-on-substrate (CoWoS) packaging is the bottleneck, and Nvidia pre-booked over 60% as capacity grows from 652 in 2025 to 1,550 in 2027. GB300 is a smooth upgrade from GB200, and Vera Rubin is positioned as the next cost-curve reset for both training and inference.

Google’s issue is unit economics, because ad search is ultra-cheap while chatbot sessions with 5 to 10 turns can cost about 100x more. Since 10% to 20% of queries drive 60% to 70% of search revenue, moving that slice to AI answers demands a new trust and pricing model. OpenAI will benefit from subscriptions and APIs that monetize quality, and for it, engagement minutes and a consumer/enterprise mix near 60/40 matter more than monthly active users (MAUs, the number of unique users per month).

🚨 Deep Dive: Why Nvidia spends $20B to lock down inference future, with a non-exclusive license to Groq’s breakthrough inference technology.

🧾 What actually happened with Nvidia and Groq

On Dec 24, 2025, Groq announced a non-exclusive inference technology licensing agreement with Nvidia. Groq also said Jonathan Ross (Groq’s founder) plus other leaders are moving over to Nvidia as part of the deal, and Groq will keep operating as an independent company.

The reported $20B number covers 2 things: Groq’s inference intellectual property, and the people who know how to scale it.

It’s part of a broader pattern where big tech gets the asset (and the team) without doing a clean merger. Now the number that makes the strategy pop: Groq’s most recent valuation was reported at $6.9B after a September 2025 funding round, which makes the rumored $20B size feel like about 3x in simple terms, even if the exact price isn’t officially confirmed in the press release itself.

At a high level, 𝗟𝗣𝗨(𝗟𝗮𝗻𝗴𝘂𝗮𝗴𝗲 𝗣𝗿𝗼𝗰𝗲𝘀𝘀𝗶𝗻𝗴 𝗨𝗻𝗶𝘁) is a 𝗰𝘂𝘀𝘁𝗼𝗺 𝗔𝗦𝗜𝗖, purpose-built for large language model inference - not a GPU retrofit.

Two architectural ideas really stood out:

𝟭. 𝗦𝗶𝗻𝗴𝗹𝗲 𝗜𝗻𝘀𝘁𝗿𝘂𝗰𝘁𝗶𝗼𝗻, 𝗠𝘂𝗹𝘁𝗶𝗽𝗹𝗲 𝗗𝗮𝘁𝗮 (𝗦𝗜𝗠𝗗) - 𝗮𝘁 𝘀𝗰𝗮𝗹𝗲

The LPU follows a single instruction, multiple data execution model.

What this means in practice:

• The entire chip executes the 𝘀𝗮𝗺𝗲 𝗶𝗻𝘀𝘁𝗿𝘂𝗰𝘁𝗶𝗼𝗻 𝗮𝘁 𝘁𝗵𝗲 𝘀𝗮𝗺𝗲 𝘁𝗶𝗺𝗲.

• Different parts of the chip operate on 𝗱𝗶𝗳𝗳𝗲𝗿𝗲𝗻𝘁 𝗱𝗮𝘁𝗮 𝘀𝗹𝗶𝗰𝗲𝘀.

• There's 𝗻𝗼 𝗱𝗶𝘃𝗲𝗿𝗴𝗲𝗻𝗰𝗲 in execution paths.

Unlike GPUs, where different threads may branch, stall, or wait, the LPU moves forward in 𝗹𝗼𝗰𝗸𝘀𝘁𝗲𝗽. This is especially powerful for transformer workloads, which are mostly matrix operations and attention patterns.

𝗥𝗲𝘀𝘂𝗹𝘁: Maximum hardware utilization with zero control-flow overhead.
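Here is a toy sketch of the lockstep idea in plain Python: one shared instruction stream, applied to several data lanes, with a would-be branch replaced by a per-lane select. This illustrates the general SIMD concept only. It is not Groq's instruction set, and every name in it is hypothetical.

```python
from typing import Callable, List

Lane = List[float]


def run_lockstep(program: List[Callable[[float], float]], lanes: Lane) -> Lane:
    """Apply each instruction to every lane before moving to the next one."""
    for instruction in program:                  # one shared instruction stream
        lanes = [instruction(x) for x in lanes]  # every lane runs the same op
    return lanes


def relu(x: float) -> float:
    # Branch-free select: every lane runs the same max(), so nothing diverges.
    return max(x, 0.0)


program = [lambda x: x * 2.0, lambda x: x - 3.0, relu]
result = run_lockstep(program, [0.5, 1.5, 2.0, 4.0])
print(result)
```

Each lane holds a different data slice, but no lane ever takes a different path through the program, which is the property the bullets above describe.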

𝟮. 𝗗𝗲𝘁𝗲𝗿𝗺𝗶𝗻𝗶𝘀𝘁𝗶𝗰 𝗘𝘅𝗲𝗰𝘂𝘁𝗶𝗼𝗻 (𝗡𝗼 𝗥𝘂𝗻𝘁𝗶𝗺𝗲 𝗔𝗿𝗯𝗶𝘁𝗿𝗮𝘁𝗶𝗼𝗻)

One of the most important differences from GPUs is that the LPU is 𝗳𝘂𝗹𝗹𝘆 𝗱𝗲𝘁𝗲𝗿𝗺𝗶𝗻𝗶𝘀𝘁𝗶𝗰: no runtime scheduling, no dynamic kernel launches, no contention between threads, and no arbitration for shared resources.

Every operation is 𝗰𝗼𝗺𝗽𝗶𝗹𝗲𝗱 𝗮𝗵𝗲𝗮𝗱 𝗼𝗳 𝘁𝗶𝗺𝗲, and the execution plan is known 𝘦𝘹𝘢𝘤𝘵𝘭𝘺 before the model runs.

This means:

Latency is 𝗽𝗿𝗲𝗱𝗶𝗰𝘁𝗮𝗯𝗹𝗲, performance 𝗱𝗼𝗲𝘀 𝗻𝗼𝘁 𝗳𝗹𝘂𝗰𝘁𝘂𝗮𝘁𝗲 𝘂𝗻𝗱𝗲𝗿 𝗹𝗼𝗮𝗱, and tail latency is 𝗱𝗿𝗮𝗺𝗮𝘁𝗶𝗰𝗮𝗹𝗹𝘆 𝗿𝗲𝗱𝘂𝗰𝗲𝗱.
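A minimal sketch of what "compiled ahead of time" can look like: if every operation has a fixed cost, the start cycle of each step and the total latency are known before anything runs. The op names and cycle counts below are made up for illustration and say nothing about Groq's actual compiler.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Op:
    name: str
    cycles: int  # fixed cost assumed to be known at compile time


def compile_schedule(ops: List[Op]) -> List[Tuple[int, str]]:
    """Assign every op a fixed start cycle ahead of time."""
    schedule, clock = [], 0
    for op in ops:
        schedule.append((clock, op.name))
        clock += op.cycles
    schedule.append((clock, "done"))  # final entry carries the total latency
    return schedule


ops = [Op("load_weights", 4), Op("matmul", 16), Op("softmax", 6), Op("store", 2)]
schedule = compile_schedule(ops)
total_cycles = schedule[-1][0]
for start, name in schedule:
    print(f"cycle {start:>3}: {name}")
```

Because nothing is decided at run time, compiling the same program twice gives the same plan and the same total, which is where the predictable latency comes from.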

💸 Why inference is where the money leaks out

Training is expensive, but it is also “bursty.” You train a model, you finish, and you might not retrain for a while. Even if you do regular updates, the training cost is still an upfront capital cost that happens in chunks.

Inference is different. Inference is every single user request, every token generated, every agent step, every autocomplete, every voice reply. It is an always-on operating cost that grows with usage. That is why a small efficiency difference in inference hardware can turn into a huge business difference over time.
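A rough cost model makes the difference visible: training is a one-off term, while inference is a term multiplied by usage and time. Every number here is hypothetical and chosen only to show the shape of the math.

```python
def cumulative_cost(training_cost: float, cost_per_million_tokens: float,
                    tokens_per_day: float, days: int) -> float:
    """One-off training spend plus inference spend that scales with usage."""
    inference = cost_per_million_tokens * tokens_per_day / 1e6 * days
    return training_cost + inference


# Hypothetical inputs, not taken from any company's financials.
TRAINING = 50e6          # one-time training bill, in dollars
TOKENS_PER_DAY = 500e9   # tokens served per day
baseline = cumulative_cost(TRAINING, 1.00, TOKENS_PER_DAY, 365)
cheaper = cumulative_cost(TRAINING, 0.90, TOKENS_PER_DAY, 365)
print(f"yearly saving from 10% cheaper inference: ${baseline - cheaper:,.0f}")
```

Under these assumptions a 10% drop in cost per token saves a sum in the same order of magnitude as the training bill within a single year, and the saving keeps growing with traffic.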

This is also why the Nvidia-Groq move lines up with Nvidia’s own messaging that the market is shifting from training toward inference. That shift is explicitly called out in the recent reporting around the deal.

⚙️ What Groq’s LPU (Language Processing Unit) is really doing differently

A GPU is built to be flexible. It’s great at chewing through big piles of parallel work, and training loves that because training is basically giant matrix math over and over, with lots of parallel operations.

Inference for Large Language Models (LLMs) has a different shape. You still do matrix math, but the output arrives token-by-token, and a lot of real products care about consistent response time. The user sees “time to first token” and “time between tokens.” That “smoothness” matters as much as raw throughput.
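Both user-facing metrics can be computed from nothing more than token arrival timestamps. This is a small sketch with made-up timestamps. It assumes at least two tokens arrive, and the function name is hypothetical.

```python
from statistics import mean
from typing import List, Tuple


def latency_metrics(request_sent: float,
                    token_times: List[float]) -> Tuple[float, float, float]:
    """Return (time to first token, mean gap, worst gap) in seconds."""
    ttft = token_times[0] - request_sent
    gaps = [b - a for a, b in zip(token_times, token_times[1:])]
    return ttft, mean(gaps), max(gaps)


# Hypothetical arrival timestamps (seconds) for one streamed response.
sent = 0.0
arrivals = [0.25, 0.30, 0.35, 0.55, 0.60]
ttft, mean_gap, worst_gap = latency_metrics(sent, arrivals)
print(f"TTFT {ttft:.2f}s, mean gap {mean_gap * 1e3:.0f} ms, "
      f"worst gap {worst_gap * 1e3:.0f} ms")
```

The worst gap is the "smoothness" number: a single long stall between tokens is what a user notices, even when the average looks fine.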

Groq’s core pitch is that its chips are designed for inference, with an execution style that’s meant to be predictable. Groq avoids external high-bandwidth memory by using on-chip SRAM, and it calls out the tradeoff clearly: you can get faster interactions, but you also limit the size of the model you can serve.

That’s a key technical point, and it’s easy to miss. SRAM is very fast memory placed on the chip. High Bandwidth Memory (HBM) is also fast, but it sits off the compute die and it is part of a broader supply chain. SRAM can be faster and more predictable, but you can’t fit giant models into a small amount of on-chip memory, so you end up spreading the model across many chips.

So the “weird” tradeoff looks like this: less memory per chip, but very fast access to the memory that exists. That can be a strength if your product lives and dies on latency. That same tradeoff can be painful if you want to host very large models on a single box with minimal networking complexity.
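The tradeoff is easy to put into numbers. The sketch below counts the minimum devices needed just to hold a model's weights, ignoring activations and KV cache. The model size, the per-chip SRAM figure, and the per-GPU HBM figure are all assumptions for illustration.

```python
import math


def chips_needed(params: float, bytes_per_param: float,
                 memory_bytes_per_chip: float) -> int:
    """Minimum chips to hold the weights alone (no activations or KV cache)."""
    return math.ceil(params * bytes_per_param / memory_bytes_per_chip)


# Assumed figures: a 70B-parameter model stored at 1 byte per weight,
# a few hundred MB of SRAM per chip, and tens of GB of HBM per GPU.
SRAM_PER_CHIP = 230e6  # assumption
HBM_PER_GPU = 80e9     # assumption
sram_chips = chips_needed(70e9, 1, SRAM_PER_CHIP)
hbm_gpus = chips_needed(70e9, 1, HBM_PER_GPU)
print(f"SRAM-only chips: {sram_chips}, HBM GPUs: {hbm_gpus}")
```

With these inputs the SRAM-only design needs hundreds of chips where one HBM device would hold the weights, which is why chip-to-chip scaling becomes the thing to get right.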

🔒 The real strategic move: owning the exit ramp

The biggest threat to Nvidia is not “another chip is slightly faster.” The bigger threat is that serious buyers stop treating Nvidia as the default.

The moment a major buyer builds custom silicon, or commits heavily to a non-Nvidia inference stack, the conversation changes inside every other big buyer. It becomes a spreadsheet exercise: “Do we keep paying the Nvidia tax, or do we build, or do we switch?” Even if the answer is still “we keep buying Nvidia,” the fact that the question is now rational to ask weakens the grip.

That’s why this Groq deal is strategically sharp. If you’re Nvidia, you don’t want Groq to be the clean escape hatch for inference. You want Groq to be a product path you control. After the licensing deal, the buyer’s menu becomes much less scary for Nvidia.

A serious AI buyer now looks at 3 realistic paths. They can stay on Nvidia GPUs for everything. They can use Nvidia GPUs for training and still stay under Nvidia’s umbrella for the Groq-style inference approach, because Nvidia has the licensed tech and the incoming team. Or they can start from scratch with internal silicon or a different vendor, which is slow, expensive, and risky.

That is the lock-in point. CUDA is still a giant software gravity field, and the deal creates a scenario where even “choosing the alternative” can still mean you’re choosing Nvidia. You can see analysts taking that exact “CUDA + Groq LPU” angle in the immediate post-deal coverage.

There’s also a quieter point here about packaging the offering. If Nvidia can sell “training stack” and “inference stack” as a single procurement relationship, it makes it harder for competitors to wedge in. Buyers hate running 6 vendors unless they have to. They will pay extra for fewer moving pieces, as long as performance and cost stay acceptable.

💾 The hidden part: memory got expensive, fast

This part is the sneaky one, and it matters because memory is not just “a component.” Memory can be a throttle on the entire AI buildout.

In Nov 2025, Samsung reportedly raised prices for some memory chips by up to 60% compared to September, and the reporting includes concrete DDR5 module pricing jumps like $149 to $239 for a 32GB DDR5 module in that window. That’s not a gentle cycle. That’s a shock.

TrendForce tracked the spot market too, and it reported DDR5 chip spot prices up 307% since the start of September 2025. Then on Dec 11, 2025, TrendForce said memory prices are projected to rise again in Q1 2026, and that device makers are already getting pushed into raising prices and cutting specs.
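For a quick sanity check on those figures, the arithmetic below uses only the prices and percentages quoted above. The helper names are mine.

```python
def pct_increase(old: float, new: float) -> float:
    """Percentage increase from old to new."""
    return (new - old) / old * 100


def multiplier_from_pct(pct: float) -> float:
    """A price that is 'up X%' costs (1 + X / 100) times the old price."""
    return 1 + pct / 100


module_jump = pct_increase(149, 239)      # 32GB DDR5 module prices quoted above
spot_multiple = multiplier_from_pct(307)  # DDR5 spot price change quoted above
print(f"module: +{module_jump:.1f}%, spot: {spot_multiple:.2f}x")
```

The $149 to $239 move works out to roughly 60%, consistent with the "up to 60%" headline, and "up 307%" means spot prices are about 4x where they started.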

Why is this happening? Because AI data centers are vacuuming up high-speed memory. High Bandwidth Memory (HBM) is a big part of that. And making HBM is resource-intensive. A recent report on Micron’s outlook quotes Micron saying tight conditions are expected to persist “through and beyond” 2026, and it also highlights that HBM uses 3x the silicon wafers compared to standard DRAM.

TrendForce goes even further on the “capacity gets eaten” idea. It cited projections that when you adjust for the wafer usage of high-speed memory like HBM and GDDR7, AI could effectively consume nearly 20% of global DRAM supply capacity in 2026.
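One way to see how a wafer adjustment changes the picture is sketched below. It uses the 3x wafer figure quoted above, read as wafers per bit. The share of bits going to HBM is a made-up input, and this is not TrendForce's actual method, which also covers GDDR7.

```python
def wafer_share(bit_share: float, wafer_factor: float) -> float:
    """Share of wafer capacity used by a product holding `bit_share` of bits
    when it needs `wafer_factor` times the wafers per bit of everything else."""
    weighted = bit_share * wafer_factor
    return weighted / (weighted + (1 - bit_share))


HBM_BIT_SHARE = 0.08  # hypothetical: 8% of DRAM bits shipped as HBM
share = wafer_share(HBM_BIT_SHARE, 3.0)
print(f"{HBM_BIT_SHARE:.0%} of bits -> {share:.1%} of wafer capacity")
```

The point of the sketch is the direction of the effect: a product that looks small when you count bits can take a much larger slice of capacity when you count wafers.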

Now connect that back to Nvidia. Nvidia’s big AI accelerators are tightly coupled to HBM availability. If HBM is scarce, the entire Nvidia supply chain feels it. If HBM is expensive, Nvidia’s bill of materials gets pressure, and customers feel it too.

Groq’s approach sits in a weird spot here, and that’s why it’s suddenly attractive. Groq inference doesn’t use external high-bandwidth memory, relying on on-chip SRAM instead. So if the world is constrained by HBM supply, owning an architecture that avoids that constraint is a practical hedge. Not perfect, not universal, but real.

And yes, there’s a catch, and it’s an important catch. When you keep memory on-chip, you limit how much model you can pack per chip, which limits the size of the model that can be served. So the hedge works best when you can scale across many chips cleanly and you’re focused on real-time inference, not “fit the biggest model on a single device.”

🧩 What this does to every buyer’s decision

If you’re running a serious AI product, your hardware decision is usually 2 questions.

Question 1 is performance per dollar for your specific workload. Training-heavy shops care about throughput and scaling efficiency. Inference-heavy shops care about latency, cost per token, and power.

Question 2 is switching cost. Hardware is never “just hardware.” It’s compilers, kernels, runtimes, debugging tools, monitoring, and hiring. That’s why software ecosystems like CUDA have so much power.

This Nvidia-Groq deal pushes on both questions at the same time.

On performance per dollar, Nvidia gets access to a chip architecture that’s been positioned as purpose-built for inference, and the deal discussion is already framed around inference and real-time workloads. On switching cost, Nvidia can potentially make “trying the alternative” feel less like a rewrite, and more like a feature inside the Nvidia world, which is exactly how lock-in gets strengthened without looking like lock-in.

So even if you think Groq’s architecture has limits, the strategic point stays the same: when the most credible inference alternative gets absorbed into Nvidia’s menu, the “build vs buy vs switch” math tilts back toward Nvidia.

This is why the deal looks pricey. Nvidia is not buying a chip that tops every benchmark; it is aiming to keep inference buyers within its orbit as memory constraints rise and inference demand grows.

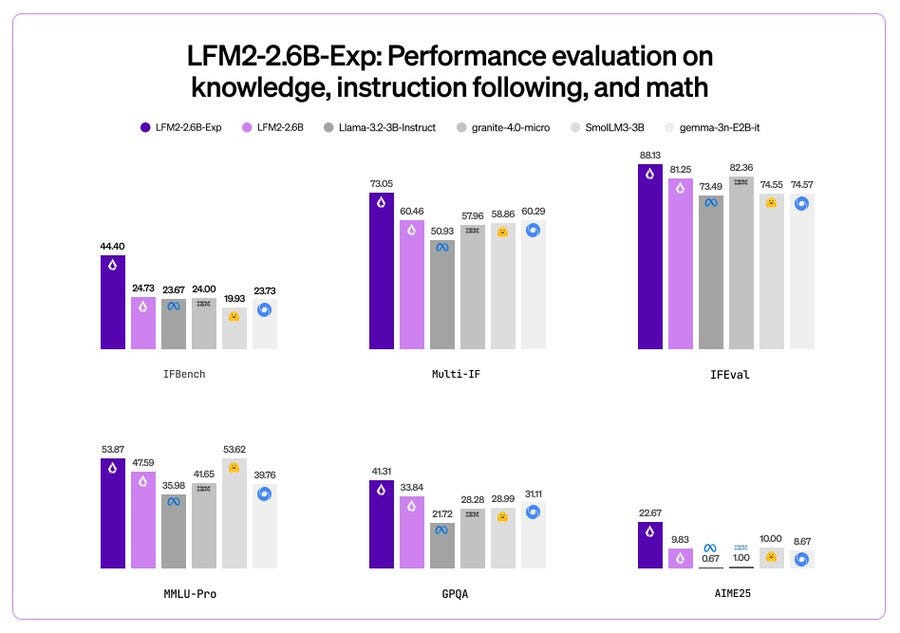

🏆 Liquid AI’s LFM2-2.6B-Exp got 42% in GPQA - incredible for a 2.6B param model.

GPQA is a tough science question set that punishes shallow pattern matching. LFM2-2.6B-Exp starts from LFM2-2.6B and then uses reinforcement learning (RL), meaning it generates answers, scores them with task rewards, and updates the model to produce higher-scoring outputs more often.
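The generate, score, update loop can be shown with a toy policy-gradient example in pure Python. This is a generic REINFORCE-style sketch on a four-answer multiple-choice problem, not Liquid AI's training recipe. The reward, learning rate, and step count are arbitrary assumptions.

```python
import math
import random
from typing import List


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train(correct: int, num_answers: int = 4, steps: int = 500,
          lr: float = 0.5, seed: int = 0) -> List[float]:
    rng = random.Random(seed)
    logits = [0.0] * num_answers  # the "model": one logit per candidate answer
    for _ in range(steps):
        probs = softmax(logits)
        answer = rng.choices(range(num_answers), weights=probs)[0]  # generate
        reward = 1.0 if answer == correct else 0.0                  # score
        # Update: the gradient of log p(answer) w.r.t. logits is onehot - probs.
        for i in range(num_answers):
            grad = (1.0 if i == answer else 0.0) - probs[i]
            logits[i] += lr * reward * grad
    return softmax(logits)


final_probs = train(correct=2)
print([round(p, 3) for p in final_probs])
```

The policy starts out uniform and ends up putting most of its probability on the rewarded answer, which is the "produce higher-scoring outputs more often" part in miniature.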

The underlying LFM2-2.6B setup is a 30-layer hybrid with 22 convolution layers for local token mixing and 8 attention layers for long-range token interactions, with a 32,768-token context window and bfloat16 weights. This looks like solid evidence that reward design and RL can move the needle a lot at small scale.
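A quick check on what those specs imply, using only the numbers above plus the fact that a bfloat16 value takes 2 bytes. The dictionary keys are my own labels, and 2.6B is treated as an exact parameter count for simplicity.

```python
BYTES_PER_BF16 = 2  # bfloat16 is a 16-bit format

config = {
    "conv_layers": 22,
    "attention_layers": 8,
    "context_tokens": 32_768,
    "params": 2.6e9,  # assumption: headline size used as the exact count
}

total_layers = config["conv_layers"] + config["attention_layers"]
weight_gb = config["params"] * BYTES_PER_BF16 / 1e9
print(f"{total_layers} layers, about {weight_gb:.1f} GB of weights in bfloat16")
```

Roughly 5 GB of weights is small enough for a single consumer GPU or a laptop, which is part of why a strong GPQA score at this size is interesting.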

That’s a wrap for today, see you all tomorrow.
