🎙️ xAI launched the Grok Voice Agent API and now leads Artificial Analysis’s Big Bench Audio benchmark

Read time: 11 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (18-Dec-2025):

🎙️ xAI launched the Grok Voice Agent API and now leads Artificial Analysis’s Big Bench Audio benchmark

🏆 Google DeepMind CEO Demis Hassabis believes there is virtually no limit to what AI can eventually achieve.

🇨🇳 A shocking Reuters report says China is close to building an alternative to ASML’s EUV machine, which is THE most crucial machine for the whole AI industry

🧠 Yann LeCun’s new interview - explains why LLMs are so limited in terms of real-world intelligence.

🎙️ xAI launched the Grok Voice Agent API

Grok Voice Agent is xAI’s first public speech-to-speech API.

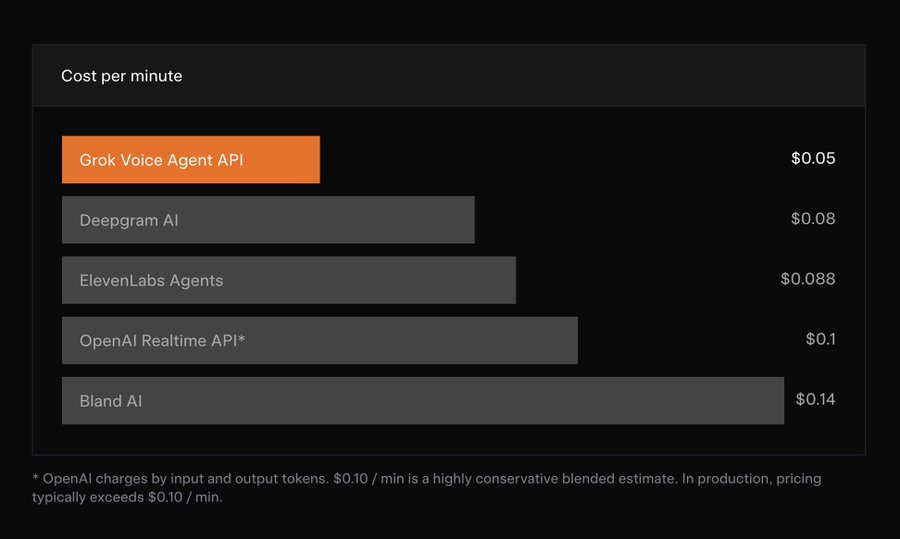

Leads the industry in cost-efficiency. Developers are billed at a simple flat rate of $0.05 per minute.

In blind head-to-head human evaluations against OpenAI, Grok is consistently rated as the preferred model across axes such as pronunciation, accent, and prosody.

It ships with multiple expressive voices, including Ani, Eve, and Leo.

With it, you can build real-time voice agents that talk in many languages, call tools, and do live search. It claims #1 on Big Bench Audio with <1s time-to-first-audio, plus a flat price of $0.05/min of connection time.

xAI built the whole voice stack in-house, including voice activity detection that finds when a person is speaking, an audio tokenizer that turns sound into compact tokens, and the core audio models that decide what to say and how to say it. The speed claim is about the full pipeline from user speech to the first audible reply, so lower time-to-first-audio usually means fewer buffering pauses and more natural turn-taking in a conversation.
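To make the latency metric concrete, here is a minimal sketch of how time-to-first-audio could be measured on the client side. It is not xAI's measurement method: the function name, the `FakeClock` helper, and the simulated timings are all hypothetical, and a real client would read chunks from a live audio stream instead.

```python
import time
from typing import Callable, Iterable, Iterator, Optional


def time_to_first_audio(
    chunks: Iterable[bytes],
    speech_end: float,
    clock: Callable[[], float] = time.monotonic,
) -> Optional[float]:
    """Seconds from the end of user speech to the first non-empty audio chunk."""
    for chunk in chunks:
        if chunk:  # ignore empty frames that carry no audio
            return clock() - speech_end
    return None  # the stream ended without producing audio


class FakeClock:
    """Hypothetical controllable clock so the demo is deterministic."""

    def __init__(self) -> None:
        self.now = 0.0

    def __call__(self) -> float:
        return self.now

    def advance(self, seconds: float) -> None:
        self.now += seconds


def simulated_reply(clock: FakeClock) -> Iterator[bytes]:
    """Simulated agent reply: one empty frame, then audio (made-up timings)."""
    clock.advance(0.25)
    yield b""
    clock.advance(0.53)
    yield b"\x00\x01"
    clock.advance(0.10)
    yield b"\x02\x03"


clock = FakeClock()
ttfa = time_to_first_audio(simulated_reply(clock), speech_end=clock(), clock=clock)
print(f"time to first audio: {ttfa:.2f}s")
```

Only the first audible chunk matters for this metric, which is why the function returns as soon as it sees one and ignores the rest of the reply.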

On cost, the comparison shown is $0.05/min (Grok) versus $0.08/min (Deepgram), $0.088/min (ElevenLabs), $0.10/min (OpenAI Realtime API estimate from token billing), and $0.14/min (Bland). The product angle is “voice agent plus tools”, meaning the model can choose to call things like web search, X search, or a developer function, then use the returned data to keep the conversation grounded in up-to-date info.
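The per-minute rates above are easier to compare once scaled to a workload. The sketch below uses only the rates quoted in the comparison; the 10,000-minute monthly volume and the function names are hypothetical, and real bills may differ (the OpenAI figure in particular is an estimate derived from token billing).

```python
from typing import Dict

# Per-minute rates as quoted in the comparison above (USD).
RATES_PER_MIN: Dict[str, float] = {
    "Grok Voice Agent": 0.05,
    "Deepgram": 0.08,
    "ElevenLabs": 0.088,
    "OpenAI Realtime API (estimate)": 0.10,
    "Bland": 0.14,
}


def cost_for_minutes(rate_per_min: float, minutes: float) -> float:
    """Total cost in USD for a given number of connection minutes."""
    return rate_per_min * minutes


def relative_to(baseline: str, rates: Dict[str, float] = RATES_PER_MIN) -> Dict[str, float]:
    """Each provider's rate as a multiple of the baseline provider's rate."""
    base = rates[baseline]
    return {name: rate / base for name, rate in rates.items()}


if __name__ == "__main__":
    monthly_minutes = 10_000  # hypothetical workload
    multiples = relative_to("Grok Voice Agent")
    for name, rate in RATES_PER_MIN.items():
        total = cost_for_minutes(rate, monthly_minutes)
        print(f"{name:32s} ${total:>8,.2f}/month  ({multiples[name]:.2f}x Grok)")
```

At these quoted rates, the most expensive option listed costs 2.8 times as much per minute as Grok.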

Tesla is presented as a design partner, with Grok using car-specific tools to read vehicle status, navigate, and plan routes using search plus tool calls. The API is said to match the OpenAI Realtime API specification and also ships via an xAI LiveKit plugin for real-time audio apps. The most convincing part is the end-to-end focus on latency plus tool calling, because those 2 things usually decide whether a voice agent feels usable or annoying.

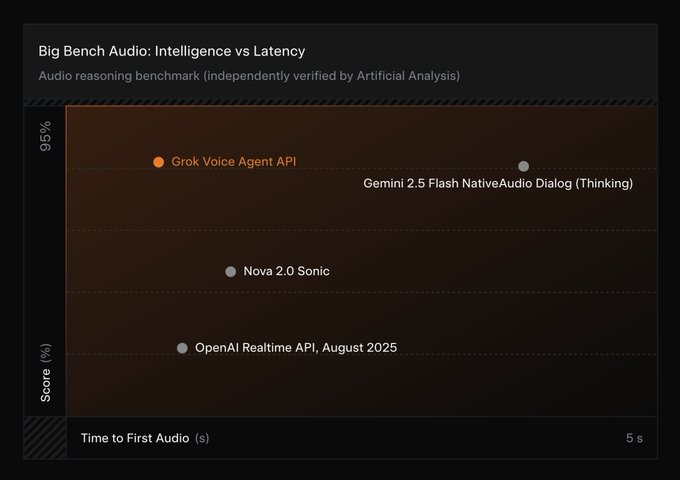

Nearly 5 times faster than the closest competitor.

With an average time-to-first-audio of less than 1 second, Grok is nearly 5 times faster than the closest competitor.

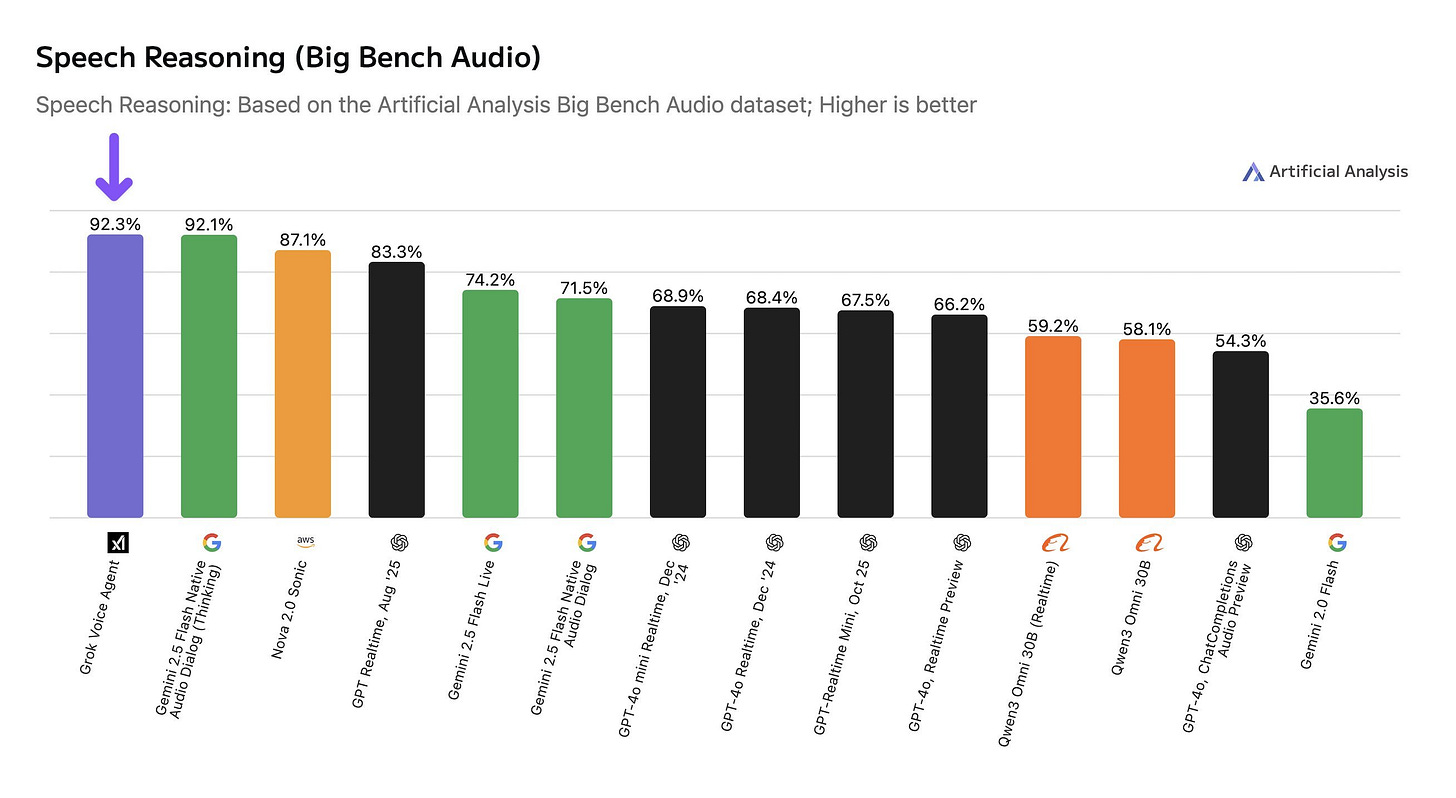

Grok Voice Agent now leads Artificial Analysis’s Big Bench Audio benchmark with 92.3% speech reasoning accuracy.

That puts it ahead of Gemini 2.5 Flash Native Audio and GPT Realtime.

Big Bench Audio is built from 1,000 audio questions adapted from Big Bench Hard, so the 92.3% score is being claimed on a harder style of reasoning set, not casual voice chat.

Grok is the 3rd fastest on this specific latency metric, with an average time to first audio of 0.78 seconds on the Big Bench Audio dataset.

Grok’s audio input and audio output are each priced at $3.00 per hour, a clear, symmetric rate that works out to $0.05 per minute.

Tool calling is supported, so the voice agent can trigger actions like web search or retrieval augmented generation search, or custom tools defined with JSON schema.
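As a rough illustration of what a custom tool defined with JSON schema can look like, here is a minimal sketch of a tool definition plus the application-side code that runs it when the agent asks. The `get_order_status` tool, its handler, and `dispatch_tool_call` are hypothetical. The outer shape of the definition follows the common OpenAI-style function-tool layout, and the exact fields the Grok API accepts are an assumption that should be checked against xAI's documentation.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical tool definition; "parameters" is a plain JSON Schema object.
ORDER_STATUS_TOOL: Dict[str, Any] = {
    "type": "function",
    "name": "get_order_status",
    "description": "Look up the shipping status of an order.",
    "parameters": {
        "type": "object",
        "properties": {"order_id": {"type": "string"}},
        "required": ["order_id"],
    },
}


def get_order_status(order_id: str) -> Dict[str, Any]:
    """Stand-in handler; a real one would query an order database."""
    return {"order_id": order_id, "status": "shipped"}


TOOLS: Dict[str, Dict[str, Any]] = {ORDER_STATUS_TOOL["name"]: ORDER_STATUS_TOOL}
HANDLERS: Dict[str, Callable[..., Dict[str, Any]]] = {
    "get_order_status": get_order_status,
}


def dispatch_tool_call(name: str, arguments_json: str) -> str:
    """Run the tool the model asked for and return a JSON string result."""
    if name not in HANDLERS:
        return json.dumps({"error": f"unknown tool: {name}"})
    args = json.loads(arguments_json)
    required = TOOLS[name]["parameters"]["required"]
    missing = [key for key in required if key not in args]
    if missing:
        return json.dumps({"error": f"missing arguments: {missing}"})
    return json.dumps(HANDLERS[name](**args))


print(dispatch_tool_call("get_order_status", '{"order_id": "A1"}'))
```

Returning errors as JSON instead of raising lets the agent hear about a failed call and recover in the conversation, for example by asking the caller to repeat the order number.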

Telephony support is built in through Session Initiation Protocol hookups, including compatibility examples like Twilio and Vonage.

Multilingual support is advertised as over 100 languages, which targets global voice workflows rather than English only.

The product offers 5 voice options, which is useful when teams want different assistant personas without rebuilding the system.

The release is positioned as ready for voice assistants, phone agents, and interactive voice apps, so it is being framed as an API meant for production deployments.

🏆 Google DeepMind CEO Demis Hassabis believes there is virtually no limit to what AI can eventually achieve.

“humans as biological information processing systems”.

He is working on the premise that the entire universe is “computable,” meaning that anything that exists can technically be modeled by a machine. He says that a powerful enough computer—conceptually known as a Turing machine—should be able to simulate every aspect of reality.

Until physics proves that something is impossible to calculate, he is operating on the belief that AI can solve any problem. This perspective even applies to the things that feel uniquely human. When asked about physical sensations, like feeling the warmth of a light or hearing background noise, Hassabis argued that these are just forms of information being processed by the brain.

He views humans as biological information processing systems. By treating biology and reality essentially as data, he believes we will not only replicate human capabilities but also solve complex challenges, such as curing all diseases, by decoding the information of life itself.

🇨🇳 A shocking Reuters report says China is close to building an alternative to ASML’s EUV machine, which is THE most crucial machine for the whole AI industry

Until now, ASML has been the only company to truly crack EUV technology. Its machines cost about $250 million each and are critical for making the most advanced chips designed by Nvidia and AMD, and manufactured by TSMC, Intel, and Samsung.

The result marks the payoff of a 6-year government program focused on semiconductor independence. People compared it to China’s version of the Manhattan Project, the U.S. wartime program that built the atomic bomb.

China’s prototype reportedly generates EUV light and is being tested, but it has not produced working chips yet, with 2028 and 2030 mentioned as targets. EUV is a chip “printing” step that uses extremely short wavelength light to draw tiny circuit patterns onto silicon, and smaller patterns usually allow more compute per area.
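The link between wavelength and pattern size is usually summarized by the Rayleigh criterion, CD = k1 × λ / NA, where CD is the smallest printable feature, λ is the wavelength, and NA is the numerical aperture of the optics. The sketch below plugs in commonly cited values: 193nm light with NA 1.35 for immersion DUV, and 13.5nm light with NA 0.33 for current EUV scanners. The process factor k1 = 0.30 is an illustrative assumption, not a figure from the report, so treat the outputs as rough orders of magnitude.

```python
def min_feature_nm(wavelength_nm: float, numerical_aperture: float, k1: float = 0.30) -> float:
    """Rayleigh criterion: smallest printable feature for a single exposure, in nm."""
    return k1 * wavelength_nm / numerical_aperture


# Commonly cited optical parameters; k1 is an assumed, illustrative process factor.
duv_cd = min_feature_nm(wavelength_nm=193.0, numerical_aperture=1.35)
euv_cd = min_feature_nm(wavelength_nm=13.5, numerical_aperture=0.33)

print(f"Immersion DUV single exposure: ~{duv_cd:.1f} nm")
print(f"EUV single exposure:           ~{euv_cd:.1f} nm")
print(f"EUV prints features ~{duv_cd / euv_cd:.1f}x smaller per exposure")
```

This gap is why DUV needs multiple patterning passes to approach what EUV can print in one exposure.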

Getting usable EUV is hard because the machine must create EUV light from a laser hitting molten tin, then keep the optics clean and perfectly stable so the pattern does not blur. Sources describe former ASML engineers helping reverse engineer parts of the system, and Huawei coordinating a wider effort across labs and suppliers.

“The aim is for China to eventually be able to make advanced chips on machines that are entirely China-made,” one of the people said. “China wants the United States 100% kicked out of its supply chains.”

But what has stopped China, or any other country (even the USA), from building its own photolithography machines so it can make its own chips?

Simply because it is ultra HARD. China has some of the brightest minds in the world working on it; it is just really hard. The quoted thread claims that some Chinese engineers took apart an ASML DUV (Deep Ultraviolet) lithography tool to study it, could not reassemble it, and then asked ASML to fix it.

🔧 The 193nm immersion is unforgiving

An immersion DUV scanner runs 193nm light through a thin water layer and scans wafers on dual‑stage mechanics to keep overlay tight across 300mm wafers. That whole stack depends on factory procedures, internal references, and closed‑loop tuning of stages, optics, and sensors. ASML’s public product notes on immersion and TWINSCAN stages show how much precision is baked into the platform, including metrology frames that tie projection optics and sensors to a single reference point.

Pulling a tool apart risks particle hits on ZEISS projection optics, interferometer offsets, and loss of those references, and putting it back requires vendor procedures and software keys that sit behind service licensing. The closest things to an ASML rival are the Japanese companies Canon and Nikon, but they have largely conceded the cutting-edge, high-end part of the field to ASML.

🏭 China’s plan B, stretch DUV and stand up domestic tools

SMIC, the Chinese foundry, is trialing a domestic immersion DUV tool from Yuliangsheng that targets 28nm and aims at 7nm via multi-patterning. Reporting pegs broader fab use around 2027, with performance closer to older ASML gear, so tuning and yield lift will take time.

China could catch up eventually. But EUV technology quite literally took billions of dollars of direct financial assistance from the US and Dutch governments to complete. Take Japan as an example. Japanese companies were competing with the Dutch to make EUV in the 2000s. That effort ultimately failed because they couldn’t commit sufficient, consistent funding without the US. And Japan was the world leader in photolithography at the time.

EUV (Extreme Ultraviolet), as far as the actual wavelength of light goes, is pretty damn close to the physical limits of photolithography. We’ll get incremental improvements, but we’re not likely to see anything like the shift from DUV to EUV again. But then again, who knows. When EUV was first investigated for photolithography in the 1980s, it didn’t look like it would be possible.

🚫 The export rules that drive this behavior

The Netherlands revoked licenses covering shipments of NXT:2050i and NXT:2100i to China from 1‑Jan‑2024. In Sep‑2024, the Netherlands tightened things again so NXT:1970i and NXT:1980i shipments need Dutch licenses too, with NXT:2000i and newer already under Dutch control. Servicing is also gated, since spare parts and software updates for certain China tools require a Dutch license.

🧠 Yann LeCun’s new interview - explains why LLMs are so limited in terms of real-world intelligence.

Yann LeCun says the biggest LLM is trained on about 30 trillion words, which is roughly 10 to the power 14 bytes of text.

That sounds huge, but a 4 year old who has been awake about 16,000 hours has also taken in about 10 to the power 14 bytes through the eyes alone. So a small child has already seen as much raw data as the largest LLM has read.
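A quick back-of-the-envelope check shows what these figures imply. The sketch below uses only the numbers quoted above (30 trillion words, about 10^14 bytes, 16,000 waking hours) and derives the rest; the variable names are illustrative, and the outputs are implied averages, not measurements.

```python
TEXT_BYTES = 1e14          # quoted size of the largest LLM training text
TRAINING_WORDS = 30e12     # quoted word count (30 trillion)
AWAKE_HOURS = 16_000       # quoted waking hours of a 4 year old

# Average bytes per word implied by the two text figures.
bytes_per_word = TEXT_BYTES / TRAINING_WORDS

# Total waking time in seconds.
awake_seconds = AWAKE_HOURS * 3600

# Visual data rate needed to reach the same 1e14 bytes in that time.
visual_bytes_per_second = TEXT_BYTES / awake_seconds

print(f"Implied bytes per word:    {bytes_per_word:.2f}")
print(f"Waking seconds:            {awake_seconds:,}")
print(f"Implied visual data rate:  {visual_bytes_per_second / 1e6:.2f} MB/s")
```

In other words, the comparison holds if the eyes deliver somewhat under 2 MB of data per second on average, sustained over roughly 57.6 million waking seconds.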

But the child’s data is visual, continuous, noisy, and tied to actions: gravity, objects falling, hands grabbing, people moving, cause and effect. From this, the child builds an internal “world model” and intuitive physics, and can learn new tasks like loading a dishwasher from a handful of demonstrations.

LLMs only see disconnected text and are trained just to predict the next token. So they get very good at symbol patterns, exams, and code, but they lack grounded physical understanding, real common sense, and efficient learning from a few messy real-world experiences.

In the same video, Yann LeCun beautifully explains why the architecture and principles used to train LLMs cannot be extended to teach AI real-world intelligence.

In 1 line: LLMs excel where intelligence equals sequence prediction over symbols. Real-world intelligence requires learned world models, abstraction, causality, and action planning under uncertainty, which current next-token training does not provide.

He says current LLMs learn by predicting the next token. That objective works very well when the task itself can be reduced to manipulating discrete symbols and sequences. Math, physics problem solving on paper, and coding fit this pattern because success largely comes from searching and composing the right sequences of symbols, equations, or program tokens. With enough data and scale, these models get very good at that kind of structured sequence prediction.

Real-world intelligence is different. The physical world is continuous, noisy, uncertain, and high dimensional. To act in it, a system needs internal models that capture objects, dynamics, causality, constraints from the body, and the outcomes of actions over time. Humans and animals build abstract representations from rich sensory streams, then make predictions in that abstract space, not at the raw pixel level. That is why a child can learn intuitive physics, plan multi-step actions, and adapt quickly in new situations with little data.

His claim about saturation follows from this gap. Scaling token prediction keeps improving symbol manipulation tasks like math and code, but it hits limits on embodied reasoning and common sense because text alone does not provide the right learning signals for world models. Predicting the next word cannot efficiently teach contact forces, affordances, occlusion, friction, or how actions change the state of the environment. For that, he argues we need architectures that learn abstractions from sensory data and predict futures in abstract latent spaces, then use those predictions to plan actions toward goals with built-in guardrails.

That’s a wrap for today, see you all tomorrow.