🥉xAI launches Grok 4, “better than PhD level in every subject, no exceptions”

Grok 4 tops tests, MedGemma 27B squeezes into a solo GPU, Phi-4-mini accelerates reasoning, Hunyuan spins game-ready 3D, Simular puts agents on PCs, Anthropic posts transparency framework.

Read time: 8 min


(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (10-July-2025):

🥉xAI launches Grok 4, “better than PhD level in every subject, no exceptions”

🩺 Google Research releases MedGemma 27B, multimodal health-AI models that run on 1 GPU

🛠️ Microsoft released Phi-4-mini-flash-reasoning, with up to 10X higher throughput and a 2 to 3X reduction in latency

🗞️ Byte-Size Briefs:

The Mayo Clinic introduced Vision Transformer, an AI system for detecting surgical-site infections

Tencent’s Hunyuan released Hunyuan 3D-PolyGen, which outputs game-ready 3D assets.

Simular Pro just launched the world’s first production-grade AI agent that automates computer tasks.

Anthropic releases a transparency framework.

🥉 xAI launched two models: Grok 4 and Grok 4 Heavy, the multi-agent, higher-performing version

Elon Musk’s AI company, xAI, released its latest flagship AI model, Grok 4, and unveiled a new $300-per-month AI subscription plan, SuperGrok Heavy.

Pricing: $3 per 1M input tokens, $15 per 1M output tokens, and $0.75 per 1M cached input tokens, with a 256K context window. Prices double for requests beyond 128K tokens of context.
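A small cost estimator for the prices quoted above. The line does not say exactly how the 2x long-context rate is applied, so this sketch assumes it covers the whole request (fresh input, cached input, and output) once the prompt exceeds 128K tokens. `grok4_request_cost` is a hypothetical helper, not part of any xAI SDK.

```python
# Prices quoted in this edition, in USD per 1M tokens.
INPUT_PER_M = 3.00
OUTPUT_PER_M = 15.00
CACHED_INPUT_PER_M = 0.75

LONG_CONTEXT_THRESHOLD = 128_000  # prices double beyond this context size
LONG_CONTEXT_MULTIPLIER = 2.0     # assumption: applied to the whole request


def grok4_request_cost(input_tokens: int, output_tokens: int,
                       cached_input_tokens: int = 0) -> float:
    """Estimate the USD cost of one request (hypothetical helper, not an xAI API)."""
    if not 0 <= cached_input_tokens <= input_tokens:
        raise ValueError("cached_input_tokens must be between 0 and input_tokens")
    fresh_input_tokens = input_tokens - cached_input_tokens
    cost = (fresh_input_tokens * INPUT_PER_M
            + cached_input_tokens * CACHED_INPUT_PER_M
            + output_tokens * OUTPUT_PER_M) / 1_000_000
    # Assumption: once the prompt exceeds 128K tokens, every token is billed at 2x.
    if input_tokens > LONG_CONTEXT_THRESHOLD:
        cost *= LONG_CONTEXT_MULTIPLIER
    return cost


print(f"${grok4_request_cost(10_000, 2_000):.4f}")   # $0.0600
print(f"${grok4_request_cost(200_000, 1_000):.4f}")  # $1.2300 (long-context rate)
```

Output tokens cost 5x input tokens here, so long generations dominate the bill well before the 128K threshold matters.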

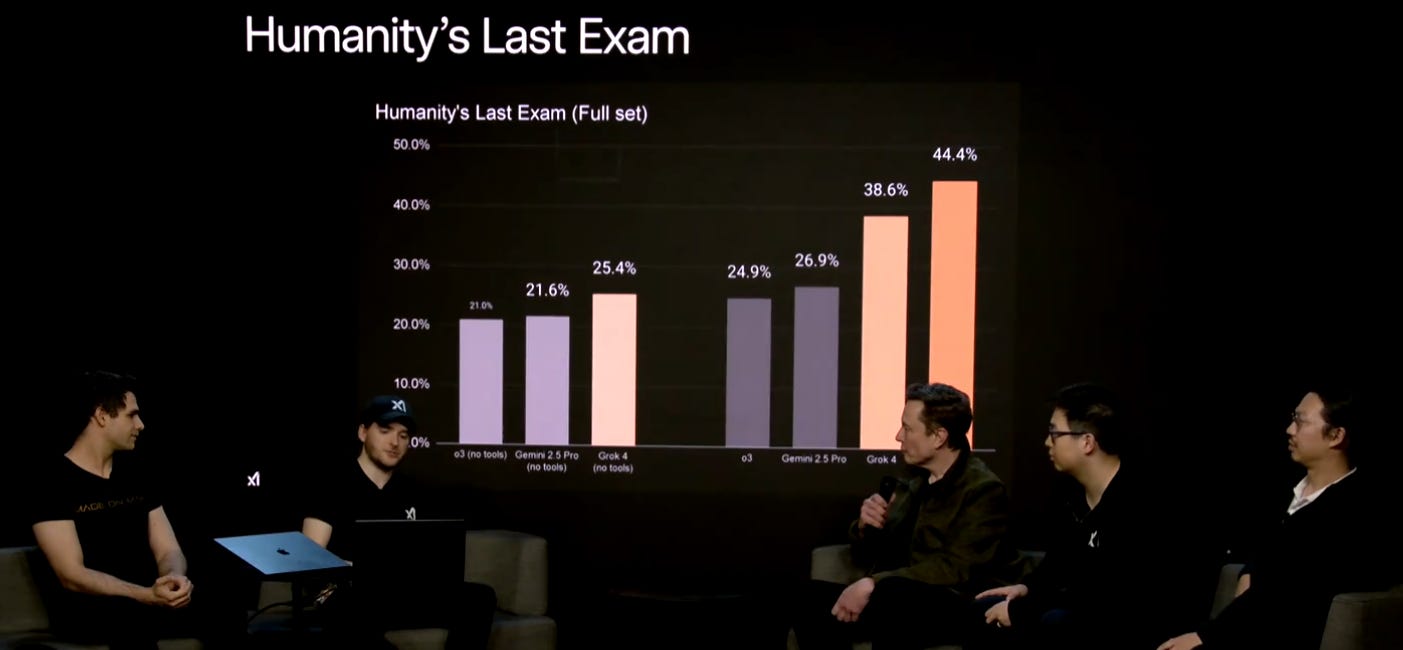

1st rank on Humanity’s Last Exam (general hard problems) 44.4%, 2nd best model is 26.9%

1st rank on GPQA (hard graduate problems) 88.9%, 2nd best model is 86.4%

1st rank on AIME 2025 (Math) 100%, 2nd best model is 98.4%

1st rank on HMMT (Harvard-MIT Mathematics Tournament) 96.7%, 2nd best model is 82.5%

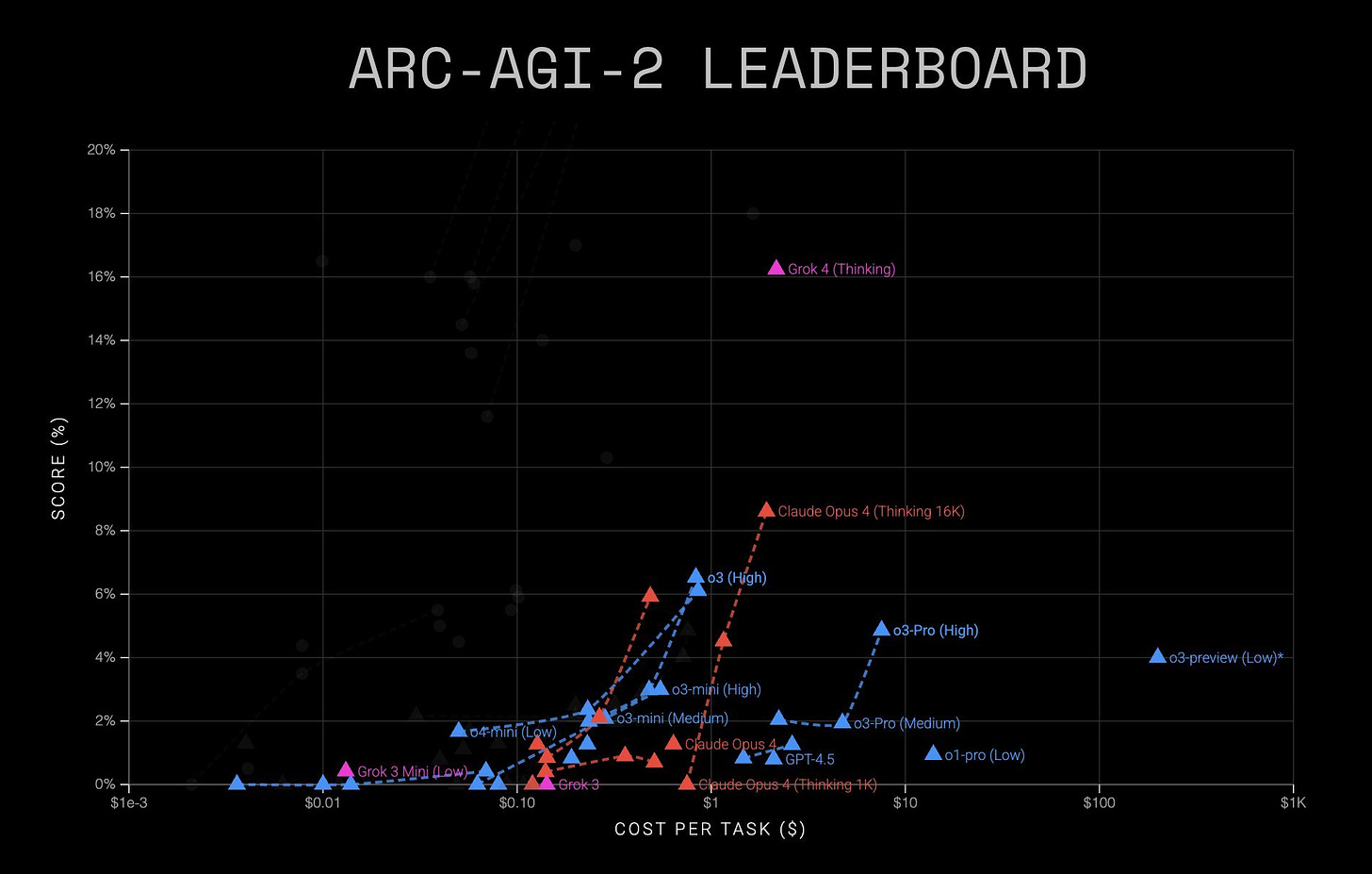

1st rank on ARC-AGI-2 (easy for humans, hard for AI) 15.9%, 2nd best model is 8.6%

1st rank on LiveCodeBench (Jan-May) 79.4%, 2nd best model is 75.8%
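The “2nd best” gaps are easier to compare side by side. This sketch uses only the scores listed in this section (the `SCORES` table and `lead` helper are illustrative names) and prints each benchmark’s absolute lead in percentage points plus the ratio of the two scores. For ARC-AGI-2 that ratio comes out at about 1.85x.

```python
# (Grok 4 score, second-best score) in percent, as listed in this edition.
SCORES = {
    "Humanity's Last Exam": (44.4, 26.9),
    "GPQA": (88.9, 86.4),
    "AIME 2025": (100.0, 98.4),
    "Harvard-MIT Math (HMMT)": (96.7, 82.5),
    "ARC-AGI-2": (15.9, 8.6),
    "LiveCodeBench (Jan-May)": (79.4, 75.8),
}


def lead(best: float, runner_up: float) -> tuple[float, float]:
    """Return (lead in percentage points, ratio of best to runner-up)."""
    return best - runner_up, best / runner_up


for name, (best, runner_up) in SCORES.items():
    points, ratio = lead(best, runner_up)
    print(f"{name:<24} +{points:4.1f} pts  {ratio:.2f}x")
```

Absolute and relative leads tell different stories: AIME is nearly saturated, so a 1.6-point gap is small, while ARC-AGI-2 shows the largest relative jump.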

Grok 4 Heavy, offered through the SuperGrok Heavy plan, hit 44.4% on HLE (Humanity’s Last Exam), the top score on this benchmark. By comparison, Gemini 2.5 Pro with tools scored 26.9% and OpenAI o3 with Deep Research scored only 26%.

HLE is deliberately hard. It holds 2,500 expert-written questions spanning more than 100 subjects, including math, physics, computer science and the humanities, and 14% of them mix text with images. The authors built in anti-gaming safeguards and kept a private question set hidden, so simply memorising answers will not help a model.

Hardware scale and training infrastructure: Grok 4 was trained at Colossus in Memphis, where xAI doubled its cluster from 100K to 200K Nvidia H100 and H200 GPUs in just 92 days.

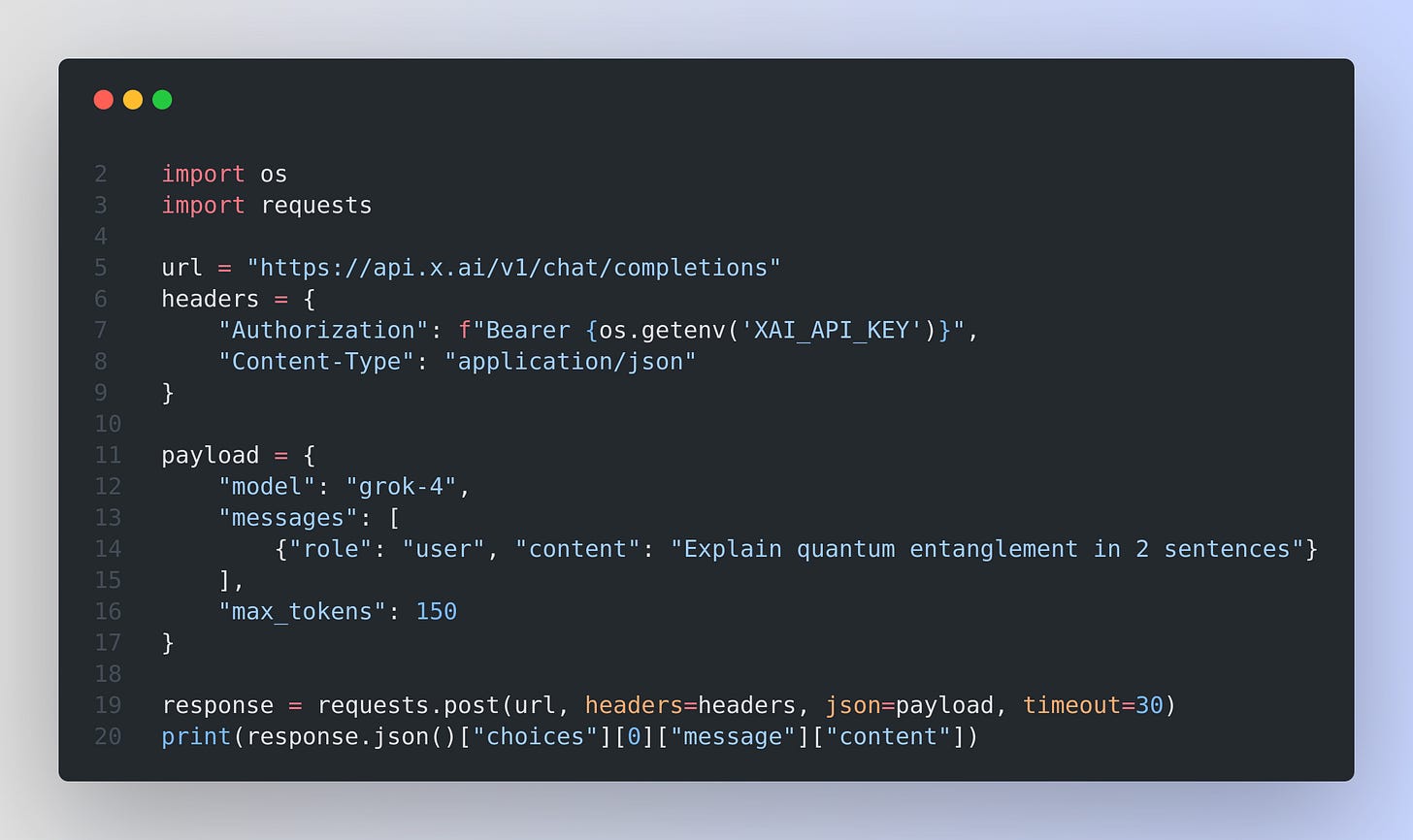

Grok 4 is also available through the xAI API.

Sign in at the X.ai developer dashboard and press “Create key” to obtain your secret string. Store it in an environment variable named XAI_API_KEY, then include it as a Bearer token in the Authorization header of every call.

Python quick-start script
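A minimal quick-start sketch using only the Python standard library. It reads the key from `XAI_API_KEY` and sends it as a Bearer token, as described above. It assumes xAI’s OpenAI-compatible chat-completions endpoint at `https://api.x.ai/v1/chat/completions`, the model id `grok-4`, and an OpenAI-style response shape; all three are assumptions to check against xAI’s API docs, and `build_request` / `ask_grok` are hypothetical helper names.

```python
import json
import os
import urllib.request

# Assumptions: OpenAI-compatible endpoint and model id; verify in xAI's docs.
API_URL = "https://api.x.ai/v1/chat/completions"
MODEL = "grok-4"


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build a chat-completions POST request with the key sent as a Bearer token."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask_grok(prompt: str) -> str:
    """Send one prompt and return the first choice's text (needs XAI_API_KEY)."""
    api_key = os.environ["XAI_API_KEY"]
    with urllib.request.urlopen(build_request(prompt, api_key), timeout=120) as resp:
        body = json.load(resp)
    # Assumes the OpenAI-style response shape: choices[0].message.content
    return body["choices"][0]["message"]["content"]


# Only call the network when a key is actually configured.
if __name__ == "__main__" and os.environ.get("XAI_API_KEY"):
    print(ask_grok("In one sentence, what is ARC-AGI-2?"))
```

Because the endpoint is (assumed to be) OpenAI-compatible, an existing OpenAI client pointed at the xAI base URL should also work; the stdlib version just avoids extra dependencies.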

Grok 4 (Thinking) clocks 15.9% on ARC-AGI-2, grabbing the new SOTA. That score is almost 2x the last commercial best and now tops the Kaggle leaderboard.

What does ARC-AGI-2 try to measure?

The benchmark contains a larger, freshly curated set of grid-based puzzles that cannot be memorized, forcing any model to invent a rule on the fly from a handful of examples, then apply that rule to a held-out test grid. Unlike ARC-AGI-1, the new version adds an explicit cost axis, so a model must prove both adaptability and efficiency instead of relying on brute-force search with huge compute budgets.
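To make the task format concrete, here is a toy Python sketch. It mirrors the public ARC JSON layout (a task holds `train` and `test` lists of `input`/`output` integer grids) and “invents a rule” by searching a tiny, hypothetical library of grid transformations for one that is consistent with every training pair. Real ARC-AGI-2 tasks are far harder than flips and rotations, and this is not how Grok 4 or any leaderboard entry works.

```python
from typing import Callable, Optional

Grid = list[list[int]]

# A tiny, hypothetical rule library; real ARC-AGI-2 tasks need far richer programs.
CANDIDATES: dict[str, Callable[[Grid], Grid]] = {
    "identity": lambda g: [row[:] for row in g],
    "flip_horizontal": lambda g: [row[::-1] for row in g],
    "flip_vertical": lambda g: [row[:] for row in g[::-1]],
    "transpose": lambda g: [list(col) for col in zip(*g)],
    "rotate_90_cw": lambda g: [list(col) for col in zip(*g[::-1])],
}


def infer_rule(train_pairs: list[dict]) -> Optional[str]:
    """Return the first candidate rule that maps every training input to its output."""
    for name, fn in CANDIDATES.items():
        if all(fn(p["input"]) == p["output"] for p in train_pairs):
            return name
    return None


def solve(task: dict) -> list[Optional[Grid]]:
    """Apply the inferred rule to each held-out test input (None if no rule fits)."""
    rule = infer_rule(task["train"])
    if rule is None:
        return [None for _ in task["test"]]
    return [CANDIDATES[rule](t["input"]) for t in task["test"]]


task = {
    "train": [
        {"input": [[1, 0], [0, 0]], "output": [[0, 1], [0, 0]]},
        {"input": [[2, 3], [4, 5]], "output": [[3, 2], [5, 4]]},
    ],
    "test": [{"input": [[7, 8, 9], [0, 0, 1]]}],
}
print(infer_rule(task["train"]), solve(task))  # flip_horizontal [[[9, 8, 7], [1, 0, 0]]]
```

Brute-force search like this scales badly as the rule space grows, which is exactly what ARC-AGI-2’s cost axis is meant to penalize.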

Grok 4’s jump to 15.9% is the first clear break out of the single-digit “wall,” nearly doubling the previous commercial high and overtaking the top Kaggle competition entry.

This also suggests that xAI improved its test-time reasoning rather than simply scaling parameters, since the cost per task shown on the public leaderboard appears to stay in the low-dollar range.

Grok 4 was also put in charge of a simulated vending-machine business (Vending-Bench) and did exceedingly well against other models, growing its net worth far beyond theirs.

🩺 Google Research releases MedGemma 27B, multimodal health-AI models that run on 1 GPU

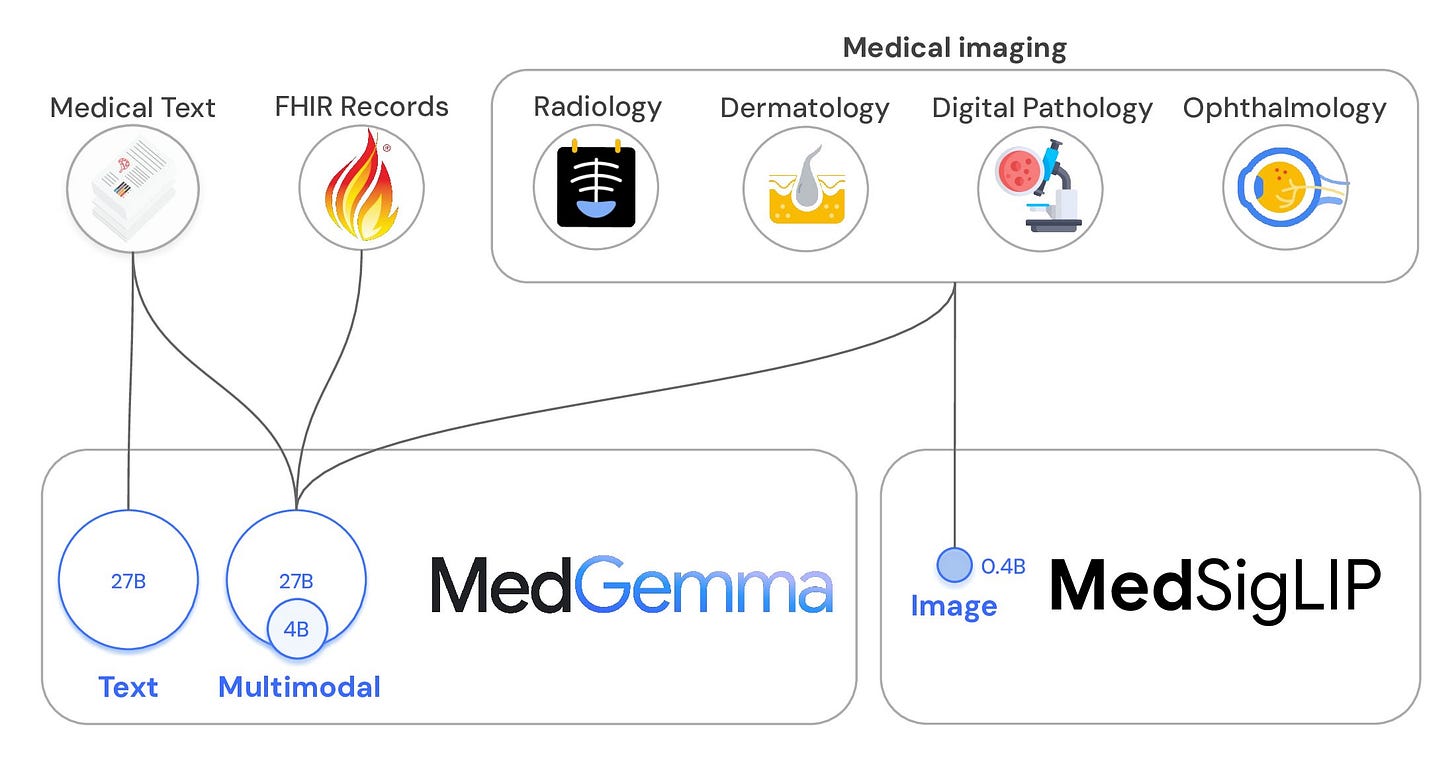

MedGemma 27B multimodal extends the earlier 4B multimodal and 27B text-only models by adding vision capabilities to a 27B-parameter language core.

Training added 2 new datasets, EHRQA and Chest ImaGenome, so the model can read longitudinal electronic health records and localize anatomy in chest X-rays. The report states that this larger multimodal variant inherits every skill of the 4B model while markedly improving language fluency, EHR reasoning, and visual grounding.

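The 4B variant scores 64.4% on MedQA, and 81% of its generated chest X-ray reports were judged by a radiologist to be accurate enough to lead to similar patient management. The 27B text model scores 87.7% on MedQA at about 10% of DeepSeek R1’s inference cost.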

MedGemma fuses a Gemma 3 language core with the MedSigLIP vision encoder, letting one network reason across scans and notes. MedSigLIP unifies radiology, dermatology, and retina images in one shared embedding space.

Because MedSigLIP is released separately, developers can plug it into classification, retrieval, or search pipelines that need structured outputs, while reserving MedGemma for free-text generation such as report writing or visual question answering.
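Because a SigLIP-style dual encoder places images and text prompts in the same embedding space, a zero-shot classification or retrieval step reduces to comparing vectors. The sketch below picks the text label whose embedding has the highest cosine similarity to an image embedding. The 4-dimensional vectors are made up and stand in for real MedSigLIP outputs (which are much larger); loading the actual model is out of scope, and the helper names are hypothetical.

```python
import math

Vector = list[float]


def normalize(v: Vector) -> Vector:
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))


def zero_shot_label(image_emb: Vector, label_embs: dict[str, Vector]) -> tuple[str, float]:
    """Return the text label whose embedding is closest to the image embedding."""
    scores = {label: cosine(image_emb, emb) for label, emb in label_embs.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]


# Toy vectors standing in for real text and image embeddings (illustrative numbers).
labels = {
    "chest X-ray with pleural effusion": [0.9, 0.1, 0.0, 0.1],
    "normal chest X-ray": [0.1, 0.9, 0.1, 0.0],
    "dermatology photo of a benign nevus": [0.0, 0.1, 0.9, 0.2],
}
image = [0.8, 0.2, 0.05, 0.1]
print(zero_shot_label(image, labels))
```

For retrieval, the same `cosine` score ranks a database of stored image embeddings against one query; structured outputs like these are where a standalone encoder fits better than a generative model.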

Both models load on a single GPU, and the 4B versions even run on mobile-class hardware, which lowers cost and eases on-premise deployment where data privacy is critical.

Simple fine-tuning lifts the 4B model’s chest X-ray report-generation RadGraph F1 to 30.3, showing there is headroom for domain-specific tuning.

Because the open weights run locally and can be pinned to a fixed version, hospitals gain privacy, reproducibility, and full control compared with remote APIs.

🛠️ Microsoft released Phi-4-mini-flash-reasoning, with up to 10X higher throughput and a 2 to 3X reduction in latency

Microsoft released a new addition to the Phi model family: Phi-4-mini-flash-reasoning.

It is built on a new hybrid architecture, available on Hugging Face, and licensed under the MIT license.

It delivers up to 10X higher throughput and a 2 to 3X reduction in average latency, giving significantly faster inference without sacrificing reasoning performance.

Instead of running full attention in every layer, Microsoft swaps most of that heavy work for a lean SambaY layout with tiny gating blocks, so the same 3.8B parameters reason faster and start emitting tokens sooner.

🧩 The quick idea: Phi‑4‑mini‑flash‑reasoning keeps size small at 3.8B parameters but rebuilds the flow of information. A new decoder‑hybrid‑decoder stack called SambaY lets light recurrent pieces handle context, a single full‑attention layer handles global glue, and cheap little Gated Memory Units (GMUs) recycle that work all the way down the stack.

🔧 The Old Pain: Full attention touches every past token, so latency grows with prompt length and the KV cache balloons, which hurts phones and edge GPUs where memory traffic kills battery and patience.
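A back-of-the-envelope sketch of why the cache balloons: every full-attention layer stores one key and one value vector per KV head for every past token, so memory grows linearly with length, while a sliding-window layer (this section cites a 256-token window) caps what it keeps. The model shape below (32 layers, 8 KV heads, head size 128, 2-byte fp16/bf16 elements) is hypothetical, not Phi-4-mini’s published configuration, and `kv_cache_bytes` is an illustrative helper.

```python
def kv_cache_bytes(tokens_cached: int, n_layers: int, n_kv_heads: int,
                   head_dim: int, bytes_per_elem: int = 2) -> int:
    """Bytes for cached keys and values (the factor 2) across n_layers attention layers."""
    return 2 * n_layers * n_kv_heads * head_dim * tokens_cached * bytes_per_elem


# Hypothetical model shape, for illustration only.
N_LAYERS, N_KV_HEADS, HEAD_DIM = 32, 8, 128
WINDOW = 256  # sliding-window size quoted for SambaY

for seq_len in (2_000, 8_000, 32_000):
    full = kv_cache_bytes(seq_len, N_LAYERS, N_KV_HEADS, HEAD_DIM)
    windowed = kv_cache_bytes(min(seq_len, WINDOW), N_LAYERS, N_KV_HEADS, HEAD_DIM)
    print(f"{seq_len:>6} tokens: full attention {full / 2**20:7.1f} MiB, "
          f"256-token window {windowed / 2**20:5.1f} MiB")
```

With these made-up numbers, 32K tokens of full-attention cache come to 4,000 MiB against a flat 32 MiB for a 256-token window, and every decoding step has to read that cache back from memory.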

🏗️ How SambaY Is Wired: The first half of the decoder mixes Mamba state‑space layers with Sliding‑Window Attention, so cost stays linear. A single full‑attention layer builds keys once, stores them, and the second half grabs those keys through cross‑attention, but every other cross‑attention call is ripped out and replaced by a GMU that just gates the hidden state, so half the heavy look‑ups vanish.
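The gating idea can be sketched in a few lines of plain Python. This is a minimal sketch, not Microsoft’s code: it assumes a GMU takes the current hidden state `x` and a memory vector reused from an earlier layer, computes an element-wise gate `SiLU(W_gate x)`, multiplies it into the memory, and projects the result with `W_out`. The activation, shapes, and names are assumptions for illustration. What it does show is that the per-token cost is two small matrix-vector products, independent of how many tokens came before, unlike a cross-attention look-up over the whole cached KV.

```python
import math

Matrix = list[list[float]]
Vector = list[float]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Multiply a (rows x cols) matrix by a length-cols vector."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def silu(z: float) -> float:
    """SiLU / swish activation: z * sigmoid(z)."""
    return z / (1.0 + math.exp(-z))


def gated_memory_unit(x: Vector, memory: Vector, w_gate: Matrix, w_out: Matrix) -> Vector:
    """Gate a reused memory vector with the current hidden state, then project.

    Assumed form: y = W_out (memory * SiLU(W_gate x)); no attention scores are computed.
    """
    gate = [silu(v) for v in matvec(w_gate, x)]
    gated = [m * g for m, g in zip(memory, gate)]
    return matvec(w_out, gated)


identity = [[1.0, 0.0], [0.0, 1.0]]
print(gated_memory_unit([1.0, -1.0], [0.5, 2.0], identity, identity))
```

Replacing every other cross-attention layer with a unit like this removes half of the look-ups into the shared KV cache while still letting later layers reuse earlier computation.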

⚡ Speed In Numbers: On a single A100‑80GB GPU, vLLM shows up to 10× higher throughput than the earlier Phi‑4‑mini‑reasoning when asked to handle a 2K‑token prompt followed by 32K‑token generation, and average latency drops by 2‑3×.

📚 It Still Remembers: Even with a small 256‑token sliding window, SambaY beats pure Transformers on tough retrieval sets like Phonebook 32K and RULER, because GMUs keep fresh local info while that single full‑attention slice keeps global reach.
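A sliding window means each query token attends only to the most recent W keys, itself included. The sketch below builds such a causal mask in plain Python (`sliding_window_mask` is a hypothetical helper); W=3 keeps the printout readable, whereas the text cites W=256. Anything older than the window is invisible to these layers, which is why the single full-attention layer is still needed for global reach.

```python
def sliding_window_mask(seq_len: int, window: int) -> list[list[bool]]:
    """mask[i][j] is True when query i may attend to key j (causal, last `window` tokens)."""
    return [[0 <= i - j < window for j in range(seq_len)] for i in range(seq_len)]


def print_mask(mask: list[list[bool]]) -> None:
    for row in mask:
        print("".join("x" if allowed else "." for allowed in row))


print_mask(sliding_window_mask(6, 3))
# x.....
# xx....
# xxx...
# .xxx..
# ..xxx.
# ...xxx
```

Each row has at most `window` allowed keys, so per-token attention cost and cache size stay constant no matter how long the sequence grows.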

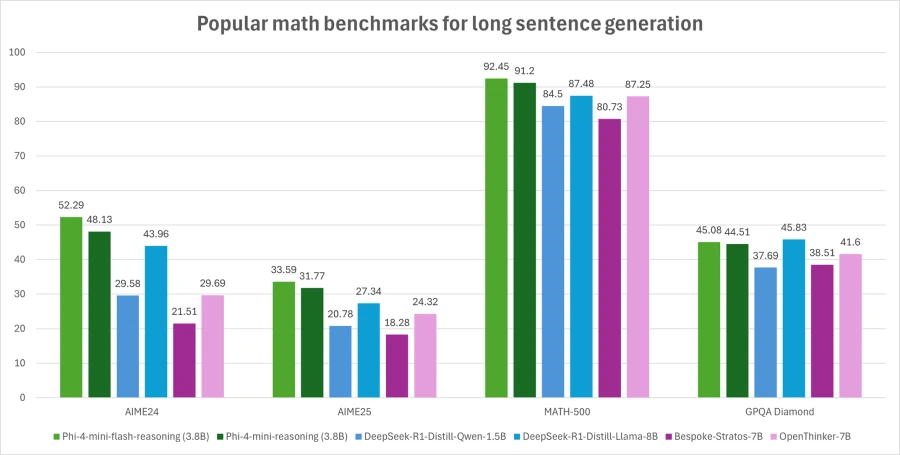

🧮 Reasoning Scores: After a simple Supervised Fine Tuning plus Direct Preference Optimization pass, Phi‑4‑mini‑flash‑reasoning edges its predecessor on Math500, AIME24/25 and GPQA Diamond while keeping the model size identical.
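The edition does not detail Microsoft’s recipe, but the DPO step has a standard objective (Rafailov et al., 2023): push the policy to raise the log-probability of a preferred answer, relative to a frozen reference model, more than it does for a rejected answer. Below is a minimal per-pair sketch; the function name and the default `beta=0.1` are illustrative choices, and real training averages this loss over batches of log-probabilities produced by the model.

```python
import math


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Direct Preference Optimization loss for one (chosen, rejected) pair.

    Inputs are summed log-probabilities of each full response under the policy
    being trained and under a frozen reference model (typically the SFT checkpoint).
    """
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    z = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(z)) = softplus(-z), computed in a numerically stable way.
    return max(-z, 0.0) + math.log1p(math.exp(-abs(z)))


# Policy identical to the reference: loss is log(2), about 0.693.
print(dpo_loss(-10.0, -12.0, -10.0, -12.0))
# Policy favors the chosen answer more than the reference does: loss drops.
print(dpo_loss(-8.0, -14.0, -10.0, -12.0))
```

No reward model is trained: the reference-relative log-probability margins act as an implicit reward, which keeps this “simple” pass cheap to run after SFT.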

📦 Where It Fits: Edge tutoring apps, offline math helpers and any on‑device agent that must answer fast yet stay under tight RAM now get a drop‑in model that handles 64K tokens and long chain‑of‑thought without a server farm.

🗞️ Byte-Size Briefs

💊 The Mayo Clinic introduced Vision Transformer, an AI system for detecting surgical-site infections quickly and accurately via photos during outpatient monitoring. Your wound photo can now reach an AI nurse before problems start. Vision Transformer quietly watches stitches, pinging doctors only when infection risk rises.

The model was trained on 20K images from 6K patients, so it covers diverse cases and promises reliable early alerts. Patients upload a phone photo of their incision through the Mayo patient portal.

Stage 1: the Vision Transformer first confirms that an incision is present, learning texture, edge, and color cues typical of sutures. Stage 2: it examines the same crop for redness, discharge, swelling, and other signs of infection, then outputs an infection-risk score. Accuracy is reported as the area under the ROC curve (AUC), where 1 means perfect discrimination and 0.5 means random guessing.
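AUC describes the classifier over a labelled test set, not a single photo. Its pairwise definition is easy to compute directly: the fraction of (infected, non-infected) pairs in which the infected case gets the higher risk score, with ties counted as half. A minimal sketch with made-up scores and labels; `roc_auc` is a hypothetical helper, and production code would typically use a library implementation such as scikit-learn’s `roc_auc_score`.

```python
def roc_auc(scores: list[float], labels: list[int]) -> float:
    """AUC as the probability a random positive outscores a random negative (ties = 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Made-up risk scores for four photos; 1 = infection confirmed, 0 = no infection.
scores = [0.91, 0.80, 0.35, 0.20]
labels = [1, 0, 1, 0]
print(roc_auc(scores, labels))  # 3 of 4 pairs ranked correctly -> 0.75
```

Because AUC only depends on ranking, a deployed system still needs a separate threshold on the risk score to decide when to ping a doctor.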

Tencent’s Hunyuan released Hunyuan 3D-PolyGen, an AI model that outputs game-ready 3D meshes, for game development and artist modeling. It automates intelligent retopology, delivers smooth tri or quad topology, and slots straight into professional studio pipelines.

Simular Pro just launched the world’s first production-grade AI agent that automates computer tasks by simulating human-like interactions (typing, clicking, scrolling). It’s the Cursor of workflow automation. Give it a sentence, get back a finished task.

Anthropic releases a transparency framework that would require frontier AI labs to share risk-testing plans, publish system cards, and protect whistleblowers. Under the framework, each big lab writes a Secure Development Framework, basically a checklist describing how it tests for bioweapon risks, rogue autonomy, or data leaks before a model goes live.

That document must be posted online, signed by the company, and updated whenever the model changes, creating a paper trail that outsiders can inspect.

That’s a wrap for today, see you all tomorrow.