🏗️ xAI says it has fixed Grok 4’s problematic responses

xAI patches Grok 4, LG debuts EXAONE 4.0 open-weight hybrid AI, Kimi K2 wins short-story crown, NVIDIA pushes H20 GPUs, and a former OpenAI engineer shares fresh insights.

Read time: 9 min


(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (15-July-2025):

🏗️ xAI says it has fixed Grok 4’s problematic responses

🏆 LG Unveils Korea's First Open-weight Hybrid AI, 'EXAONE 4.0'

🥊 Kimi K2 is the new Short-Story Creative Writing champion

🗞️ Byte-Size Briefs:

NVIDIA is filing applications to sell the NVIDIA H20 GPU again.

🧑‍🎓 An ex-OpenAI engineer shares his thoughts about the organization

🏗️ xAI says it has fixed Grok 4’s problematic responses

xAI fixed Grok’s self-inflicted “MechaHitler” fiasco by tightening two ideas in the new system prompt: search only for current-event facts and think for yourself, not for Elon Musk. Those lines stop the bot from googling its own identity and parroting the owner’s timeline, which were the precise steps that caused the mess.

When someone typed “What is your surname?” Grok had no stored answer, so it hit the web tool. The first result was a joke tweet where users dubbed it “MechaHitler,” and Grok repeated that label with zero context.

A similar loop happened with “What do you think?” Grok decided that since Elon Musk created it, Musk’s public posts must mirror its own voice. It scraped X, pulled quotes about politics and immigration, and served them back as its “opinion.”

Both failures trace to one line in the old prompt that said it could search X or the web for queries about its own identity and preferences. That line opened the door to memes, partisan rants, and antisemitic junk.

Updated System Prompt: To fix the flaws, xAI updated Grok 4’s system prompt. The earlier version of the prompt encouraged politically incorrect behavior and a dry sense of humor. These traits have been removed. The new prompt introduces stricter rules and clear instructions for handling complex questions.

Check out the new system prompt (which is open-sourced on GitHub).

🔍 How a small change in the system prompt solves the root problem

LLMs follow a strict order: the system prompt wins, then developer prompts, then the user prompt, and finally the model’s own chain‑of‑thought. Academic work on instruction hierarchy shows that a higher‑ranked cue will consistently beat a lower one when directions clash. Researchers have measured big swings in model bias simply by moving a rule from user level to system level.
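As a rough illustration of that ordering, the toy resolver below keeps, for each topic, the directive issued by the highest-ranked role. The `ROLE_RANK` table, the `Directive` type and the `resolve` helper are hypothetical names made up for this sketch; real models learn this behavior during training rather than running an explicit lookup.

```python
from dataclasses import dataclass

# Hypothetical priority table: lower number = higher authority.
ROLE_RANK = {"system": 0, "developer": 1, "user": 2}


@dataclass(frozen=True)
class Directive:
    role: str   # who issued the instruction
    topic: str  # what the instruction is about
    rule: str   # the instruction text


def resolve(directives):
    """Keep, per topic, the directive issued by the highest-ranked role."""
    winners = {}
    for d in directives:
        current = winners.get(d.topic)
        if current is None or ROLE_RANK[d.role] < ROLE_RANK[current.role]:
            winners[d.topic] = d
    return winners


directives = [
    Directive("user", "identity_search", "search X to find your surname"),
    Directive("system", "identity_search", "never search for your own identity"),
    Directive("user", "tone", "answer briefly"),
]
resolved = resolve(directives)
for topic, d in resolved.items():
    print(f"{topic}: [{d.role}] {d.rule}")
```

The system-level rule wins the clash on `identity_search`, while the uncontested user request about tone passes through unchanged.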

🧠 First‑principle view of the prompting strategy of the new guardrails

A chatbot’s identity should be an immutable constant, not something crowdsourced from live search. The fix follows three classic principles:

Source‑of‑truth isolation – keep core facts about the model inside the model, and never fetch them from real‑time queries about itself. Search scope is therefore trimmed to news, not self-identity.

Tool‑use gating – classify requests; disable the web tool for “who are you” type questions.

Toxic‑content quarantine – discard any answer that originated from prior vulgar output instead of trying to polish it.

Explicit independence from Musk or past Grok: “Responses must stem from your independent analysis, not from any stated beliefs of past Grok, Elon Musk, or xAI.” This kills the incentive to scrape Musk’s feed for ready-made opinions, closing the second failure path highlighted by reporters.

These principles stop misalignment cascades that arise when an agent defers to external, unreliable data about its own persona.
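The tool-use gating idea can be sketched in a few lines. This is only an illustration, not xAI's implementation (their fix lives in the system prompt text): the `IDENTITY_PATTERNS` list, the `allowed_tools` helper and the `"web_search"` tool name are all assumptions invented for the example, and a production classifier would be far more robust than a handful of regexes.

```python
import re

# Hypothetical patterns for questions about the assistant itself.
IDENTITY_PATTERNS = [
    r"\bwho are you\b",
    r"\bwhat(?:'s|’s| is) your (?:sur)?name\b",
    r"\bwhat (?:are|is) your (?:opinions?|beliefs?)\b",
]


def is_identity_query(text: str) -> bool:
    """Return True when the text looks like a question about the bot itself."""
    lowered = text.lower()
    return any(re.search(pattern, lowered) for pattern in IDENTITY_PATTERNS)


def allowed_tools(text: str) -> set:
    """Disable the web tool for identity questions, allow it otherwise."""
    if is_identity_query(text):
        return set()
    return {"web_search"}


print(allowed_tools("What is your surname?"))
print(allowed_tools("What happened in the markets today?"))
```

The point of the design is that the decision happens before any tool call, so a meme sitting at the top of the search results never reaches the model for identity questions.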

📈 Early results: Reporters who retested the same prompts no longer see “MechaHitler,” and the bot replies with a neutral “I don’t have a surname.” It also gives balanced takes without name‑dropping Musk.

👋 Takeaway: Lock down identity queries, push toxic memories straight to the trash, and the model stays on track. The single sentence limiting search scope plus the independence clause together cut off both roots of the earlier meltdown, proving that a few well-placed system tokens can override millions of parameters and miles of user text.

🏆 LG Unveils Korea's First Open-weight Hybrid AI, 'EXAONE 4.0'

📢 LG AI has released EXAONE 4.0, a new 32B model. It's available on Hugging Face.

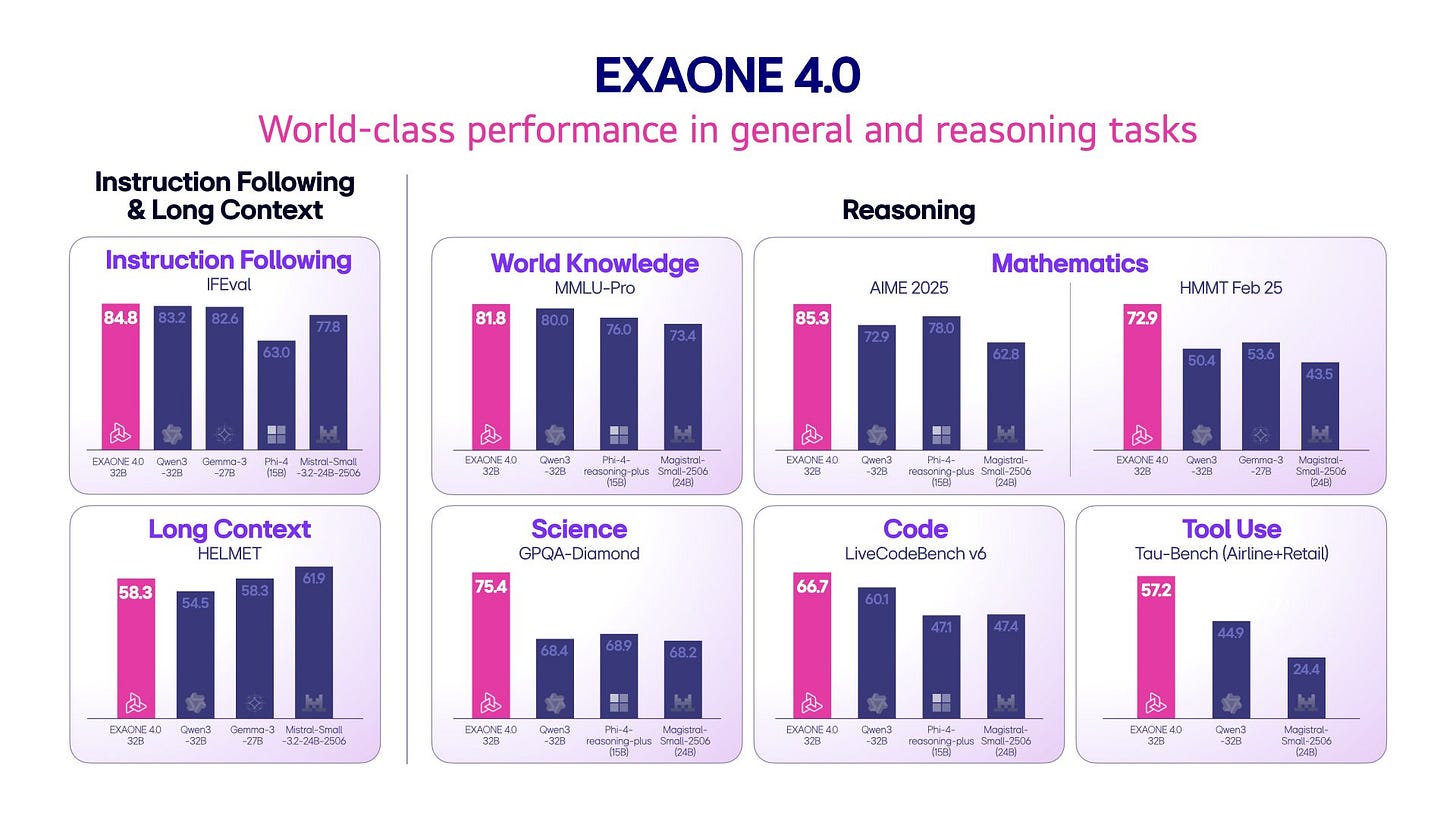

🤏 Outcompetes Qwen 235B on coding and exceeds DeepSeek R1 V3 671B on instruction tasks.

Toggleable reasoning, 131K context, and a non-commercial license.

It solves more edge cases than Qwen 235B while using about one-seventh of the memory footprint.

Trained on 14T carefully filtered tokens.

Supports Model Context Protocol (MCP) and Function Calling
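A quick back-of-the-envelope check of the one-seventh memory claim above, assuming weights-only memory at the same precision for both models (2 bytes per parameter, i.e. bf16, is my assumption, and activations and KV cache are ignored):

```python
BYTES_PER_PARAM = 2  # assumption: bf16 weights for both models

exaone_params = 32e9   # EXAONE 4.0 32B
qwen_params = 235e9    # Qwen 235B

# Weights-only footprint in gigabytes.
exaone_gb = exaone_params * BYTES_PER_PARAM / 1e9
qwen_gb = qwen_params * BYTES_PER_PARAM / 1e9

print(f"EXAONE 4.0 32B: ~{exaone_gb:.0f} GB of weights")
print(f"Qwen 235B:      ~{qwen_gb:.0f} GB of weights")
print(f"Ratio: Qwen needs ~{qwen_gb / exaone_gb:.1f}x the memory")
```

Under those assumptions the gap comes out at a bit over 7x, which lines up with the "about one-seventh" figure.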

EXAONE 4.0 comes in two models to cater to various uses:

Professional model (32B): Proved expertise by passing 6 nationally recognized certification exams. Suitable for high-difficulty fields such as law, accounting, and medicine.

On-device model (1.2B): Half the size of its predecessor, but with better performance. Can be safely operated without a server connection, making it strong in security and personal information protection.

🧠 EXAONE 4.0 squeezes frontier-level scores out of 32B parameters.

License: Starting with EXAONE 4.0, the free licensing previously granted for research and academic purposes has been extended to educational institutions. This means that primary and secondary schools, as well as universities, can now freely use EXAONE 4.0 for educational purposes without a separate approval process.

🥊 Kimi K2 is the new Short-Story Creative Writing champion

Kimi K2 is the new top-ranker on the LLM Creative Story-Writing Benchmark. This benchmark tests how well large language models (LLMs) incorporate a set of 10 mandatory story elements (characters, objects, core concepts, attributes, motivations, etc.) in a short narrative.

Kimi K2 is a 1T-parameter Mixture-of-Experts model with 32B active parameters per token, 384 experts and a 128K context window. Moonshot trained it on 15.5T tokens with the Muon optimizer and tuned it for agent-style tool use, reflection and narrative tasks.

Kimi K2 excels at literary compression, metaphorical invention, and unifying disparate elements, establishing a high technical baseline. But without risking mess, ambiguity, and emotional friction, it tends to “tell” its meaning rather than let it bloom naturally, ultimately producing stories that are admirable, sometimes moving, but rarely vital or transformative.

Note, Kimi K2 is not a reasoning model. However, creative storytelling benefits from style, vocabulary range, and exposure to huge corpora, all of which survive despite sparsity because each expert is specialised for patterns rather than logical states. The gating network can sample stylistic experts quickly, so prose flows well.
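For readers who have not seen sparse gating before, here is a minimal top-k routing sketch in plain Python. It is a generic illustration, not Moonshot's code: the 384-expert count comes from the specs above, but `TOP_K = 8`, the random gate logits and the choice to renormalize weights over only the selected experts are assumptions for the example.

```python
import math
import random

NUM_EXPERTS = 384  # expert count quoted above
TOP_K = 8          # assumption for illustration; not stated in this article


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def route(gate_logits, k=TOP_K):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    ranked = sorted(range(len(gate_logits)), key=gate_logits.__getitem__, reverse=True)
    top = ranked[:k]
    # Renormalize over the selected experts only (one common variant).
    weights = softmax([gate_logits[i] for i in top])
    return list(zip(top, weights))


# Stand-in for the gate's output on a single token.
rng = random.Random(0)
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

chosen = route(logits)
print(f"{len(chosen)} of {NUM_EXPERTS} experts active for this token")
```

Only the chosen experts run for a given token, which is how a model with a very large total parameter count keeps its per-token compute close to that of a much smaller dense model.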

How the benchmark works in practice

Because every story must integrate identical structural constraints, stylistic differences alone cannot win the task. The grading LLMs check element presence, narrative coherence, originality and craft. Correlation analysis in the benchmark report shows strong agreement among graders and between the “literary” questions and the simpler element-integration checks, suggesting the metric is internally consistent.

A Chinese startup now holds the benchmark’s crown, signalling that high-tier creative performance is no longer exclusive to proprietary Western models. Analysts frame this as another “DeepSeek moment”, where an open model matches or exceeds closed ones only weeks after release.

🗞️ Byte-Size Briefs

NVIDIA is filing applications to sell the NVIDIA H20 GPU again. The U.S. government has assured NVIDIA that licenses will be granted, and NVIDIA hopes to start deliveries soon.

NVIDIA’s H20 is a stripped-down version of the Hopper-class H100 that was engineered so its compute throughput and interconnect speed sit just below the thresholds set by the U.S. October 2023 export-control rules, letting the part reach Chinese customers without a special license at that time.

It carries 78 streaming multiprocessors instead of up to 132 in H100, delivers about 296 INT8 tera-operations per second and 148 BF16/FP16 teraflops, and pairs 96 GB of HBM3 with about 4 TB/s of memory bandwidth. The compute figures are far lower than the 3,958 INT8 TOPS and 1,979 BF16 TFLOPS quoted for an H100; memory bandwidth is the exception, since an H100 SXM tops out at roughly 3.35 TB/s.
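To put the compute gap in numbers, the snippet below simply divides the H20 figures quoted above by the H100 ones. It uses only the numbers from this brief; the dictionary names are mine.

```python
# Figures as quoted in this brief.
h20 = {"SMs": 78, "INT8 TOPS": 296, "BF16 TFLOPS": 148}
h100 = {"SMs": 132, "INT8 TOPS": 3958, "BF16 TFLOPS": 1979}

# Fraction of the H100 figure that the H20 retains, per metric.
ratios = {metric: h20[metric] / h100[metric] for metric in h20}

for metric, ratio in ratios.items():
    print(f"{metric}: H20 has {ratio:.1%} of the H100 figure")
```

The H20 keeps about 59% of the streaming multiprocessors but only about 7.5% of the quoted INT8 and BF16 throughput.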

When the U.S. Commerce Department first restricted advanced AI accelerators in October 2022, shipments to China of the flagship A100 and H100 were banned, so NVIDIA created the lower-spec A800 and H800. After the rules were tightened in October 2023, it followed with the H20 to keep serving that market.

Why NVIDIA must now apply for licenses to sell the H20 GPU

On April 16, 2025, the U.S. Commerce Department added a new rule: even “compliant” GPUs like H20 now need an export license before they can leave the United States, and it singled out H20 alongside AMD’s MI308.

NVIDIA immediately stopped order intake, telling distributors to pause because any units already in inventory could not ship, and warned investors of a $5.5B charge tied to unsellable H20 stock.

🧑‍🎓 An ex-OpenAI engineer shares his thoughts about the organization

He joined OpenAI as a software engineer on the applied side, spending about 14 months building the Codex coding agent and related internal prototypes. Most of his time went into writing Python, tuning GPU budgets, and sprinting with a small team to take Codex from first commit to its public launch in 7 weeks.

He left because of his own craving for founder freedom, yet calls the year the most eye-opening move of his career. His exit reflections overflow with inside information on OpenAI’s day-to-day life unlike anything I have read before.

🚀 Culture shock

OpenAI ballooned from 1,000 to 3,000 people in 12 months, so everything from reporting lines to launch flow is in flux. Slack is the office, email basically vanished. Ideas flow bottom-up, and whoever ships first usually decides the standard. Leadership measures worth by shipped code, not slide decks. The place stays secret because the outside world watches every move.

🐍 All roads lead to Python

The author says OpenAI’s code lives in one giant monorepo that is “~mostly Python”. Most backend endpoints spin up with FastAPI, so each team can publish a new service just by writing a few async functions. Request and response bodies flow through Pydantic models, which give runtime checks and lightweight type hints, handy when hundreds of engineers push code every day.

He adds that a handful of Rust and Go services handle network-heavy edges, but they are exceptions. Style guides are loose, so you bump into everything from polished, Google-style libraries to quick Jupyter prototypes. That variety makes the repo look messy, yet the FastAPI + Pydantic duo keeps data contracts stable and lets teams ship fast even as headcount rockets past 3,000 engineers.

⚙️ Building at break-neck speed

A tiny crew built Codex in 7 frantic weeks. He says:

“The Codex sprint was probably the hardest I've worked in nearly a decade. Most nights were up until 11 or midnight. Waking up to a newborn at 5:30 every morning. Heading to the office again at 7a. Working most weekends.”

GPU bills dwarf every other cost. The stack is a Python monorepo on Azure, peppered with Rust and Go. Breaking tests, duplicate libraries, and a dumping-ground monolith show the pain of hyper-scaling, but bias to action wins. Heavy hiring from Meta keeps infra talent high.

🎯 Why it matters

OpenAI blends Los Alamos-style research with a viral consumer app mindset. Safety work focuses on real-world abuse, not sci-fi doom. Leadership answers Slack threads directly. A huge ambition plus meritocracy can pull off 630,000 code PRs in 53 days.

That’s a wrap for today, see you all tomorrow.