"Zero-Shot Novel View and Depth Synthesis with Multi-View Geometric Diffusion"

Below podcast on this paper is generated with Google's Illuminate.

https://arxiv.org/abs/2501.18804

The paper addresses the challenge of creating multi-view consistent 3D scene representations from sparse images for novel view and depth synthesis. Existing methods often rely on intermediate 3D representations which can limit generalization, especially with more input views.

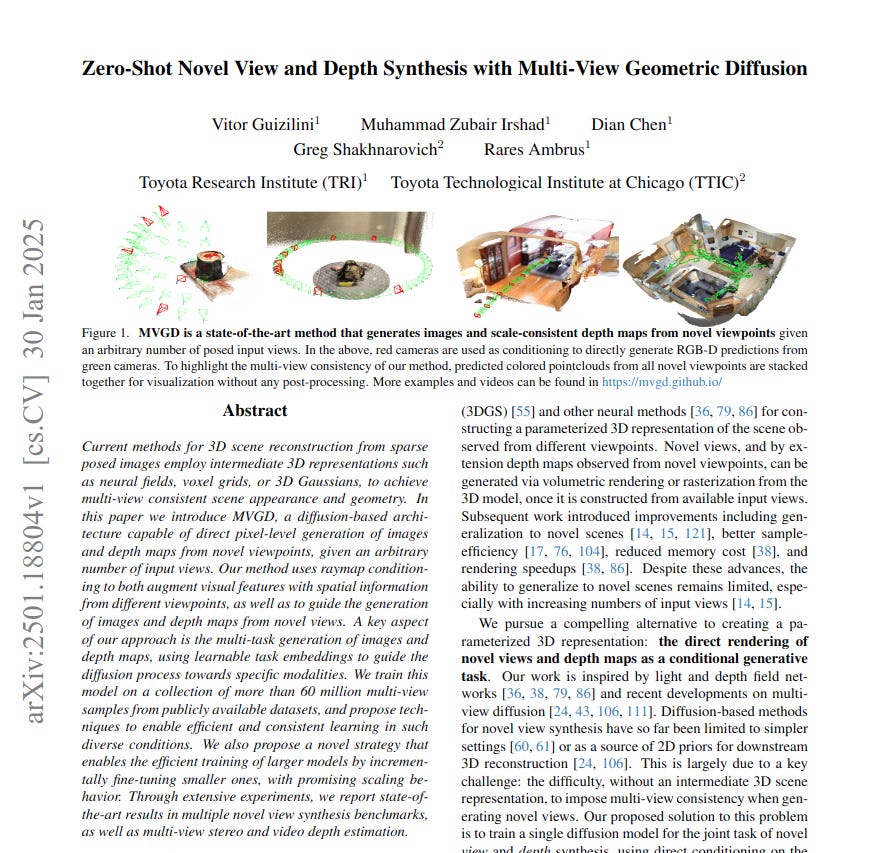

This paper introduces Multi-View Geometric Diffusion (MVGD). MVGD directly generates novel views and depth maps without intermediate 3D representations, using raymap conditioning and multi-task learning on a large and diverse dataset.

-----

📌 MVGD achieves direct pixel-level diffusion for novel view synthesis. It uniquely bypasses explicit 3D scene representations. Raymap conditioning and joint task learning ensure multi-view consistent RGB and depth outputs.

📌 Raymap conditioning is critical for MVGD's geometric awareness. It effectively incorporates camera poses to guide diffusion. This enables scale-consistent depth and novel view synthesis, vital for accuracy.

📌 Recurrent Interface Network architecture allows efficient pixel diffusion in MVGD. Incremental fine-tuning strategy boosts performance of larger models without full retraining. This demonstrates practical scalability.

----------

Methods Explored in this Paper 🔧:

→ This paper proposes Multi-View Geometric Diffusion (MVGD). MVGD is a diffusion-based model for novel view and depth synthesis.

→ MVGD uses raymap conditioning. Raymap conditioning integrates spatial information from input views to guide image and depth generation from new viewpoints.

→ A key component is multi-task learning. MVGD jointly trains for novel view and depth synthesis. This is achieved using learnable task embeddings. Task embeddings direct the diffusion process toward specific output modalities like RGB or Depth.

→ The model employs Scene Scale Normalization (SSN). SSN is a technique to handle varying scales across datasets. SSN normalizes camera extrinsics and depth maps during training and applies the scale back during inference to ensure multi-view consistency in depth predictions.

→ The architecture utilizes Recurrent Interface Networks (RIN). RIN is a Transformer-based architecture that efficiently processes a large number of input views by separating input tokens and latent tokens, making pixel-level diffusion feasible.

-----

Key Insights 💡:

→ Direct pixel-level diffusion for novel view synthesis is possible. It can achieve multi-view consistency without explicit 3D scene representations.

→ Raymap conditioning effectively integrates multi-view geometry into the diffusion process. This enables generating geometrically consistent novel views and depth maps.

→ Joint training of novel view and depth synthesis improves performance. It allows the model to learn shared geometric and appearance priors.

→ Large-scale training on diverse datasets is crucial for generalization. MVGD is trained on over 60 million multi-view samples.

-----

Results 📊:

→ MVGD outperforms previous state-of-the-art methods on novel view synthesis benchmarks such as RealEstate10K and ACID for 2-view input. For example, on RealEstate10K, MVGD achieves a PSNR of 28.41, surpassing MVSplat's 26.39.

→ On multi-view novel view synthesis with 3 to 9 input views, MVGD outperforms methods like ReconFusion and CAT3D on datasets like RealEstate10K, LLFF, DTU, CO3D and MIP-NeRF360 in PSNR, SSIM and LPIPS metrics. For instance, on RealEstate10K with 3 views, MVGD achieves a PSNR of 28.70, while ReconFusion achieves 25.84.

→ For stereo depth estimation on ScanNet, SUN3D and RGB-D, MVGD achieves state-of-the-art results. On ScanNet stereo depth, MVGD attains an Abs.Rel error of 0.065, outperforming EPIO's 0.086.

→ In video depth estimation on ScanNet, MVGD sets a new state-of-the-art. MVGD reaches an Abs.Rel error of 0.041, improving upon NeuralRecon's 0.047.